The post Introduction to Istio Ingress: The easy way to manage incoming Kubernetes app traffic appeared first on Mirantis | Pure Play Open Cloud.

Istio is an open-source, cloud-native service mesh that enables you to reduce the complexity of application deployments and ease the strain on your development teams by giving more visibility and control over how traffic is routed among distributed applications.

Istio Ingress is a subset of Istio that handles the incoming traffic for your cluster. The reason you need ingress is that exposing your entire cluster to the outside world is not secure. Instead, you want to expose just the part of it that handles the incoming traffic and routes that traffic to the applications inside.

This is not a new concept for Kubernetes, and you may be familiar with the Kubernetes Ingress object. Istio Ingress takes this one step further and lets you add routing rules based on routes, headers, IP addresses, and more. Routing gives you the opportunity to implement concepts such as A/B testing, canary deployments, IP black/whitelisting, and so on.

Let’s take a look at how to use Istio Ingress.

Overview of how Istio is integrated in UCP

You can install Istio on any compatible Kubernetes cluster, but to make things simple we’ll look at how to use it with Docker Enterprise Universal Control Plane (UCP). (If you don’t have Docker Enterprise installed, you can get a free trial here.)

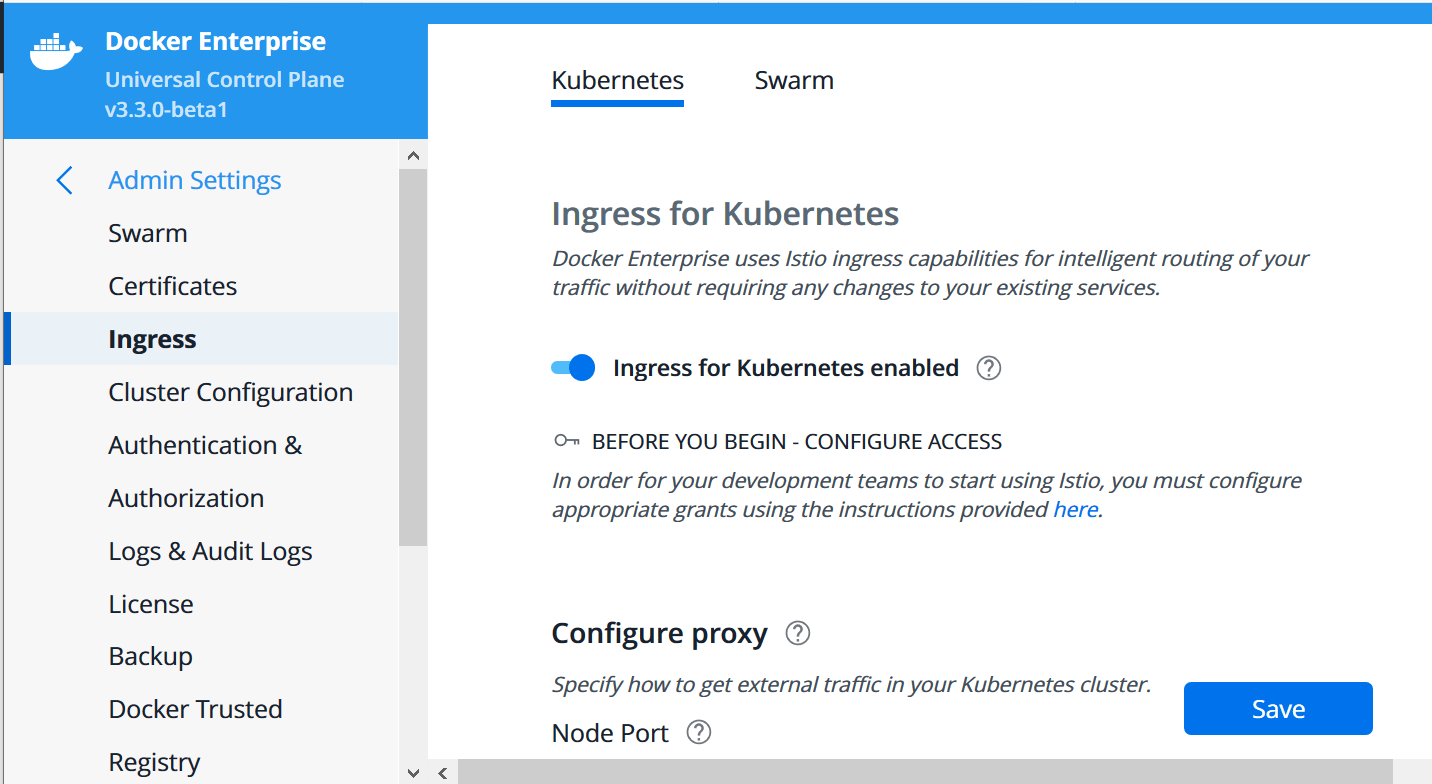

Docker Enterprise 3.1 includes UCP 3.3.0, which includes the ability to simply “turn on” Istio Ingress for your Kubernetes cluster. To do that, execute the following steps:

Log into the UCP user interface.

Click <username> -> Admin Settings -> Ingress. (If you’re following the Getting Started instructions, that will be admin -> Admin Settings -> Ingress.)

Under Kubernetes, click the slider to enable Ingress for Kubernetes.

Next you need to configure the proxy so Kubernetes knows what ports to use for Istio Ingress. (Note that for a production application, you would typically expose your services via a load balancer created by your cloud provider, but for now we’re just looking at how Ingress works.)

Set a specific port for incoming HTTP requests, add the external IP address of the UCP server (if necessary) and click Save to save your settings.

In a few seconds, the configurations will be applied and UCP will deploy the services that power Istio Ingress. From there, you can apply your configurations and make use of the features of Istio Ingress. Let’s look at how to do that.

Deployment of a sample application

The next step is to deploy a sample application to your Kubernetes cluster and expose it via Istio Ingress. As an example, we’ll use a simple httpbin app, which enables you to experiment with HTTP requests. You have two options for performing this step: the UI or the CLI. Which one you use is a matter of preference; both achieve the same result. We’ll cover both in this article, starting with the UI.

Installing the application via the UCP user interface

To install the application using the UI, log into Docker Enterprise and follow these steps:

Go to Kubernetes -> Create.

Select the default namespace and paste the following YAML into the editor:

apiVersion: v1
kind: ServiceAccount
metadata:
  name: httpbin
---
apiVersion: v1
kind: Service
metadata:
  name: httpbin
  labels:
    app: httpbin
spec:
  ports:
  - name: http
    port: 8000
    targetPort: 80
  selector:
    app: httpbin
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: httpbin
spec:
  replicas: 1
  selector:
    matchLabels:
      app: httpbin
      version: v1
  template:
    metadata:
      labels:
        app: httpbin
        version: v1
    spec:
      serviceAccountName: httpbin
      containers:
      - image: docker.io/asankov/httpbin:1.0
        imagePullPolicy: IfNotPresent
        name: httpbin
        ports:
        - containerPort: 80

Click Create.

This YAML tells Kubernetes to create a Deployment for one replica of httpbin, a Service to create a stable IP and domain name within the cluster, and a ServiceAccount under which to run the application.
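If you also have kubectl access to the cluster, a quick check along the following lines can confirm that the objects came up. This is a minimal sketch: the function name `wait_for_httpbin` and the 120-second timeout are assumptions made for illustration, and it assumes the objects were created in the namespace you pass in (default if omitted).

```shell
# Hypothetical helper (not part of UCP or Istio): wait for the httpbin
# Deployment to finish rolling out, then show the Service in front of it.
wait_for_httpbin() {
  ns="${1:-default}"
  kubectl rollout status deployment/httpbin -n "$ns" --timeout=120s &&
  kubectl get service httpbin -n "$ns"
}

# Usage:
#   wait_for_httpbin default
```

The Service listing should show port 8000, which is the port the routing rules later in this article point at.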

Now we have our application running within the cluster, but we have to expose it to the outside world, and that’s where Istio Ingress comes into play.

Create the Istio Gateway

The first step is to create the Istio Gateway that will be the entry point for all the traffic coming into our Kubernetes cluster. To do that, follow these steps:

Go to Kubernetes -> Ingress.

Click on Gateways -> Create and create a gateway object named httpbin-gateway. The gateway name is arbitrary; you will use it later to connect the Virtual Services to the Gateway.

Scroll down and click Add Server to add an HTTP server for port 80 and all hosts (*), and give the port a name such as gateway-port.

Click Generate YML.

Select the default namespace.

Click Create.

Now that we have our gateway in place we need to deploy a Virtual Service.

Deploy a Virtual Service

The Virtual Service is another Istio construct that deals with the actual routing logic we want to put in place. To create it, follow these steps:

Go to Kubernetes -> Ingress.

Click on Virtual Services -> Create and create a new service called httpbin-vs that can take requests from all hosts (*) and links to the httpbin-gateway we created in the previous section.

Click Generate YML.

Select the default namespace.

Click Create. You will be redirected to the Virtual Services view.

Select the new service and click the gear icon to edit it and add the first route. (You can also add the route before creating the service.)

You will see a YAML editor with the Virtual Service configuration. You’ll need to make a few tweaks to add routing information before being able to use the Virtual Service. In this case, we want to create a route that takes all requests (/) and sends them to the httpbin service, which is exposed on port 8000.

…
spec:
  http:
  - match:
    - uri:
        prefix: /
    route:
    - destination:
        host: httpbin
        port:
          number: 8000

In the end, your Virtual Service configuration should look like this:

apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  generation: 1
  name: httpbin-vs
spec:
  gateways:
  - httpbin-gateway
  hosts:
  - '*'
  http:
  - match:
    - uri:
        prefix: /
    route:
    - destination:
        host: httpbin
        port:
          number: 8000

Click Save to apply the changes to the service. Now you can go ahead and access the service. To do that, point your browser at the proxy address you specified when you set up Istio Ingress. The URL takes this form:

<PROTOCOL>://<IP_ADDRESS>:<NODE_PORT>

In my case, the proxy is set up on IP address 34.219.89.235 and port 33000, and the service uses the HTTP protocol, so the URL would be:

http://34.219.89.235:33000
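The same URL can be assembled and probed from a terminal. The sketch below is illustrative only: `ingress_url` is a hypothetical helper name, the IP address and port are the ones from this walkthrough (substitute your own), and the `/get` path assumes the sample image exposes the standard httpbin endpoints.

```shell
# Hypothetical helper: build <PROTOCOL>://<IP_ADDRESS>:<NODE_PORT>
# from its three parts.
ingress_url() {
  printf '%s://%s:%s\n' "$1" "$2" "$3"
}

# Usage (prints only the HTTP status code of the response):
#   curl -s -o /dev/null -w '%{http_code}\n' \
#     "$(ingress_url http 34.219.89.235 33000)/get"
```

A 200 status code would indicate that the Gateway and Virtual Service are routing requests to httpbin.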

Install the application via the CLI

In order to communicate with the UCP via the CLI, you first need to download a client bundle and run the environment script to set kubectl to point to the current cluster. (You can get instructions for how to do that in the Getting started tutorial.)

Now we are ready to start building.

Start by creating the prerequisites for the exercise – a deployment for our app, a service account and a service.

cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: ServiceAccount
metadata:
  name: httpbin
---
apiVersion: v1
kind: Service
metadata:
  name: httpbin
  labels:
    app: httpbin
spec:
  ports:
  - name: http
    port: 8000
    targetPort: 80
  selector:
    app: httpbin
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: httpbin
spec:
  replicas: 1
  selector:
    matchLabels:
      app: httpbin
      version: v1
  template:
    metadata:
      labels:
        app: httpbin
        version: v1
    spec:
      serviceAccountName: httpbin
      containers:
      - image: docker.io/asankov/httpbin:1.0
        imagePullPolicy: IfNotPresent
        name: httpbin
        ports:
        - containerPort: 80
EOF

Next create the Gateway that will accept the incoming connections.

cat <<EOF | kubectl apply -f -
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: httpbin-gateway
spec:
  selector:
    istio: ingressgateway
  servers:
  - hosts:
    - '*'
    port:
      name: http
      number: 80
      protocol: HTTP
EOF

Now create the Virtual Service that is responsible for the routing of the ingress traffic to the application pods.

cat <<EOF | kubectl apply -f -
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  generation: 1
  name: httpbin-vs
spec:
  gateways:
  - httpbin-gateway
  hosts:
  - '*'
  http:
  - match:
    - uri:
        prefix: /
    route:
    - destination:
        host: httpbin
        port:
          number: 8000
EOF

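Now that you have all of this in place, you can open the application in your browser at the proxy URL described in the UI walkthrough above (<PROTOCOL>://<IP_ADDRESS>:<NODE_PORT>).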
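Before opening the browser, it can help to confirm that both Istio objects exist. The following is a minimal sketch: the helper name `check_ingress_objects` is hypothetical, and it uses the fully qualified resource names so that kubectl resolves the Istio resource types rather than any similarly named ones.

```shell
# Hypothetical helper: confirm that the Gateway and Virtual Service
# created above exist in the given namespace (default if omitted).
check_ingress_objects() {
  ns="${1:-default}"
  kubectl get gateways.networking.istio.io httpbin-gateway -n "$ns" &&
  kubectl get virtualservices.networking.istio.io httpbin-vs -n "$ns"
}

# Usage:
#   check_ingress_objects default
```

If either command reports that the object is not found, re-run the corresponding `kubectl apply` step.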

Configuring canary deployments for an application via Istio Ingress

In the previous example, we saw how to expose an application via Istio Ingress and do simple routing, but Istio Ingress is much more powerful than that. Let’s explore more of these capabilities.

In this case, the scenario we are going to explore is canary deployments via Istio Ingress. (Canary deployments are sometimes lumped together with blue/green deployments, but a blue/green deployment switches all traffic from the old version to the new one at once, while a canary deployment shifts it gradually.)

Canary deployments involve deploying two versions of your application side by side and serving the new one to only a portion of your clients. This way, you can gather metrics about your new version without showing it to all of your end users. When you decide everything with the new version is fine, you roll it out to everyone. If not, you roll back.

To explore this scenario we first need to deploy the second version of our application. For that, we have prepared a slightly modified version of httpbin. Everything is the same, except the header on the main page, which says httpbin.org V2 to indicate that this is indeed version 2.

To deploy the new application to your cluster, follow these steps:

Log into Docker Enterprise UCP.

Navigate to Kubernetes -> Create.

Select the default namespace.

Paste the following YAML into the editor and click Create:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: httpbin-v2
spec:
  replicas: 1
  selector:
    matchLabels:
      app: httpbin
      version: v2
  template:
    metadata:
      labels:
        app: httpbin
        version: v2
    spec:
      serviceAccountName: httpbin
      containers:
      - image: docker.io/asankov/httpbin:2.0
        imagePullPolicy: IfNotPresent
        name: httpbin
        ports:
        - containerPort: 80

Now we have V1 and V2 of httpbin running side by side in our cluster. Because both Deployments carry the app: httpbin label that the httpbin Service selects on, requests are spread roughly evenly across the two pods, so approximately 50 percent of your users will see V1 and the other 50 percent will see V2. You can see this for yourself by refreshing the application page.

When doing canary deployments, however, we usually want the percentage of users seeing the new version to start out much smaller, and that’s where Istio Ingress comes into play.

To control the percentage of traffic that goes to each version, we need to create a Destination Rule. This is an Istio construct that we will use to make a distinction between V1 and V2. To do that, follow these steps:

Log into Docker Enterprise UCP.

Go to Kubernetes -> Create.

Select the default namespace.

Paste the following YAML into the editor and click Create:

apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: httpbin-destination-rule
spec:
  host: httpbin
  subsets:
  - name: 'v1'
    labels:
      version: 'v1'
  - name: 'v2'
    labels:
      version: 'v2'

As you can see, we’ve created two different subsets, each pointing to a different version of the application.
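The subsets only match pods whose version label has the expected value, so it is worth checking the labels on the running pods. Here is a small sketch of one way to do that from the CLI; the helper name `show_httpbin_versions` is hypothetical, and the namespace defaults to default.

```shell
# Hypothetical helper: list the httpbin pods with their "version" label
# shown as an extra column, so you can see which subset each pod falls into.
show_httpbin_versions() {
  ns="${1:-default}"
  kubectl get pods -n "$ns" -l app=httpbin -L version
}

# Usage:
#   show_httpbin_versions default
```

You should see one pod labeled v1 and one labeled v2; a pod with a missing or different version label would not belong to either subset.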

Next, we need to edit our existing Virtual Service to make use of the newly created Destination Rule. Go to Kubernetes -> Ingress and click Virtual Services.

Find your Virtual Service in the list (you should have only one at that point) and click the gear icon to Edit.

Edit the service to replace the content of the http property so the service looks like the following YAML and click Save:

apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: httpbin-vs
spec:
  gateways:
  - httpbin-gateway
  hosts:
  - '*'
  http:
  - route:
    - destination:
        host: httpbin
        subset: 'v1'
      weight: 70
    - destination:
        host: httpbin
        subset: 'v2'
      weight: 30

Notice that the routing now adds specific weights to each of the subsets we created in the previous step. Now if you access the URL and start refreshing the page you will see that approximately 7 out of 10 times, you see V1 and the other 3 times you see V2.
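Rather than refreshing by hand, you could sample the page in a loop and count the versions. The sketch below is an illustration under stated assumptions: `count_versions` is a hypothetical helper, and it assumes the V2 page's HTML contains the literal text "httpbin.org V2" (the header described earlier), which may not hold if the markup splits that text.

```shell
# Hypothetical helper: request the page N times (default 20) and count
# how often the response contains the V2 header text.
count_versions() {
  url="$1"
  total="${2:-20}"
  v2=0
  i=0
  while [ "$i" -lt "$total" ]; do
    # Any response without the V2 marker (including a failed request)
    # is counted as V1 in this simple sketch.
    if curl -s "$url" | grep -q 'httpbin.org V2'; then
      v2=$((v2 + 1))
    fi
    i=$((i + 1))
  done
  echo "v1=$((total - v2)) v2=$v2"
}

# Usage (address from this walkthrough; substitute your own):
#   count_versions http://34.219.89.235:33000 100
```

With the 70/30 weights in place, a run of 100 requests should land somewhere near 70 and 30, though the split is probabilistic and small samples will vary.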

So at this point we have successfully completed a canary deployment with a 70-30 ratio. The next step would be to gradually increase the ratio of users seeing the new version until the weight of V2 is 100 percent. At that point we can completely remove the old version.
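One way to script the gradual shift is to generate the Virtual Service manifest from a single number, the V2 weight. This is a sketch, not a prescribed workflow: `render_httpbin_vs` is a hypothetical helper, the manifest mirrors the one shown above, and whether a weight of 0 is accepted for a destination may depend on your Istio version.

```shell
# Hypothetical helper: print the httpbin-vs manifest with the given
# percentage of traffic sent to subset v2 and the remainder to v1.
render_httpbin_vs() {
  v2_weight="$1"
  # Accept only plain integers without leading zeros.
  case "$v2_weight" in
    ''|*[!0-9]*|0?*)
      echo "v2 weight must be an integer from 0 to 100" >&2
      return 1
      ;;
  esac
  if [ "$v2_weight" -gt 100 ]; then
    echo "v2 weight must be an integer from 0 to 100" >&2
    return 1
  fi
  v1_weight=$((100 - v2_weight))
  cat <<EOF
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: httpbin-vs
spec:
  gateways:
  - httpbin-gateway
  hosts:
  - '*'
  http:
  - route:
    - destination:
        host: httpbin
        subset: v1
      weight: ${v1_weight}
    - destination:
        host: httpbin
        subset: v2
      weight: ${v2_weight}
EOF
}

# Usage: move from 30 to 50 percent of traffic on V2.
#   render_httpbin_vs 50 | kubectl apply -f -
```

Deriving the V1 weight from the V2 weight keeps the two values summing to 100 at every step of the rollout.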

Next steps

At this point you know how to use Istio Ingress to safely expose your applications, and to create routing rules that enable you to control traffic flow to create scenarios such as canary deployments. To implement more complex situations, you can use these same techniques to create custom routing rules just as you did in this case.

To see a live demo of Istio Ingress in action, check out this video.

