Beyond Containers: llama.cpp Now Pulls GGUF Models Directly from Docker Hub

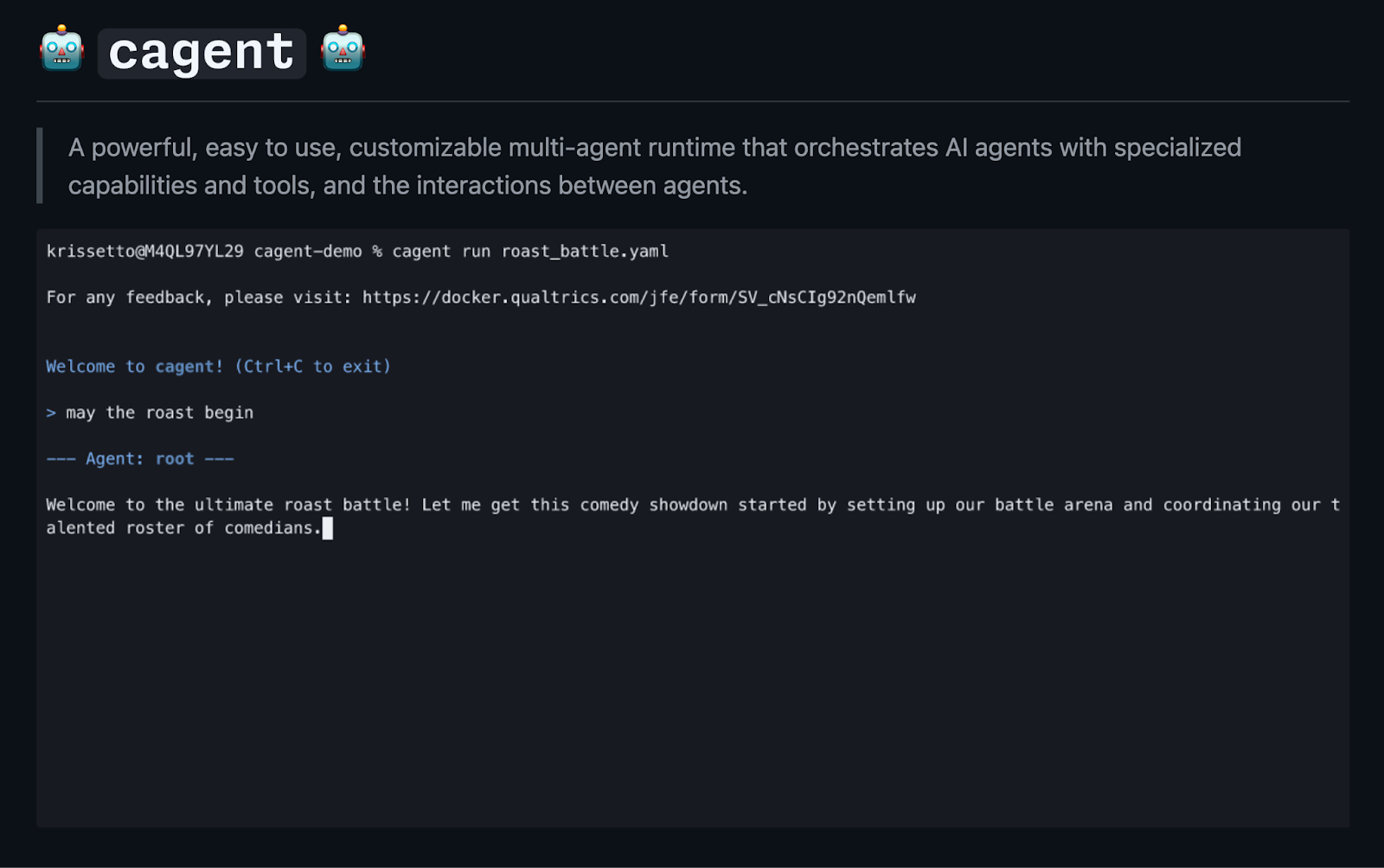

The world of local AI is moving at an incredible pace, and at the heart of this revolution is llama.cpp, the powerhouse C++ inference engine that brings Large Language Models (LLMs) to everyday hardware (it’s also the inference engine that powers Docker Model Runner). Developers love llama.cpp for its performance and simplicity, and we at Docker are obsessed with removing friction from developer workflows.

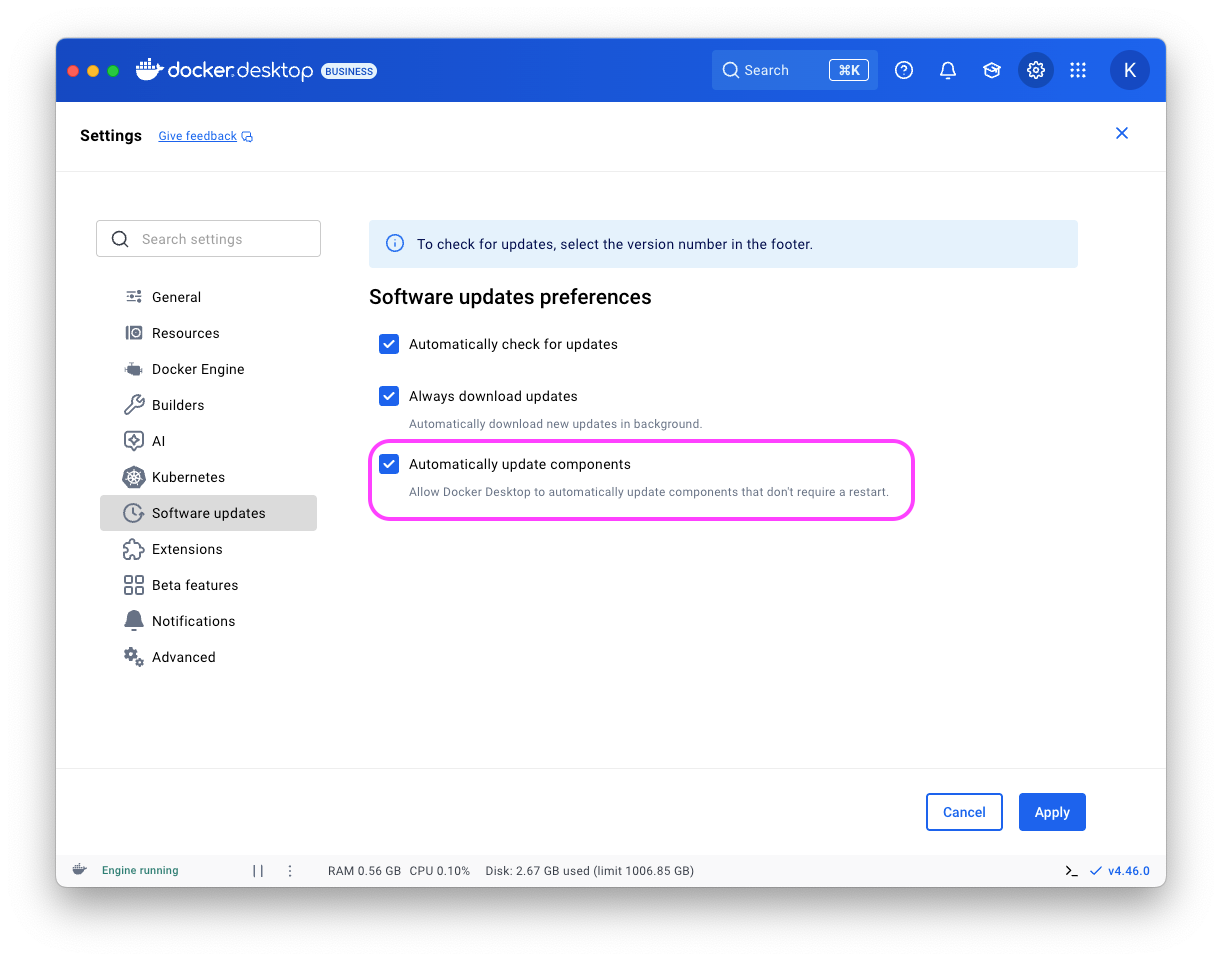

That’s why we’re thrilled to announce a game-changing new feature in llama.cpp: native support for pulling and running GGUF models directly from Docker Hub.

This isn’t about running llama.cpp in a Docker container. This is about using Docker Hub as a powerful, versioned, and centralized repository for your AI models, just like you do for your container images.

Why Docker Hub for AI Models?

Managing AI models can be cumbersome. You’re often dealing with direct download links, manual version tracking, and scattered files. By integrating with Docker Hub, llama.cpp leverages a mature and robust ecosystem to solve these problems.

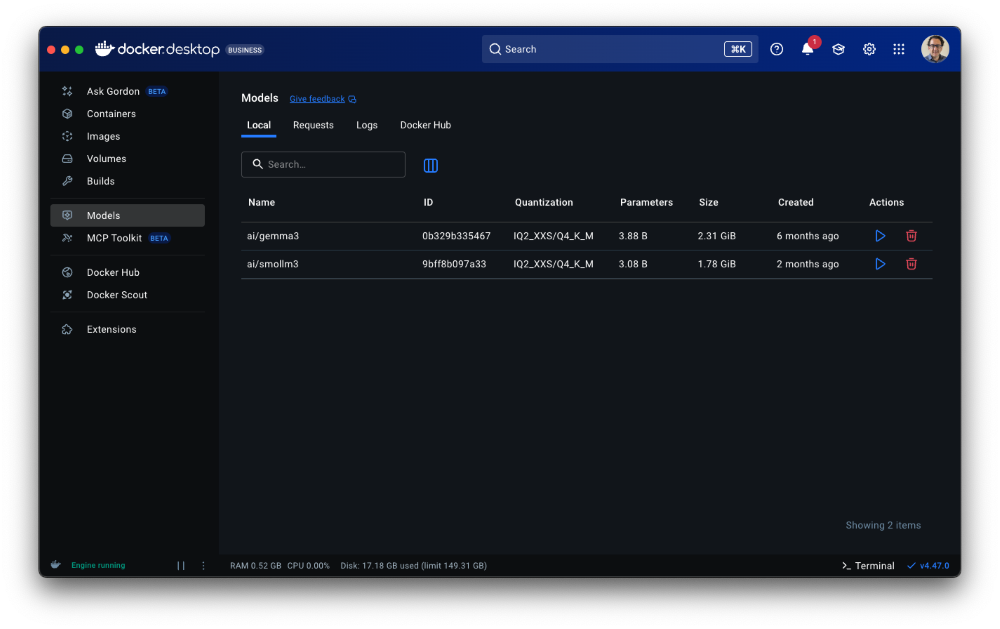

Rock-Solid Versioning: The familiar repository:tag syntax you use for images now applies to models. Easily switch between gemma3 and smollm2:135M-Q4_0 with complete confidence.

Centralized & Discoverable: Docker Hub can become the canonical source for your team’s models. No more hunting for the “latest” version on a shared drive or in a chat history.

Simplified Workflow: Forget curl, wget or manually downloading from web UIs. A single command-line flag now handles discovery, download, and caching.

Reproducibility: By referencing a model by its immutable digest, you ensure that your development, testing, and production environments all use the exact same artifact, leading to more consistent and reproducible results. Tags are convenient, but a tag can later be re-pointed to a newer build, so pin the digest when exact reproducibility matters.
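Why a digest gives that guarantee: an OCI digest is content-addressed, meaning it is the hash algorithm plus the hash of the artifact's exact bytes. The minimal Python sketch below (the helper names are illustrative, not part of llama.cpp or Docker) shows the check a client can run on downloaded bytes. A tag has no such property, because it is only a name that can be moved to different bytes.

```python
import hashlib


def sha256_digest(data: bytes) -> str:
    """Return an OCI-style digest string ("sha256:<hex>") for raw bytes."""
    return "sha256:" + hashlib.sha256(data).hexdigest()


def verify_digest(data: bytes, expected: str) -> bool:
    """Check downloaded bytes against a digest taken from a manifest.

    This sketch only understands sha256 digests.
    """
    if not expected.startswith("sha256:"):
        raise ValueError(f"unsupported digest: {expected}")
    return sha256_digest(data) == expected


if __name__ == "__main__":
    blob = b"stand-in for a GGUF file"  # not a real model
    pinned = sha256_digest(blob)
    print(verify_digest(blob, pinned))         # True
    print(verify_digest(blob + b"!", pinned))  # False: any change breaks the match
```

Because the digest is derived from the content itself, two environments that resolve the same digest are guaranteed to hold byte-identical model files.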

How It Works Under the Hood

This new feature cleverly uses the Open Container Initiative (OCI) specification, which is the foundation of Docker images. The GGUF model file is treated as a layer within an OCI manifest, identified by a special media type like application/vnd.docker.ai.gguf.v3. For more details on why the OCI standard matters for models, check out our blog.
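For illustration, here is roughly what such a manifest looks like, written as a Python dict, together with the lookup a client needs to find the GGUF layer. This is a sketch: the digests, sizes, and non-GGUF media types are made-up placeholders, and only the GGUF media type string is taken from this post.

```python
from __future__ import annotations

from typing import Any, Optional

GGUF_MEDIA_TYPE = "application/vnd.docker.ai.gguf.v3"

# Illustrative OCI image manifest; digests, sizes and placeholder media types are invented.
EXAMPLE_MANIFEST: dict[str, Any] = {
    "schemaVersion": 2,
    "mediaType": "application/vnd.oci.image.manifest.v1+json",
    "config": {
        "mediaType": "application/vnd.example.model.config+json",  # placeholder
        "digest": "sha256:" + "0" * 64,
        "size": 512,
    },
    "layers": [
        {
            "mediaType": "application/vnd.example.license",  # placeholder
            "digest": "sha256:" + "1" * 64,
            "size": 11357,
        },
        {
            "mediaType": GGUF_MEDIA_TYPE,  # the layer that holds the model weights
            "digest": "sha256:" + "2" * 64,
            "size": 1_000_000_000,
        },
    ],
}


def find_gguf_layer(manifest: dict[str, Any]) -> Optional[dict[str, Any]]:
    """Return the first layer whose media type marks it as a GGUF model file."""
    for layer in manifest.get("layers", []):
        if layer.get("mediaType") == GGUF_MEDIA_TYPE:
            return layer
    return None  # no GGUF layer: this artifact is not a model llama.cpp can load


if __name__ == "__main__":
    layer = find_gguf_layer(EXAMPLE_MANIFEST)
    if layer is not None:
        print(layer["digest"], layer["size"])
```

Selecting by media type rather than by layer position means other layers (configuration, license text) can sit alongside the weights without confusing the client.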

When you use the new --docker-repo flag, llama.cpp performs the following steps:

Authentication: It first requests an authentication token from the Docker registry to authorize the download.

Manifest Fetch: It then fetches the manifest for the specified model and tag (e.g., ai/gemma3:latest).

Layer Discovery: It parses the manifest to find the specific layer that contains the GGUF model file by looking for the correct media type.

Blob Download: Using the layer’s unique digest (a sha256 hash), it downloads the model file directly from the registry’s blob storage.

Caching: The model is saved to a local cache, so subsequent runs skip the download entirely.

This entire process is seamless and happens automatically when you pass the flag.
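The requests behind these steps follow the public Docker Registry HTTP API v2, standardized as the OCI Distribution Specification. The Python sketch below only builds the URLs and headers, so it runs offline; it is not llama.cpp's code. It assumes anonymous pulls of a public Docker Hub repository and a model stored as a single OCI image manifest, and the cache layout in `cache_path` is hypothetical.

```python
from __future__ import annotations

from pathlib import Path
from urllib.parse import urlencode

# Public Docker Hub endpoints for the token service and the registry API.
AUTH_URL = "https://auth.docker.io/token"
REGISTRY_URL = "https://registry-1.docker.io"


def token_url(repo: str) -> str:
    """Step 1: URL for an anonymous, pull-only bearer token (JSON reply has a "token" field)."""
    query = urlencode({"service": "registry.docker.io",
                       "scope": f"repository:{repo}:pull"})
    return f"{AUTH_URL}?{query}"


def manifest_request(repo: str, tag: str, token: str) -> tuple[str, dict[str, str]]:
    """Step 2: URL and headers for fetching the manifest of repo:tag."""
    url = f"{REGISTRY_URL}/v2/{repo}/manifests/{tag}"
    headers = {
        "Authorization": f"Bearer {token}",
        "Accept": "application/vnd.oci.image.manifest.v1+json",
    }
    return url, headers


def blob_url(repo: str, digest: str) -> str:
    """Step 4: URL of the blob (the GGUF file) addressed by the layer's digest."""
    return f"{REGISTRY_URL}/v2/{repo}/blobs/{digest}"


def cache_path(cache_dir: Path, digest: str) -> Path:
    """Step 5 (hypothetical layout): one file per digest; if it exists, skip the download."""
    return cache_dir / digest.replace(":", "-")


if __name__ == "__main__":
    repo, tag = "ai/gemma3", "latest"
    print(token_url(repo))
    url, headers = manifest_request(repo, tag, token="<token from step 1>")
    print(url)
    # Step 3 (omitted): choose the layer whose mediaType is
    # application/vnd.docker.ai.gguf.v3 and read its "digest" field.
    digest = "sha256:" + "0" * 64  # placeholder, not a real layer digest
    print(blob_url(repo, digest))
    print(cache_path(Path("model-cache"), digest))
```

In a real client, each URL would be fetched in order with an HTTP library, the bearer token from step 1 reused for steps 2 and 4, and the downloaded blob checked against its digest before it is written to the cache. Registries are allowed to answer blob requests with a redirect, so the HTTP client should follow redirects.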

Get Started in Seconds

Ready to try it? If you have a recent build of llama.cpp, you can serve a model from Docker Hub with one simple command. The new flag is --docker-repo (or -dr).

Let’s run gemma3, a model available from Docker Hub.

```shell
# Now, serve a model from Docker Hub!
llama-server -dr gemma3
```

The first time you run this, llama.cpp logs the download progress; after that, it uses the cached copy. It’s that easy! The default organization is ai/, so gemma3 resolves to ai/gemma3. The default tag is :latest, but you can request a specific one, e.g. gemma3:1B-Q4_K_M.
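Those defaults amount to a small normalization step. The Python sketch below (`resolve_model_ref` is a hypothetical helper, not a llama.cpp function) expands a short name the way this paragraph describes. It assumes a plain Docker Hub reference with no registry hostname and no digest; `myorg/my-model` is a made-up repository used only to show that an explicit organization is kept.

```python
from __future__ import annotations

DEFAULT_ORG = "ai"
DEFAULT_TAG = "latest"


def resolve_model_ref(ref: str) -> tuple[str, str]:
    """Expand a short Docker Hub model reference into (repository, tag).

    Assumes a plain "[org/]name[:tag]" reference: no registry host, no digest.
    """
    name, _, tag = ref.partition(":")
    if "/" not in name:
        name = f"{DEFAULT_ORG}/{name}"  # "gemma3" -> "ai/gemma3"
    return name, tag or DEFAULT_TAG   # missing or empty tag -> "latest"


if __name__ == "__main__":
    print(resolve_model_ref("gemma3"))            # ('ai/gemma3', 'latest')
    print(resolve_model_ref("gemma3:1B-Q4_K_M"))  # ('ai/gemma3', '1B-Q4_K_M')
    print(resolve_model_ref("myorg/my-model"))    # ('myorg/my-model', 'latest')
```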

For a complete Docker-integrated experience with support for pushing and pulling OCI model artifacts, try out Docker Model Runner. The Docker Model Runner equivalent, which also opens a chat with the model, is:

```shell
# Pull, serve and chat with a model from Docker Hub!
docker model run ai/gemma3
```

The Future of AI Model Distribution

This integration represents a powerful shift in how we think about distributing and managing AI artifacts. By using OCI-compliant registries like Docker Hub, the AI community can build more robust, reproducible, and scalable MLOps pipelines.

This is just the beginning. We envision a future where models, datasets, and the code that runs them are all managed through the same streamlined, developer-friendly workflow that has made Docker an essential tool for millions.

Check out the latest llama.cpp to try it out, and explore the growing collection of models on Docker Hub today!

Learn more

Read our quickstart guide to Docker Model Runner.

Visit our Model Runner GitHub repo! Docker Model Runner is open-source, and we welcome collaboration and contributions from the community!

Discover curated models on Docker Hub

Source: https://blog.docker.com/feed/