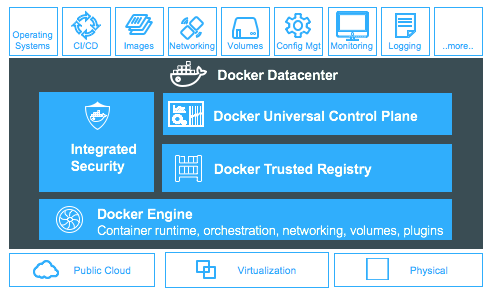

Introducing Docker Datacenter on 1.13 with Secrets, Security Scanning, Content Cache and more

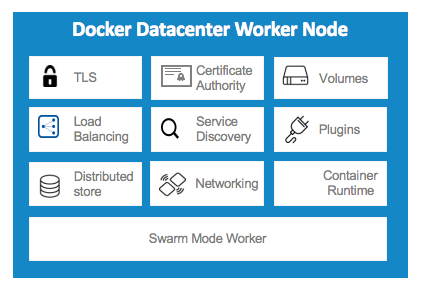

It’s another exciting day with a new release of Docker Datacenter (DDC) on 1.13. This release includes loads of new features around app services, security, image distribution and usability.

Check out the upcoming webinar on Feb 16th for a demo of all the latest features.

Let’s dig into some of the new features:

Integrated Secrets Management

This release of Docker Datacenter includes integrated support for secrets management from development all the way to production.

This feature allows users to store confidential data (e.g. passwords, certificates) securely on the cluster and inject these secrets into a service. Developers can reference the secrets needed by different services in the familiar Compose file format and hand off to IT for deployment in production. Check out the blog post on Docker secrets management for more details on implementation. DDC integrates secrets and adds several enterprise-grade enhancements, including lifecycle management and deployment of secrets in the UI, label-based granular access control for enhanced security, and auditing of users’ access to secrets via syslog.
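From the application's side, a secret granted to a service shows up as a file inside the container, by default under `/run/secrets/<secret name>`. The snippet below is a minimal sketch of reading one; the helper name `read_secret` and the secret name `db_password` are illustrative assumptions, not part of DDC.

```python
from pathlib import Path

# Default location where Docker mounts secrets granted to a service.
DEFAULT_SECRETS_DIR = Path("/run/secrets")


def read_secret(name: str, secrets_dir: Path = DEFAULT_SECRETS_DIR) -> str:
    """Return the contents of a secret file, minus any trailing newline.

    `read_secret` is a hypothetical helper; Docker only provides the file.
    """
    secret_file = Path(secrets_dir) / name
    return secret_file.read_text(encoding="utf-8").rstrip("\n")
```

Inside a service that has been granted a secret named `db_password` (a made-up name), `read_secret("db_password")` would return its value without the secret ever appearing in the image or in environment variables.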

Image Security Scanning and Vulnerability Monitoring

Another element of delivering safer apps is ensuring trusted delivery of the code that makes up each app. In addition to Docker Content Trust (already available in DDC), we are excited to add Docker Security Scanning to enable binary-level scanning of images and their layers. Docker Security Scanning creates a bill of materials (BOM) of your image and checks packages and versions against a number of CVE databases. The BOM is stored and checked regularly against the CVE databases, so if a new vulnerability is reported against an existing package, users can be notified of it. Additionally, system admins can integrate their CI and build systems with the scanning service using the new registry webhooks.
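The idea of re-checking a stored BOM against updated advisories can be sketched in a few lines. This is a toy model only: the data shapes, the function name `find_vulnerabilities`, and the placeholder CVE IDs are assumptions made for illustration and do not reflect how Docker Security Scanning is implemented.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical data shapes:
#   a BOM maps package name -> installed version;
#   the advisory feed maps CVE ID -> (package name, affected versions).
Bom = Dict[str, str]
Advisories = Dict[str, Tuple[str, Set[str]]]


def find_vulnerabilities(bom: Bom, advisories: Advisories) -> List[str]:
    """Return the sorted CVE IDs whose affected package and version are in the BOM."""
    hits = []
    for cve_id, (package, affected_versions) in advisories.items():
        # bom.get() yields None for packages the image does not contain,
        # and None is never a member of the affected-version set.
        if bom.get(package) in affected_versions:
            hits.append(cve_id)
    return sorted(hits)


# The BOM is stored once; the check is repeated whenever the feed changes.
stored_bom = {"openssl": "1.0.1", "zlib": "1.2.8"}
feed = {"CVE-0000-0001": ("openssl", {"1.0.1", "1.0.2"})}  # placeholder ID
print(find_vulnerabilities(stored_bom, feed))
```

Because the BOM is kept after the initial scan, a newly published advisory only requires another lookup rather than a rescan of the image.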

HTTP Routing Mesh (HRM)

Previously available as an experimental feature, the HTTP (Hostname) based routing mesh is available for production in this release. HRM extends the existing swarm-mode networking routing mesh by enabling you to route HTTP-based hostnames to your services.
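Conceptually, hostname-based routing comes down to looking up the HTTP `Host` header in a table of hostnames and services. The sketch below illustrates that lookup only; `route_by_host` and the route table are hypothetical and say nothing about how HRM is built internally.

```python
from typing import Dict, Optional


def route_by_host(host_header: str, routes: Dict[str, str]) -> Optional[str]:
    """Map an HTTP Host header to a service name, ignoring case and any port.

    Simplification: IPv6 literal hosts such as "[::1]:8080" are not handled.
    """
    hostname = host_header.strip().lower().split(":", 1)[0]
    return routes.get(hostname)


# Hypothetical route table: hostname -> service name.
routes = {"shop.example.com": "shop-web", "api.example.com": "shop-api"}
print(route_by_host("Shop.Example.com:8080", routes))
```

Requests for a hostname that is not in the table return `None`, which a real router would turn into an error response.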

New features in this release include the ability to manage HRM for a service via the UI, HTTPS pass-through support via SNI, multiple HRM networks for application isolation, and sticky sessions integration. See the screenshot below for how HRM can be easily configured within the DDC admin UI.

Compose for Services

This release of DDC has increased support for managing complex distributed applications in the form of stacks: groups of services, networks, and volumes. DDC allows users to create stacks via Compose files (version 3.1 YAML) and deploy them through both the UI and CLI. Developers can specify the stack in the familiar Compose file format; for a seamless handoff, IT can cut and paste the Compose file and deploy services into production.
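To make the handoff concrete, here is a hypothetical stack expressed as the Python equivalent of a version 3.1 Compose file, plus a small pre-deployment check that every secret a service references is declared at the top level. The service, image, and secret names are invented, and `undeclared_secrets` is an illustrative helper, not a DDC or Compose API.

```python
from typing import Any, Dict, List

# Hypothetical stack, mirroring the structure of a version 3.1 Compose file.
stack: Dict[str, Any] = {
    "version": "3.1",
    "services": {
        "web": {"image": "example/web:latest", "secrets": ["db_password"]},
        "worker": {"image": "example/worker:latest", "secrets": ["api_key"]},
    },
    "secrets": {
        # "external" means the secret already exists on the cluster.
        "db_password": {"external": True},
    },
}


def undeclared_secrets(compose: Dict[str, Any]) -> List[str]:
    """Return secret names used by services but missing from top-level `secrets`."""
    declared = set(compose.get("secrets", {}))
    missing = set()
    for service in compose.get("services", {}).values():
        for entry in service.get("secrets", []):
            # Secrets may be listed by name or as a mapping with a "source" key.
            name = entry if isinstance(entry, str) else entry.get("source")
            if name is not None and name not in declared:
                missing.add(name)
    return sorted(missing)


print(undeclared_secrets(stack))
```

A check like this could run in CI before the file reaches IT, so a missing declaration is caught before deployment.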

Once deployed, DDC users are able to manage stacks directly through the UI and click into individual services, tasks, networks, and volumes to manage their lifecycle operations.

Content Cache

For companies with app teams that are distributed across a number of locations and want to maintain centralized control of images, developer performance is top of mind. Having developers connect to repositories thousands of miles away may not always make sense when considering latency and bandwidth. New for this release is the ability to set up satellite registry caches for faster pulls of Docker images. Caches can be assigned to individual users or configured by each user based on their current location. The registry caches can be deployed in a variety of scenarios, including high availability and complex cache-chaining setups for the most stringent datacenter environments.
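Cache chaining can be pictured as each cache answering from its local store when it can and asking the next node upstream when it cannot. The following is a simplified in-memory model under that assumption; the class `RegistryNode` and the node names are hypothetical and are not the DDC content cache implementation.

```python
from typing import Dict, Optional, Tuple


class RegistryNode:
    """A registry or cache that falls back to an upstream node on a miss."""

    def __init__(self, name: str, upstream: Optional["RegistryNode"] = None) -> None:
        self.name = name
        self.upstream = upstream
        self.store: Dict[str, bytes] = {}

    def pull(self, image: str) -> Tuple[bytes, str]:
        """Return (image data, name of the node that already held the image)."""
        if image in self.store:
            return self.store[image], self.name
        if self.upstream is None:
            raise KeyError(f"{image} not found in {self.name}")
        data, served_by = self.upstream.pull(image)
        self.store[image] = data  # keep a local copy for later pulls
        return data, served_by


# Hypothetical chain: office cache -> regional cache -> central registry.
central = RegistryNode("central")
central.store["app:1.0"] = b"layers"
regional = RegistryNode("regional", upstream=central)
office = RegistryNode("office", upstream=regional)

print(office.pull("app:1.0")[1])  # first pull travels up the chain
print(office.pull("app:1.0")[1])  # second pull is served locally
```

The first pull pays the long-distance cost once; every cache along the path keeps a copy, so later pulls from the same location stay local.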

Registry Webhooks

To better integrate with external systems, DDC now includes webhooks to notify external systems of registry events. These events include pushes and pulls in individual repositories, security scanning events, creation or deletion of repositories, and system events like garbage collection. With this full set of integration points, you can fully automate your continuous integration environment and Docker image build process.
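On the receiving end, a webhook consumer typically parses the JSON body and dispatches on the event type. The sketch below shows that pattern only: the `type` field, the event names `push` and `scan_completed`, and the payload keys are all hypothetical, so consult the registry documentation for the real payload format.

```python
import json
from typing import Any, Callable, Dict

Handler = Callable[[Dict[str, Any]], str]


def dispatch_event(body: bytes, handlers: Dict[str, Handler]) -> str:
    """Parse a webhook body and pass it to the handler registered for its type."""
    event = json.loads(body)
    handler = handlers.get(event.get("type", ""))
    if handler is None:
        return "ignored"  # unknown event types are skipped, not treated as errors
    return handler(event)


# Hypothetical handlers keyed by hypothetical event type names.
handlers: Dict[str, Handler] = {
    "push": lambda e: f"trigger build for {e['repository']}",
    "scan_completed": lambda e: f"review scan of {e['repository']}",
}

sample = json.dumps({"type": "push", "repository": "example/web"}).encode()
print(dispatch_event(sample, handlers))
```

A CI system could expose a function like this behind an HTTP endpoint and start a build or a deployment whenever a matching event arrives.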

Usability Improvements

As always, we have added a number of features to refine and continuously improve the system usability for both developers and IT admins.

Cluster- and node-level metrics on CPU, memory, and disk usage. Nodes can be sorted by usage to troubleshoot issues quickly, and the metrics are also rolled up into the dashboard for a bird’s-eye view of resource usage in the cluster.

Smoother application update process with support for rollback during rolling updates, and status notifications for service updates.

Easier installation and configuration with the ability to copy a Docker Trusted Registry install command directly from the Universal Control Plane UI

Additional LDAP/AD configuration options in the Universal Control Plane UI

Cloud templates on AWS and Azure to deploy DDC in a few clicks

These new features and more are demonstrated in a Docker Datacenter demo video series.

Get started with Docker Datacenter

These are just the latest features to join Docker Datacenter.

Learn More about Docker Secrets Management

Get the FREE 30 day trial

Register for an upcoming webinar

The post Introducing Docker Datacenter on 1.13 with Secrets, Security Scanning, Content Cache and more appeared first on Docker Blog.

Source: https://blog.docker.com/feed/