Amazon Elastic Container Service (Amazon ECS) now includes enhancements that improve service availability during rolling deployments. These enhancements help maintain availability when new application version tasks are failing, when current tasks are unexpectedly terminated, or when scale-out is triggered during deployments. Previously, when tasks in your currently running version became…

The Waldwiesel is meant to stand out with remarkable technology and a 3D-printed steel frame. But what does that deliver on the road and off-road? A hands-on test by Mario Petzold (E-Bike, Test) Source: Golem

AI-powered developer tools claim to boost your productivity, doing everything from intelligent auto-complete to [fully autonomous feature work](https://openai.com/index/introducing-codex/). But the productivity gains users report have been something of a mixed bag. Some groups claim to get 3-5x (or more) productivity boosts, while other devs claim to get no benefit at…

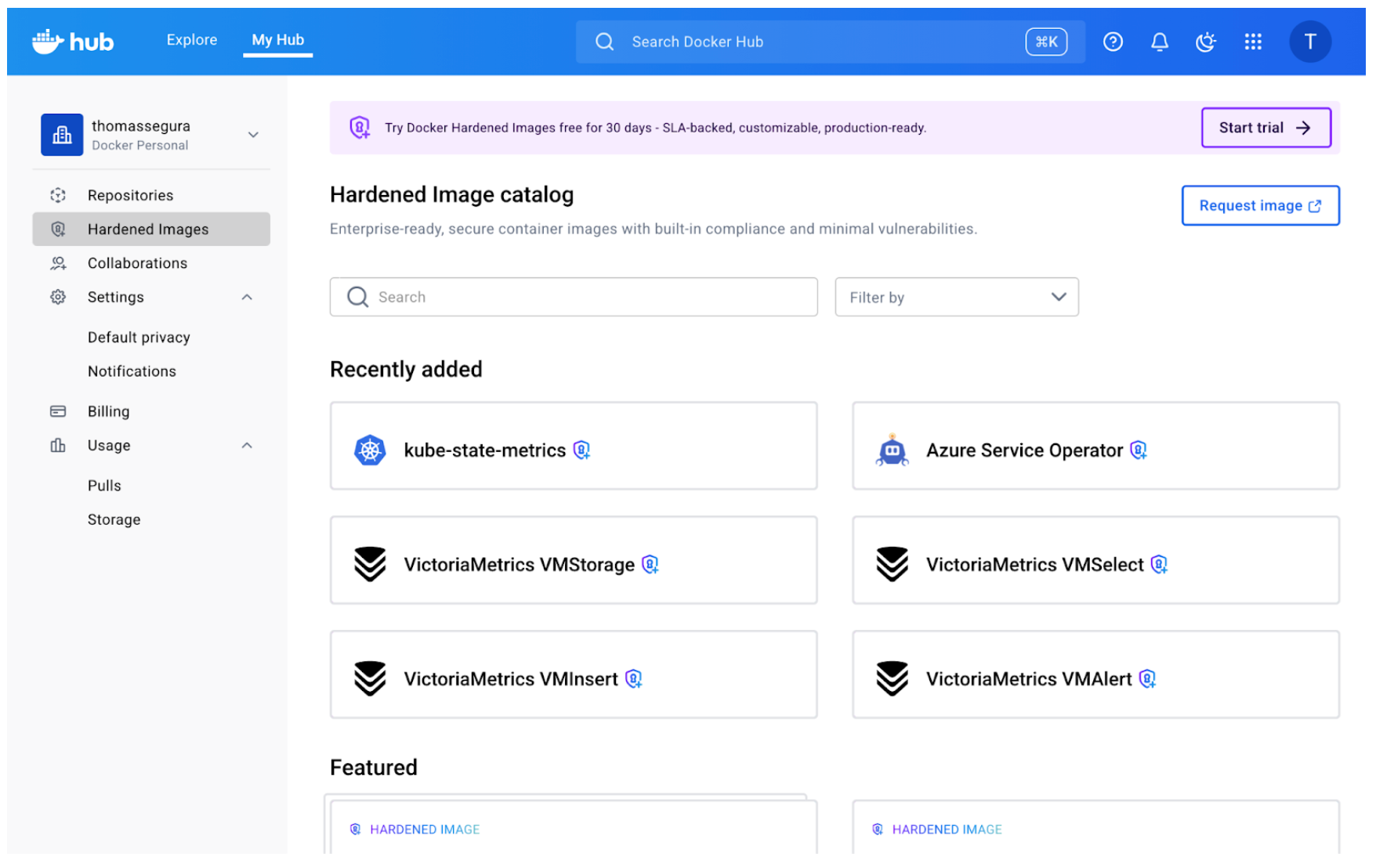

First steps: Run your first secure, production-ready image. Container base images form the foundation of your application security. When those foundations contain vulnerabilities, every service built on top inherits the same risk. Docker Hardened Images addresses this at the source. These are continuously maintained, minimal base images designed for security: stripped…

Starting today, AWS Network Firewall is available in the AWS New Zealand (Auckland) Region, enabling customers to deploy essential network protections for all their Amazon Virtual Private Clouds (VPCs). AWS Network Firewall is a managed firewall service that is easy to deploy. The service automatically scales with network traffic volume…

Amazon EventBridge introduces a new, intuitive, console-based visual rule builder with a comprehensive event catalog for discovering and subscribing to events from custom applications and over 200 AWS services. The new rule builder integrates the EventBridge Schema Registry with an updated event catalog and intuitive drag-and-drop canvas…

AWS Marketplace now delivers purchase agreement events via Amazon EventBridge, transitioning from our Amazon Simple Notification Service (SNS) notifications for Software as a Service and Professional Services product types. This enhancement simplifies event-driven workflows for both sellers and buyers by enabling seamless integration of AWS Marketplace Agreements, reducing operational overhead,…

AWS Lambda announces Provisioned Mode for event source mappings (ESMs) that subscribe to Amazon SQS, a feature that allows you to optimize the throughput of your SQS ESM by provisioning event polling resources that remain ready to handle sudden spikes in traffic. An SQS ESM configured with Provisioned Mode scales 3x faster…

A Berlin court has awarded Idealo 465 million euros in damages because Google is said to have favored its own comparison service. (Google, Legal disputes) Source: Golem

No fewer than three Belkin devices may overheat and catch fire, so users should send them back immediately. (Belkin, Consumer protection) Source: Golem