Microsoft’s commitment to supporting cloud infrastructure demand in Asia


As Asia surges ahead in digital transformation, Microsoft is committed to expanding its cloud infrastructure to match the continent’s demand. In 2025, Microsoft launched new Azure datacenter regions in Malaysia and Indonesia, and it is set to expand further with new datacenter regions launching in India and Taiwan in 2026. Microsoft is also announcing its intent to deliver a second datacenter region in Malaysia, called Southeast Asia 3. Across Asian markets, the company is investing billions to expand its AI infrastructure footprint, bringing cutting-edge AI, next-generation networking, and scalable storage to the world’s most populous continent. These investments will empower enterprises across Asia to scale seamlessly, unlock the full value of their data, and capture new opportunities for growth.

Learn more about Microsoft Cloud Adoption Framework for Azure

Microsoft’s global infrastructure spans over 70 datacenter regions across 33 countries, more than any other cloud provider, and is designed to meet data residency, compliance, and performance requirements. In Asia, where businesses across financial services, public sector, manufacturing, retail, and start-ups are deeply integrated into the global economy, Microsoft’s strategically distributed datacenters deliver seamless scalability, low-latency connectivity, and regulatory assurance. By keeping critical data and applications close to their users on fault-tolerant, high-capacity networking infrastructure, organizations can operate confidently across local and international markets, delivering fast, reliable services that meet customer expectations and comply with legal requirements.

With a dozen datacenter regions already live across Asia, we are making significant investments to expand across the continent. The new regions will become some of our most integral datacenter regions in Asia:

East Asia

East Asia, a historically established market in our Japan and Korea geographies, will see continued growth and expansion. In April 2025, Microsoft launched Azure Availability Zones in the Japan West region, enhancing resilience and efficiency as part of a two-year plan to invest in Japan’s AI and cloud infrastructure.

Additionally, Microsoft announced the launch of Microsoft 365 and associated data residency offerings for commercial customers in the Taiwan North cloud region. Azure services are also accessible to select customers in this region, with general availability for all customers expected in 2026.

Southeast Asian nations

Microsoft is also deepening its commitment to Southeast Asian countries through substantial investments, marked by the launch of new cloud regions in Indonesia and Malaysia in May 2025. The recently launched regions are designed with AI-ready hyperscale cloud infrastructure and three availability zones, providing organizations across Southeast Asia with secure, low-latency access to cloud services.

The recently launched Indonesia Central region is a welcome addition to this part of the world. It offers comprehensive Azure services and local Microsoft 365 availability, giving customers new capabilities to innovate. Our continued investments in Indonesia are expected to drive significant expansion, positioning this datacenter region to become one of the largest in Asia over the coming years. Today, more than 100 organizations are already using the Microsoft Cloud from Indonesia to accelerate their transformation, including:

BINUS University is leveraging Azure Machine Learning and Azure OpenAI Service to enhance both campus operations and student learning. AI enables accurate student intake forecasting and automates diploma supplement summaries for over 10,000 graduates annually, improving operational efficiency. On the academic side, BINUS is developing AI-powered tools like personalized AI Tutors, generative AI in libraries for tailored book recommendations, and the Beelingua platform for interactive language learning, all aimed at creating a more adaptive, inclusive, and future-ready educational experience.

GoTo Group integrates GitHub Copilot into its engineering workflow, aiming to boost productivity and innovation. Nearly a thousand engineers have adopted the AI-powered coding assistant, which offers real-time suggestions, chat-based help, and simplified explanations of complex code, significantly shortening the time to innovate.

Customers such as Adaro, BCA, BINUS University, Pertamina, Telkom Indonesia, and Manulife have joined the Indonesia Central cloud region, gaining local access to Microsoft’s hyperscale infrastructure.

The Malaysia West datacenter region, our first cloud region in the country, helps empower Malaysia’s digital and AI transformation with access to Azure and Microsoft 365. A diverse group of organizations, enterprises, and startups are already leveraging the Malaysia West region, including:

PETRONAS, Malaysia’s global energy and solutions provider, is partnering with Microsoft to leverage hyperscale cloud infrastructure to continue advancing its digital and AI transformation, as well as clean energy transition efforts in Asia.

Other customers using Microsoft’s new cloud region include FinHero, SCICOM Berhad, Senang, SIRIM Berhad, TNG Digital (the operator of TNG eWallet), and Veeam, with more organizations expected to come on board as demand for secure, scalable, and locally hosted cloud services continues to grow across industries.

In Malaysia, Microsoft is expanding its digital infrastructure footprint further with a new datacenter region, Southeast Asia 3, planned in Johor Bahru. When this next-generation region comes online, it will feature Microsoft’s most comprehensive and strategic cloud services, designed to support advanced workloads and evolving customer needs from across the area.

In addition to Indonesia and Malaysia, Microsoft announced in 2024 a significant commitment to enable a cloud and AI-powered future for Thailand.

Indian subcontinent

The India geography already has several live datacenter regions, and this footprint will expand further when the Hyderabad-based India South Central datacenter region launches in 2026. This is part of a US$3 billion investment over two years in India’s cloud and AI infrastructure.

Consider a multi-region approach

Microsoft’s goal is to empower you to build and grow your business with unparalleled performance and availability. One of the best ways to position your organization for growth is to consider how you choose the right Azure regions.

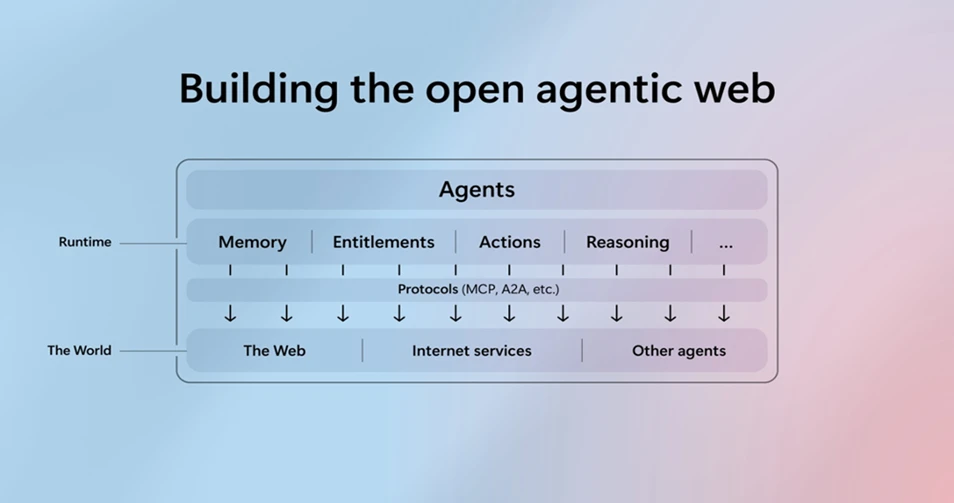

Our infrastructure investments in Asia are driven by the need for greater agility and flexibility in today’s dynamic cloud environment. Organizations can build a more resilient foundation by not locking themselves into a single region, all while optimizing performance. This enables access to Azure services, resources, and capacity across a broader set of geographic areas. A multi-region approach allows businesses to rapidly adapt to changing demands while maintaining high service levels. Our cloud infrastructure supports this agility by distributing services across regions, helping ensure responsiveness and scalability during peak usage. A multi-region cloud architecture that uses any of our Asia-based regions further improves application performance and latency and strengthens the overall resilience and availability of cloud applications, empowering organizations to stay ahead in a fast-evolving digital landscape.
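To make the multi-region idea concrete, here is a minimal Python sketch of one possible approach: route to the healthy region with the lowest latency and fall back to the next-best region when the primary becomes unavailable. It does not call any Azure API. The `RegionStatus` type, the `pick_region` helper, and the latency and health values are all hypothetical, made up purely for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class RegionStatus:
    name: str          # region display name, as used in this post
    latency_ms: float  # hypothetical measured round-trip latency
    healthy: bool      # hypothetical result of a health probe


def pick_region(regions: list[RegionStatus]) -> Optional[RegionStatus]:
    """Return the healthy region with the lowest latency, or None if none is healthy."""
    healthy = [r for r in regions if r.healthy]
    return min(healthy, key=lambda r: r.latency_ms, default=None)


# Illustrative, made-up measurements; not real benchmark data.
regions = [
    RegionStatus("Indonesia Central", latency_ms=18.0, healthy=True),
    RegionStatus("Malaysia West", latency_ms=25.0, healthy=True),
    RegionStatus("Japan West", latency_ms=80.0, healthy=True),
]

primary = pick_region(regions)

# Simulate an outage of the primary region and fail over to the next-best one.
after_outage = [
    RegionStatus(r.name, r.latency_ms, healthy=(r.name != primary.name))
    for r in regions
]
fallback = pick_region(after_outage)

print(f"primary: {primary.name}, fallback: {fallback.name}")
```

In a real deployment the latency and health values would come from monitoring, and traffic routing would typically be handled by a managed load-balancing service rather than application code. The sketch only shows why having more than one region available keeps a choice open when one fails.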

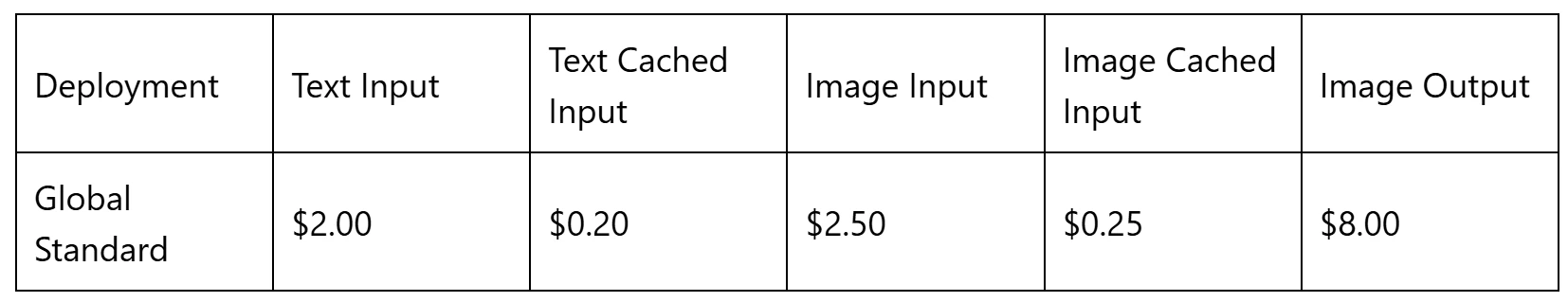

Opportunities for cost optimization

Pricing is a critical factor when selecting the right Azure regions for your organization. Through our significant investments in Asia, Microsoft is now able to offer newer and more cost-effective Azure regions, catering to both small and large organizations. Our newest regions, like Indonesia Central, are designed to provide greater choice and flexibility, enabling businesses to optimize their cloud expenditures while maintaining high performance and availability.
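One way to reason about this trade-off is to treat region selection as a constrained choice: among the regions that meet a latency target, pick the one with the lowest price. The Python sketch below illustrates that idea only. The `RegionQuote` type, the `cheapest_within_budget` helper, the generic region labels, and every price and latency figure are hypothetical placeholders, not actual Azure pricing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RegionQuote:
    name: str            # generic placeholder label, not a real region
    hourly_price: float  # hypothetical hourly cost of the same workload
    latency_ms: float    # hypothetical latency to your users


def cheapest_within_budget(
    quotes: list[RegionQuote], max_latency_ms: float
) -> RegionQuote:
    """Return the lowest-priced region whose latency meets the target."""
    eligible = [q for q in quotes if q.latency_ms <= max_latency_ms]
    if not eligible:
        raise ValueError("no region meets the latency target")
    return min(eligible, key=lambda q: q.hourly_price)


# Made-up figures for illustration only.
quotes = [
    RegionQuote("Region A", hourly_price=1.20, latency_ms=15.0),
    RegionQuote("Region B", hourly_price=0.95, latency_ms=30.0),
    RegionQuote("Region C", hourly_price=0.80, latency_ms=120.0),
]

choice = cheapest_within_budget(quotes, max_latency_ms=50.0)
print(f"selected: {choice.name} at {choice.hourly_price:.2f} per hour")
```

Here the cheapest option overall is excluded because it misses the latency target, so the selection falls to the next cheapest. Real decisions would also weigh data residency, service availability, and compliance requirements.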

Boost your cloud strategy

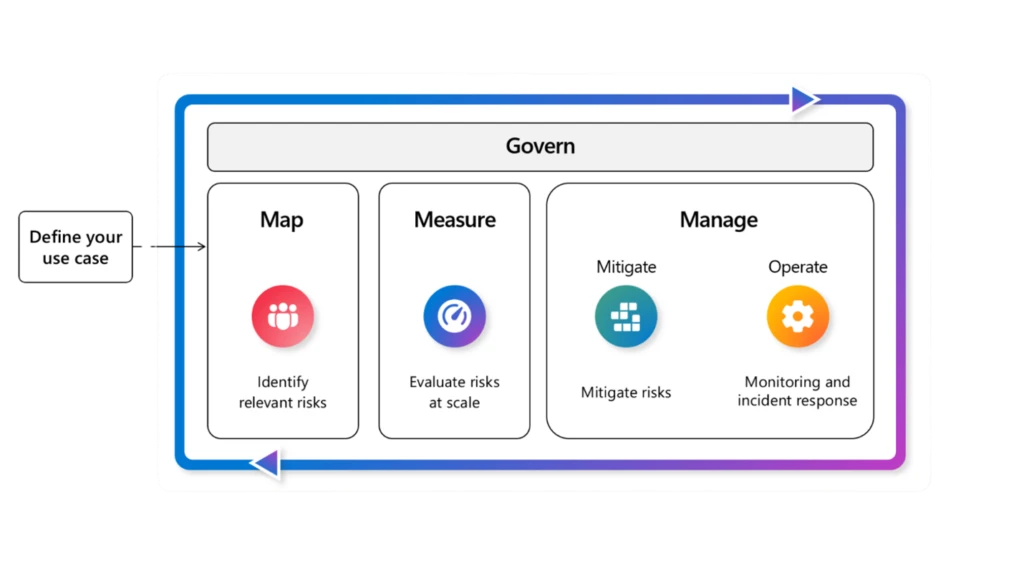

Use the Cloud Adoption Framework to achieve your cloud goals with best practices, documentation, and tools for business and technology strategies.

Use the Well-Architected Framework to optimize workloads with guidance for building reliable, secure, and performant solutions on Azure.

By choosing to deploy services through any of our Azure regions, customers can leverage the diverse and robust infrastructure that Microsoft is developing across Asia. This approach not only offers resilience and flexibility but also paves the way for innovative solutions that drive economic growth and a more connected future.

Learn more about Cloud Adoption Framework

The post Microsoft’s commitment to supporting cloud infrastructure demand in Asia appeared first on Microsoft Azure Blog.

Source: Azure