Before joining Microsoft, I spent years helping organizations build and transform. I’ve seen how technology decisions can shape a business’s future. Whether it’s integrating platforms or ensuring your technology strategy stands the test of time, these choices define how a business operates, innovates, and stays ahead of the competition.

Today, business leaders everywhere are asking:

How do we use AI and agents to drive real outcomes?

Is our data ready for this shift?

What risks or opportunities come with AI and agents?

Are we moving fast enough, or will we fall behind?

This week at Microsoft Ignite 2025, Azure introduces solutions that address those questions with innovations designed for this very inflection point.

It’s not just about adopting the right tools. It’s about having a platform that gives every organization the confidence to embrace an AI-first approach. Azure is built for this moment, with bold ambitions to enable businesses of every size. By unifying AI, data, apps, and infrastructure, we’re delivering intelligence at scale.

If you’re still wondering whether AI can really deliver ROI, don’t take my word for it; see how Kraft Heinz, the Premier League, and Levi Strauss & Co. are finding success by pairing their unique data with an AI-first approach.

With these updates, we’re making it easier to build, run, and scale AI agents that deliver real business outcomes.

TLDR—the Ignite announcement rundown

On the go and want to get right to what’s new and how to learn more? We have you covered. Otherwise, keep reading for a summary of top innovations from the week.

If you want to make AI agents smarter with enterprise context…

Microsoft Fabric IQ (Preview)

Microsoft Foundry IQ (Preview)

Microsoft Foundry new tool catalog (Preview)

If you want a simple, all-in-one agent experience…

Microsoft Agent Factory (Available now)

If you want to modernize and extend your data for AI, wherever it lives…

SAP BDC Connect for Microsoft Fabric (Coming soon)

Azure HorizonDB (Preview)

Azure DocumentDB (Available now)

SQL Server 2025 (Available now)

If you want to operate smarter and securely with AI-powered control…

Foundry Control Plane (Preview)

Azure Copilot with built-in agents (Preview)

Native integration for Microsoft Defender for Cloud and GitHub Advanced Security (Preview)

If you want to build on an AI-ready foundation…

Azure Boost (Available now)

Azure Cobalt 200 (Coming soon)

Your AI and agent factory, expanded: Microsoft Foundry adds Anthropic Claude and Cohere models for ultimate model choice and flexibility

Earlier this year, we brought Anthropic models to Microsoft 365 Copilot, GitHub Copilot, and Copilot Studio. Today, we’re taking the next natural step: Claude Sonnet 4.5, Opus 4.1, and Haiku 4.5 are now part of Microsoft Foundry, advancing our mission to give customers choice across the industry’s leading frontier models—and making Azure the only cloud offering both OpenAI and Anthropic models.

This expansion underscores our commitment to an open, interoperable Microsoft AI ecosystem—bringing Anthropic’s reasoning-first intelligence into the tools, platforms, and workflows organizations depend on every day.

Read more about this announcement

This week, Cohere’s leading models join Foundry’s first-party model lineup, enabling organizations to build high-performance retrieval, classification, and generation workflows at enterprise scale.

With these additions to Foundry’s ecosystem of more than 11,000 models, alongside innovations from OpenAI, xAI, Meta, Mistral AI, Black Forest Labs, and Microsoft Research, developers can build smarter agents that reason, adapt, and integrate seamlessly with their data and applications.

Make AI agents smarter with enterprise context

In the agentic era, context is everything: the most useful agents don’t just reason, they understand your unique business. Microsoft Azure brings enterprise context to the forefront, so you can connect agents to the right data and systems securely, consistently, and at scale. This set of announcements makes that real.

Microsoft Fabric IQ turns your data into unified intelligence

Fabric IQ organizes enterprise data around business concepts—not tables—so decision-makers and AI agents can act in real time. Now in preview, Fabric IQ unifies analytics, time-series, and operational data under a semantic framework.

Because all data resides in OneLake, either natively or via shortcuts and mirroring, organizations can realize these benefits across on-premises, hybrid, and multicloud environments. This speeds up answering new questions and building processes, making Fabric the unified intelligence system for how enterprises see, decide, and operate.

Discover how Fabric IQ can support your business

Introducing Foundry IQ, which enables agents to understand more from your data

Now in preview, Foundry IQ makes it easier for businesses to connect AI agents to the right data, without the usual complexity. Powered by Azure AI Search, it streamlines how agents access and reason over both public and private sources, like SharePoint, Fabric IQ, and the web.

Instead of building custom RAG pipelines, developers get pre-configured knowledge bases and agentic retrieval in a single API that respects user permissions. The outcome is agents that understand more, respond better, and help your apps perform with greater precision and context.

Build and control task forces of agents at cloud scale

Read Asha's blog

Agents, simplified: Microsoft Agent Factory, powered by Azure

This week, we’re introducing Microsoft Agent Factory—a program that brings Work IQ, Fabric IQ, and Foundry IQ together to help organizations build agents with confidence.

With a single metered plan, organizations can use Microsoft Foundry and Copilot Studio to build with IQ. This means you can deploy agents anywhere, including Microsoft 365 Copilot, without upfront licensing or provisioning.

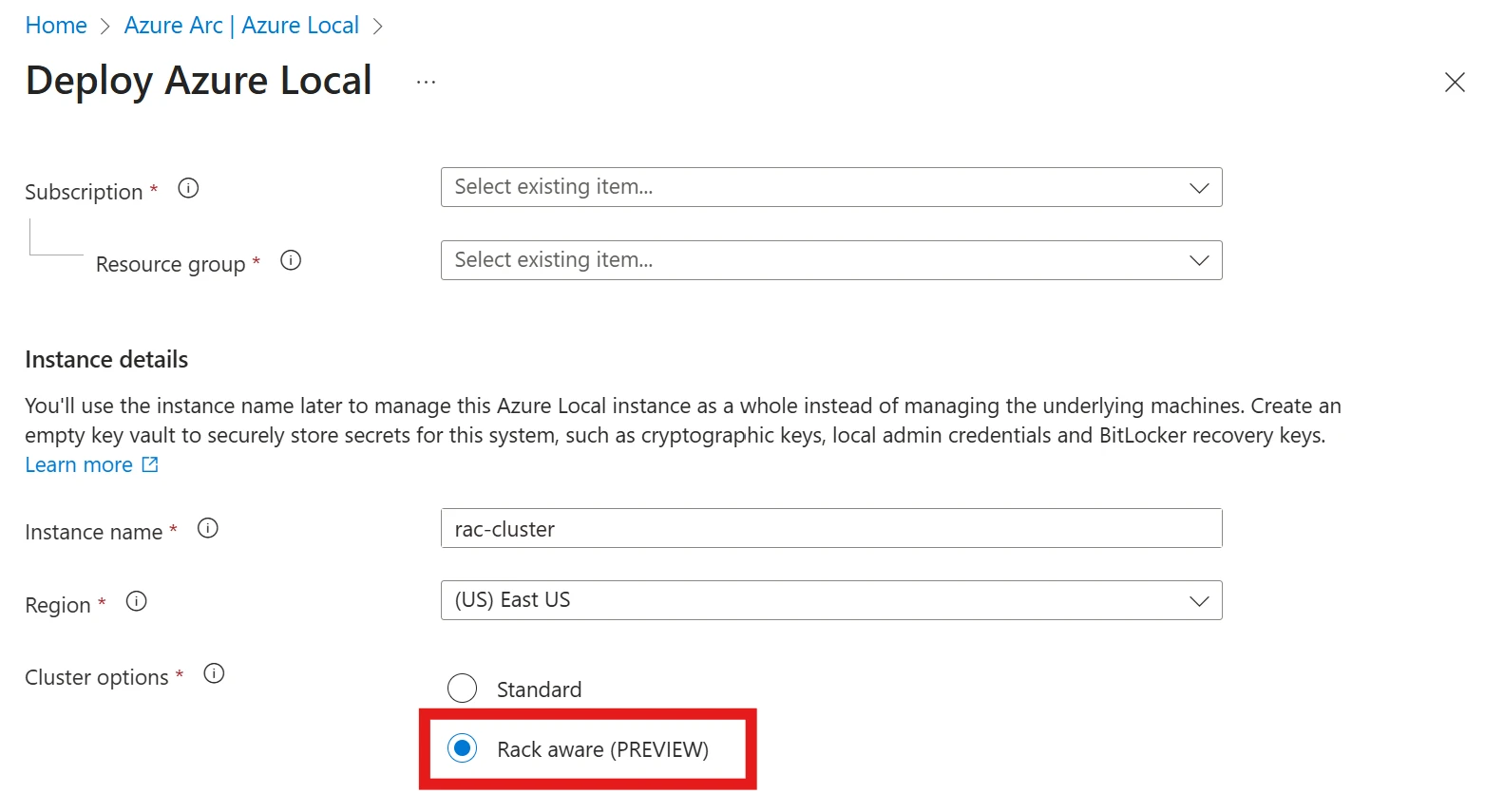

Eligible organizations can also tap into hands-on support from top AI Forward Deployed Engineers and access tailored, role-based training to boost AI fluency across teams.

Confidently build agents with Microsoft Agent Factory

Modernize and extend your data for AI—wherever it lives

Great AI starts with great data. To succeed, organizations need a foundation that’s fast, flexible, and intelligent. This week, we introduced new capabilities to help make that possible.

Introducing Azure HorizonDB, a new fully managed PostgreSQL database service for faster, smarter apps

Now in preview, HorizonDB is a cloud database service built for speed, scale, and resilience. It runs up to three times faster than open-source PostgreSQL and grows to handle demanding storage requirements with up to 15 replicas running on auto-scaling shared storage.

Whether building new AI apps or modernizing core systems, HorizonDB delivers enterprise-grade security and natively integrated AI models to help you scale confidently and create smarter experiences.

Azure DocumentDB offers AI-ready data, open standards, and multicloud deployments

Now generally available, Azure DocumentDB is a fully managed NoSQL service built on open-source tech and designed for hybrid and multicloud flexibility. It supports advanced search and vector embeddings for more accurate results and is compatible with popular open-source MongoDB drivers and tools.

SQL Server 2025 delivers AI innovation to one of the world’s most widely used databases

SQL Server’s decades-long record of innovation continues with the availability of SQL Server 2025. This release helps developers build modern, AI-powered apps using familiar T-SQL, securely and at scale.

With built-in tools for advanced search, near real-time insights via OneLake, and simplified data handling, businesses can finally unlock more value from the data they already have. SQL Server 2025 is a future-ready platform that combines performance, security, and AI to help teams move faster and work smarter.

Start exploring SQL Server 2025

Fabric goes further

SQL database and Cosmos DB in Fabric are also available this week. These databases are natively integrated into Fabric, so you can run transactional and NoSQL workloads side by side in one environment.

Get instant access to trusted data with bi-directional, zero-copy sharing through SAP BDC Connect for Fabric

Fabric now enables zero-copy data sharing with SAP Business Data Cloud, so customers can combine trusted business data with Fabric’s advanced analytics and AI without duplication or added complexity, and get instant access to business-ready insights.

We offer these world-class database options so you can build once and deploy at the edge, as platform as a service (PaaS), or even as software as a service (SaaS). And because our entire portfolio is either Fabric-connected or Fabric-native, Fabric serves as a unified hub for your entire data estate.

Strengthen the databases at the heart of your data estate

Read Arun's blog

Operate smarter and more securely with AI-powered control

We believe trust is the foundation of transformation. In an AI-powered world, businesses need confidence, control, and clarity. Azure provides that with built-in security, governance, and observability, so you can innovate boldly without compromise.

With capabilities that protect your data, keep your operations transparent, and make environments resilient, we announced updates this week to strengthen trust at every layer.

Unified observability helps keep agents secure, compliant, and under your control

One highlight from today’s announcements is the new Foundry Control Plane. It gives teams real-time security, lifecycle management, and visibility across agent platforms. Foundry Control Plane integrates signals from the entire Microsoft Cloud, including Agent 365 and the Microsoft security suite, so builders can optimize performance, apply agent controls, and maintain compliance.

New hosted agents and multi-agent workflows let agents collaborate across frameworks or clouds without sacrificing enterprise-grade visibility, governance, and identity controls. With Entra Agent ID, Defender runtime protection, and Purview data governance, you can scale AI responsibly with guardrails in place.

Azure Copilot: Turning cloud operations into intelligent collaboration

Azure Copilot is a new agentic interface that orchestrates specialized agents across the cloud management lifecycle. It embeds agents directly where you work—chat, console, or command line—for a personalized experience that connects action, context, and governance.

We are introducing new agents that simplify how you run on the cloud—from migration and deployment to operations and optimization—so each action aligns with enterprise policy.

Migration and modernization agents deliver smarter, automated workflows, using AI-powered discovery to handle most of the heavy lifting. This shift moves IT teams and developers beyond repetitive classification work so they can focus on building new apps and agents that drive innovation.

Similarly, the deployment agent streamlines infrastructure planning with guidance rooted in Azure Well-Architected Framework best practices, while the operations and optimization agents accelerate issue resolution, improve resiliency, and uncover cost savings opportunities.

Learn more about these agents in the Azure Copilot blog

Secure code to runtime with AI-infused DevSecOps

Microsoft and GitHub are transforming app security with native integration for Microsoft Defender for Cloud and GitHub Advanced Security. Now in preview, this integration helps protect cloud-native applications across the full app lifecycle, from code to cloud.

This enables developers and security teams to collaborate seamlessly, allowing organizations to stay within the tools they use every day.

Streamline cloud operations and reimagine the datacenter

Read Jeremy's blog

Build on an AI-ready foundation

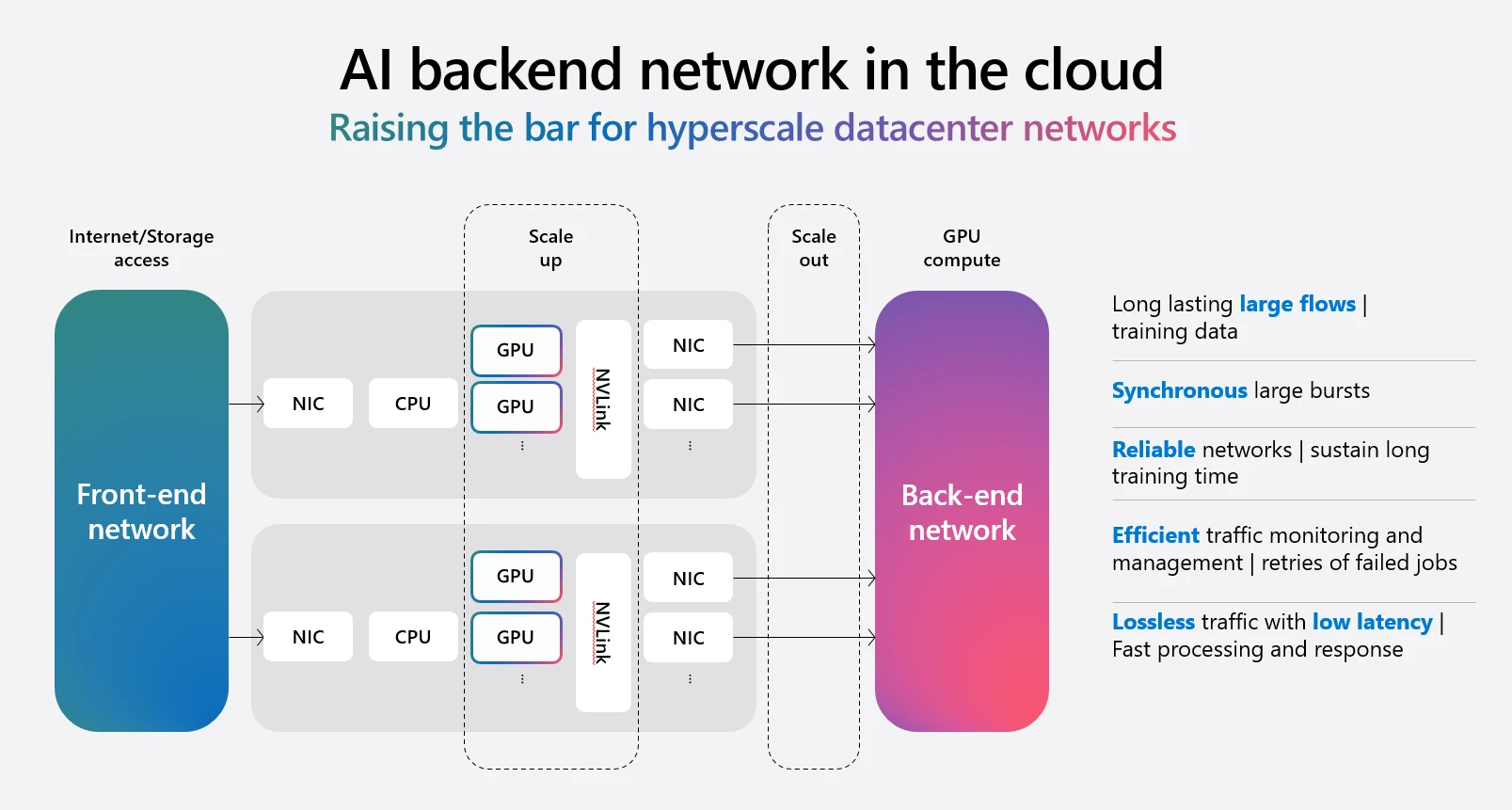

Azure infrastructure is transforming how we deliver intelligence at scale—both for our own services and for customers building the next generation of applications.

At the center of this evolution are new AI datacenters, designed as “AI superfactories,” and silicon innovations that enable Azure to provide unmatched flexibility and performance across every AI scenario.

Azure Boost delivers speed and security for your most demanding workloads

We’re announcing our latest generation of Azure Boost with remote storage throughput of up to 20 GBps, up to 1 million remote storage IOPS, and network bandwidth of up to 400 Gbps. These advancements significantly improve performance for future Azure VM series. Azure Boost is a purpose-built subsystem that offloads virtualization processes from the hypervisor and host operating system, accelerating storage and network-intensive workloads.
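To put those peak figures in perspective, here is a rough back-of-envelope sketch. It assumes the conventional reading of the units (GBps as gigabytes per second for storage, Gbps as gigabits per second for networking), decimal units, and peak rates sustained for the whole transfer. The 1 TB dataset and all function names are hypothetical, chosen only for illustration; real-world throughput depends on VM size, disk configuration, and workload.

```python
# Back-of-envelope arithmetic on the Azure Boost peak figures quoted above.
# Assumptions (not from the announcement): decimal units (1 GB = 10**9 bytes),
# peak rates held constant for the whole transfer, hypothetical 1 TB dataset.

BITS_PER_BYTE = 8


def gigabits_to_gigabytes_per_second(gigabits_per_second: float) -> float:
    """Convert a rate in gigabits/s (Gbps) to gigabytes/s (GBps)."""
    return gigabits_per_second / BITS_PER_BYTE


def transfer_seconds(size_gigabytes: float, rate_gigabytes_per_second: float) -> float:
    """Idealized time to move size_gigabytes at a constant rate."""
    return size_gigabytes / rate_gigabytes_per_second


REMOTE_STORAGE_GIGABYTES_PER_S = 20.0   # "up to 20 GBps"
NETWORK_GIGABITS_PER_S = 400.0          # "up to 400 Gbps"
DATASET_GIGABYTES = 1_000.0             # hypothetical 1 TB dataset

network_gb_per_s = gigabits_to_gigabytes_per_second(NETWORK_GIGABITS_PER_S)
storage_read_s = transfer_seconds(DATASET_GIGABYTES, REMOTE_STORAGE_GIGABYTES_PER_S)
network_send_s = transfer_seconds(DATASET_GIGABYTES, network_gb_per_s)

print(f"Network peak: {network_gb_per_s:.0f} GB/s")
print(f"Read 1 TB from remote storage at peak: {storage_read_s:.0f} s")
print(f"Send 1 TB over the network at peak: {network_send_s:.0f} s")
```

Under these assumptions, the network ceiling in bytes is higher than the remote storage ceiling, so a storage-bound transfer would be limited by the 20 GBps figure rather than by network bandwidth.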

Azure Cobalt 200: Redefining performance for the agentic era

Azure Cobalt 200 is our next-generation Arm-based server processor, designed to deliver efficiency, performance, and security for modern workloads. It’s built to handle AI and data-intensive applications while maintaining strong confidentiality and reliability standards.

By optimizing compute and networking at scale, Cobalt 200 helps you run your most critical workloads more cost-effectively and with greater resilience. It’s infrastructure designed for today’s demands—and ready for what’s next.

See what Azure Cobalt 200 has to offer

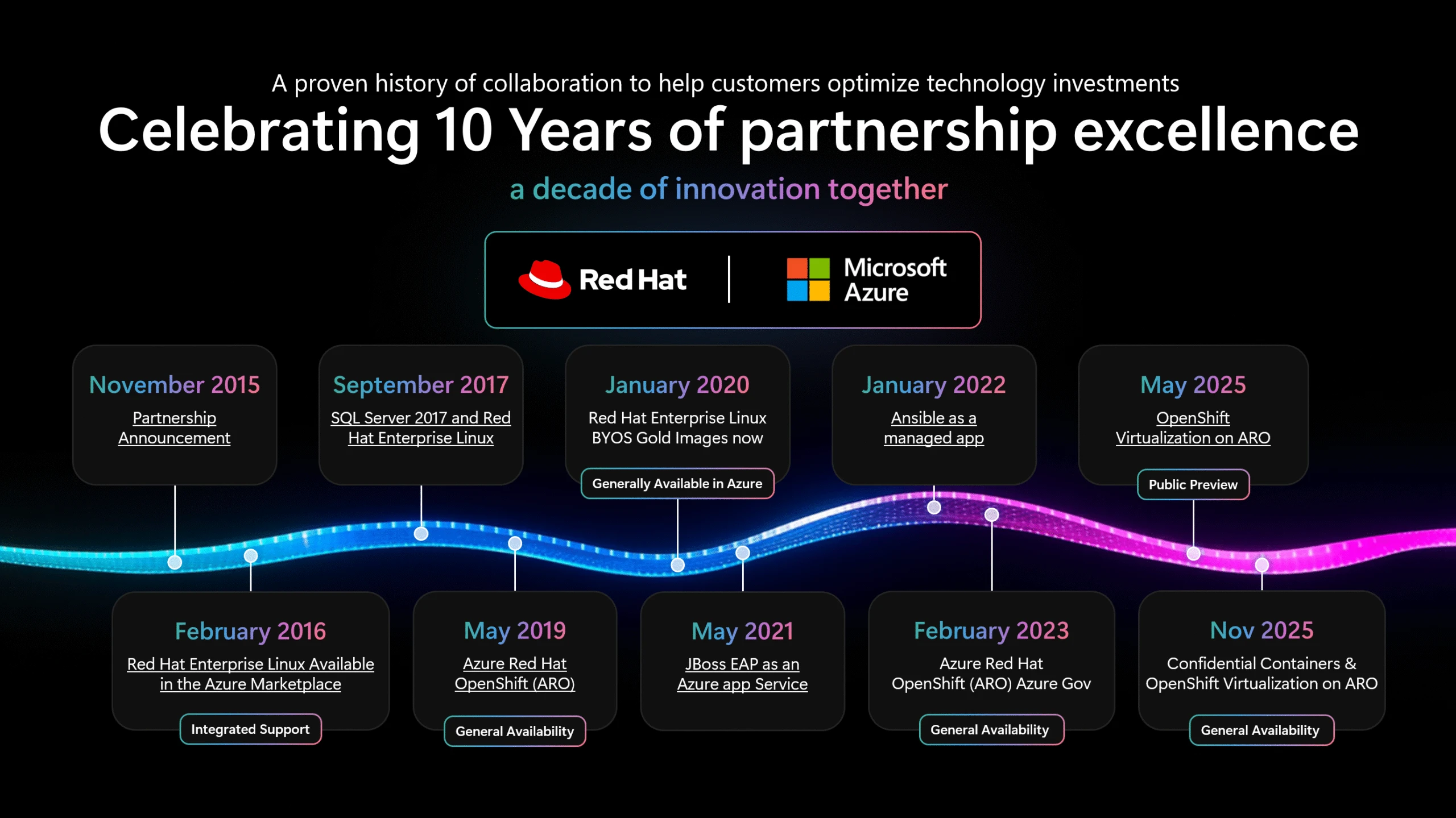

Keeping you at the frontier with continuous innovation

We’re delivering continuous innovation in AI, apps, data, security, and cloud. When you choose Azure, you get an intelligent cloud built on decades of experience and partnerships that push boundaries. And as we’ve just shown this week, the pace of innovation isn’t slowing down anytime soon.

Agentic enterprise, unlocked: Start now on Microsoft Azure

I hope Ignite—and our broader wave of innovation—sparked new ideas for you. The era of the agentic cloud isn’t on the horizon; it’s here right now. Azure brings together AI, data, and cloud capabilities to help you move faster, adapt smarter, and innovate confidently.

I invite you to imagine what’s possible—and consider these questions:

What challenges could you solve with a more connected, intelligent cloud foundation?

What could you build if your data, AI, and cloud worked seamlessly together?

How could your teams work differently with more time to innovate and less to maintain?

How can you stay ahead in a world where change is the only constant?

Want to go deeper into the news? Check out these blogs:

Microsoft Foundry: Scale innovation on a modular, interoperable, secure agent stack by Asha Sharma.

Azure Databases + Microsoft Fabric: Your unified and AI-powered data estate by Arun Ulagaratchagan.

Announcing Azure Copilot agents and AI infrastructure innovations by Jeremy Winter.

Ready to take the next step?

Explore technology methodologies and tools from real-world customer experiences with Azure Essentials.

Check out the latest announcements for software companies.

Visit the Microsoft Marketplace, the trusted source for cloud solutions, AI apps, and agents.

The post Azure at Microsoft Ignite 2025: All the intelligent cloud news explained appeared first on Microsoft Azure Blog.

Source: Azure