Posted by Brian Stevens, Vice President, Google Cloud

As we officially move into the Google Cloud era, Google Cloud Platform (GCP) continues to bring new capabilities to more regions, environments, applications and users than ever before. Our goal remains the same: we want to build the most open cloud for all businesses and make it easy for them to build and run great software.

Today, we’re announcing new products and services to deliver significant value to our customers. We’re also sharing updates to our infrastructure to improve our ability to not only power Google’s own billion-user products, such as Gmail and Android, but also to power businesses around the world.

Delivering Google Cloud Regions for all

We’ve recently joined the ranks of Google’s billion-user products. Google Cloud Platform now serves over one billion end-users through its customers’ products and services.

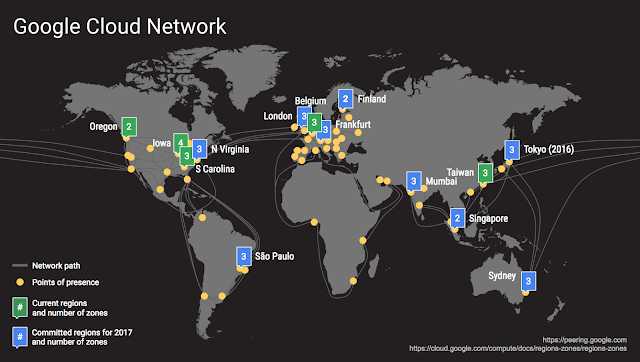

To meet this growing demand, we’ve reached an exciting turning point in our geographic expansion efforts. Today, we announced the locations of eight new Google Cloud Regions — Mumbai, Singapore, Sydney, Northern Virginia, São Paulo, London, Finland and Frankfurt — and there are more regions to be announced next year.

By expanding to new regions, we deliver higher performance to customers. In fact, our recent expansion in Oregon resulted in up to 80% improvement in latency for customers. We look forward to welcoming customers to our new Cloud Regions as they become publicly available throughout 2017.

Embracing the multi-cloud world

Not only do applications running on GCP benefit from state-of-the-art infrastructure, but they also run on the latest and greatest compute platforms. Kubernetes, the container management system that we developed and open-sourced, reached version 1.4 earlier this week, and we’re actively updating Google Container Engine (GKE) to this new version.

GKE customers will be the first to benefit from the latest Kubernetes features, including the ability to monitor cluster add-ons, one-click cluster spin-up, improved security, integration with Cluster Federation and support for the new Google Container-VM image (GCI).

Kubernetes 1.4 improves Cluster Federation to support straightforward deployment across multiple clusters and multiple clouds. With GKE’s support for this feature, customers will be able to build applications that easily span multiple clouds, whether on-premises, on a different public cloud vendor, or a hybrid of both.

We want GCP to be the best place to run your workloads, and Kubernetes is helping customers make the transition. That’s why customers such as Philips Lighting have migrated their most critical workloads to run on GKE.

Accelerating the move to cloud data warehousing and machine learning

Cloud infrastructure exists in the service of applications and data. Data analytics is critical to businesses, and the need to store and analyze data from a growing number of sources has grown exponentially. Data analytics is also the foundation for the next wave in business intelligence: machine learning.

The same principles of data analytics and machine learning apply to large-scale businesses: to derive business intelligence from your data, you need access to multiple data sources and the ability to seamlessly process it. That’s why GKE usage doubles every 90 days and is a natural fit for many businesses. Now, we’re introducing new updates to our data analytics and machine learning portfolio that help address this need:

Google BigQuery, our fully managed data warehouse, has been significantly upgraded to enable widespread adoption of cloud data analytics. BigQuery support for Standard SQL is now generally available, and we’ve added new features that improve compatibility with more data tools than ever and foster deeper collaboration across your organization through simplified query sharing. We’ve also integrated Identity and Access Management (IAM), which allows businesses to fine-tune their security policies. And to make BigQuery accessible to any business, we now offer unlimited flat-rate pricing that pairs unlimited queries with predictable data storage costs.

Cloud Machine Learning is now available to all businesses. Integrated with our data analytics and storage cloud services such as Google BigQuery, Google Cloud Dataflow, and Google Cloud Storage, it helps businesses train high-quality machine learning models on their own data, faster. “Seeing is believing” with machine learning, so we’re rolling out dedicated educational and certification programs to help more customers learn about the benefits of machine learning for their organization and give them the tools to put it into use.

To learn more about how to manage data across all of GCP, check out our new Data Lifecycle on GCP paper.

Introducing a new engagement model for customer support

At Google, we understand that the overall reliability and operational health of a customer’s application is a shared responsibility. Today, we’re announcing a new role on the GCP team: Customer Reliability Engineering (CRE). Designed to deepen our partnership with customers, CRE is composed of Google engineers who integrate with a customer’s operations teams to share the reliability responsibilities for critical cloud applications. This integration represents a new model in which we share and apply our nearly two decades of expertise in cloud computing as an embedded part of a customer’s organization. We’ll have more to share about this soon.

One of the CRE model’s first tests was joining Niantic as they launched Pokémon GO, which scaled to serve millions of users around the world in the span of a few days.

The Google Cloud GKE/Kubernetes team, which supports many of our customers, including Niantic

The public cloud is built on customer trust, and we understand that it’s a significant commitment for a customer to entrust a public cloud vendor with their physical infrastructure. By offering new features to help address customer needs and collaborating with customers to usher in the future with tools like machine learning, we intend to make the public cloud more usable and bring more businesses into the Google Cloud fold. Thanks for joining us as we embark toward this new horizon.

Source: Google Cloud Platform