At Docker we have spent a lot of time discussing runtime security and isolation as a core part of the container architecture. However, that is just one aspect of the total software pipeline. Instead of a one-time flag or setting, we need to approach security as something that occurs at every stage of the application lifecycle. Organizations must apply security as a core part of the software supply chain, where people, code and infrastructure are constantly moving, changing and interacting with each other.

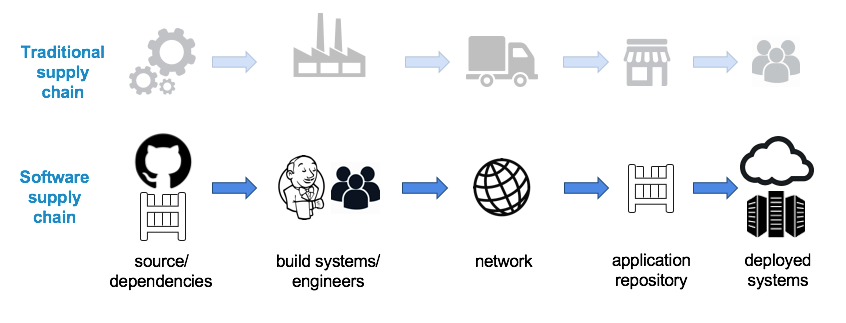

If you consider a physical product like a phone, it’s not enough to think about the security of the end product. Beyond deciding what kind of theft-resistant packaging to use, you might want to know where the materials are sourced from and how they are assembled, packaged and transported. It is also important to ensure that the phone is not tampered with or stolen along the way.

The software supply chain maps almost identically to the supply chain for a physical product. You have to be able to identify and trust the raw materials (code, dependencies, packages), assemble them together, ship them by sea, land, or air (network) to a store (repository) so the item (application) can be sold (deployed) to the end customer.

Securing the software supply chain is also quite similar. You have to:

Identify everything in your pipeline, from people, code and dependencies to infrastructure

Ensure a consistent and quality build process

Protect the product while in storage and transit

Guarantee and validate the final product at delivery against a bill of materials

In this post we will explain how Docker’s security features can be used to provide active and continuous security for a software supply chain.

Identity

The foundation of the entire pipeline is built on identity and access. You fundamentally need to know who has access to which assets and who can run processes against them. The Docker architecture has a distinct identity concept that underpins the strategy for securing your software supply chain: cryptographic keys allow the publisher to sign images to ensure proof-of-origin, authenticity, and provenance for Docker images.

Consistent Builds: Good Input = Good Output

Establishing consistent builds allows you to create a repeatable process and take control of your application dependencies and components, which makes it easier to test for defects and vulnerabilities. When you have a clear understanding of your components, it becomes easier to identify the things that break or are anomalous.

To get consistent builds, you have to ensure you are adding good components:

Evaluate the quality of the dependency, make sure it is the most recent/compatible version and test it with your software

Authenticate that the component comes from a source you expect and was not corrupted or altered in transit

Pin the dependency so that subsequent rebuilds are consistent, which makes it easier to tell whether a defect is caused by a change in your code or in a dependency

Build your image from a trusted, signed base image using Docker Content Trust
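
The authenticate and pin steps above can be sketched in a few lines. This is only an illustration, not how Docker itself resolves dependencies: the pin table, the component name `libexample-1.2.3.tar.gz`, and the helper names are hypothetical, and a SHA-256 digest comparison stands in for whatever verification your package manager provides.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of a component as a hex string."""
    return hashlib.sha256(data).hexdigest()


def verify_pinned(name: str, data: bytes, pins: dict) -> bool:
    """Accept a component only if it is pinned and its digest matches the pin."""
    expected = pins.get(name)
    if expected is None:
        return False  # unpinned components are rejected outright
    # compare_digest avoids leaking how many leading characters matched
    return hmac.compare_digest(sha256_hex(data), expected)


if __name__ == "__main__":
    # Hypothetical component and pin table, for illustration only.
    artifact = b"example component contents"
    pins = {"libexample-1.2.3.tar.gz": sha256_hex(artifact)}

    print(verify_pinned("libexample-1.2.3.tar.gz", artifact, pins))
    print(verify_pinned("libexample-1.2.3.tar.gz", artifact + b"!", pins))
```

Rejecting unpinned components by default is a design choice in this sketch: a rebuild then fails loudly when a new dependency appears, instead of silently pulling it in.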

Application Signing Seals Your Build

Application signing is the step that effectively “seals” the artifact from the build. By signing the images, you ensure that whoever verifies the signature on the receiving side (docker pull) establishes a secure chain with you (the publisher). This relationship assures that the images were not altered, added to, or deleted from while stored in a registry or during transit. Additionally, signing indicates that the publisher “approves” the image you have pulled as good.

Enabling Docker Content Trust on both build machines and the runtime environment sets a policy so that only signed images can be pulled and run on those Docker hosts. Signed images signal to others in the organization that the publisher (builder) declares the image to be good.
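
As a minimal sketch of what “enabling” means on a single host, the snippet below sets the `DOCKER_CONTENT_TRUST` environment variable for a `docker pull` launched from a script. The helper names `content_trust_env` and `pull_signed` are our own for this example, and `pull_signed` assumes the `docker` CLI is installed and on the `PATH`.

```python
import os
import subprocess


def content_trust_env(base_env=None) -> dict:
    """Return a copy of the environment with Docker Content Trust switched on."""
    env = dict(os.environ if base_env is None else base_env)
    env["DOCKER_CONTENT_TRUST"] = "1"
    return env


def pull_signed(image: str) -> int:
    """Run `docker pull` with content trust enabled and return its exit code.

    Assumes the docker CLI is available; with content trust on, the pull
    is expected to fail if the image tag has no valid signature.
    """
    result = subprocess.run(
        ["docker", "pull", image], env=content_trust_env(), check=False
    )
    return result.returncode


if __name__ == "__main__":
    # Only show the variable here; no image is pulled in this demo.
    print(content_trust_env({})["DOCKER_CONTENT_TRUST"])
```

Setting the variable per process, as here, is handy for a build script; for an organization-wide policy you would set it in the environment of every build machine and runtime host.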

Security Scanning and Gating

Your CI system and developers verify that your build artifact works with the enumerated dependencies, that operations on your application have expected behavior in both the success path and failure path, but have they vetted the dependencies for vulnerabilities? Have they vetted subcomponents of the dependencies or bundled system libraries for dependencies? Do they know the licenses for their dependencies? This kind of vetting is almost never done on a regular basis, if at all, since it is a huge overhead on top of already delivering bugfixes and features.

Docker Security Scanning assists in automating the vetting process by scanning the image layers. Because this happens as the image is pushed to the repo, it acts as a last check or final gate before containers are deployed into production. Currently available in Docker Cloud and coming soon to Docker Datacenter, Security Scanning creates a Bill of Materials of all of the image’s layers, including packages and versions. This Bill of Materials is used to continuously monitor against a variety of CVE databases. As a result, scanning is not a one-time event: the system admin or application developer is notified whenever a new vulnerability is reported for an application package that is in use.
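
To make the Bill of Materials idea concrete, here is a small sketch of matching a per-layer package list against an advisory feed. It is not the Docker Security Scanning implementation: the data shapes, the `find_vulnerable` helper, the package names and the advisory IDs are all hypothetical placeholders.

```python
from typing import NamedTuple


class Package(NamedTuple):
    name: str
    version: str


def find_vulnerable(bom: dict, advisories: dict) -> dict:
    """Map each package in the BOM to its known advisory IDs, if any.

    bom:        layer id -> list of Package found in that layer
    advisories: Package  -> list of advisory IDs affecting it
    """
    hits = {}
    for packages in bom.values():
        for pkg in packages:
            ids = advisories.get(pkg)
            if ids:
                hits[pkg] = sorted(ids)
    return hits


if __name__ == "__main__":
    # Hypothetical BOM and advisory feed, for illustration only.
    bom = {
        "layer-1": [Package("examplelib", "1.0.0"), Package("othertool", "2.3")],
        "layer-2": [Package("examplelib", "1.0.0")],
    }
    feed = {}
    print(find_vulnerable(bom, feed))  # nothing known at push time

    # Later a new advisory is published; rescanning the stored BOM
    # flags the image without rebuilding or re-pushing it.
    feed[Package("examplelib", "1.0.0")] = ["EXAMPLE-ADVISORY-1"]
    print(find_vulnerable(bom, feed))
```

Keeping the Bill of Materials around is what makes continuous monitoring cheap: only the advisory feed changes, so the check can be rerun whenever the feed is updated.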

Threshold Signing – Tying it all Together

One of the strongest security guarantees that comes from signing with Docker Content Trust is the ability to have multiple signers participate in the signing process for a container. To understand this, imagine a simple CI process that moves a container image through the following steps:

Automated CI

Docker Security Scanning

Promotion to Staging

Promotion to Production

This simple four-step process can add a signature after each stage has been completed and verify that every stage of the CI/CD process has been followed.

Image passes CI? Add a signature!

Docker Security Scanning says the image is free of vulnerabilities? Add a signature!

Build successfully works in staging? Add a signature!

Verify the image against all 3 signatures and deploy to production

Now before a build can be deployed to the production cluster, it can be cryptographically verified that each stage of the CI/CD process has signed off on an image.
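
The gate described above can be sketched as a check that every required stage has signed the same image digest. This is a simplified model and not Docker Content Trust itself: real image signing relies on asymmetric keys, whereas this example uses HMAC from the Python standard library as a stand-in, and the stage names, keys and helper functions are assumptions made for illustration.

```python
import hashlib
import hmac

# Hypothetical stage names; all of them must sign before production deploy.
REQUIRED_STAGES = ("ci", "security-scan", "staging")


def sign(stage_key: bytes, image_digest: str) -> str:
    """Produce a stage's signature over the image digest (HMAC stand-in)."""
    return hmac.new(stage_key, image_digest.encode(), hashlib.sha256).hexdigest()


def may_deploy(image_digest: str, signatures: dict, stage_keys: dict) -> bool:
    """Return True only if every required stage has a valid signature."""
    for stage in REQUIRED_STAGES:
        sig = signatures.get(stage)
        key = stage_keys.get(stage)
        if sig is None or key is None:
            return False  # a stage was skipped
        if not hmac.compare_digest(sig, sign(key, image_digest)):
            return False  # signature does not match this image
    return True


if __name__ == "__main__":
    keys = {"ci": b"ci-key", "security-scan": b"scan-key", "staging": b"stage-key"}
    digest = "sha256:example-image-digest"  # placeholder, not a real digest

    sigs = {"ci": sign(keys["ci"], digest)}
    print(may_deploy(digest, sigs, keys))  # only CI has signed so far

    sigs["security-scan"] = sign(keys["security-scan"], digest)
    sigs["staging"] = sign(keys["staging"], digest)
    print(may_deploy(digest, sigs, keys))  # all three stages have signed
```

Because each signature covers the image digest, a signature collected for one build cannot be reused to promote a different build.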

Conclusion

The Docker platform provides enterprises the ability to layer in security at each step of the software lifecycle. From establishing trust with their users, to the infrastructure and code, our model gives both freedom and control to the developer and IT teams. From building secure base images to scanning every image to signing every layer, each feature allows IT to layer in a level of trust and guarantee into the application. As applications move through their lifecycle, their security profile is actively managed, updated and gated before they are deployed.

More Resources:

Read the Container Isolation White Paper

ADP hardens enterprise containers with Docker Datacenter

Try Docker Datacenter free for 30 days

Watch this talk from DockerCon 2016

The post Securing the Enterprise Software Supply Chain Using Docker appeared first on Docker Blog.

Source: https://blog.docker.com/feed/