SAN FRANCISCO — Just past 8 a.m. on March 14, police trod quietly through the snow to the double-fronted doors of Karim Baratov’s lavish home in Ancaster, Ontario. The officers passed by the garage where Baratov’s jet-black Mercedes Benz and Aston Martin DBS were parked, two of the only outward indications that the 22-year-old had money to spend. Minutes later, they took the Canadian-Kazakh hacker away into custody — a subdued end to an international cyber drama that involved the highest levels of the US government, Russian spies, a global cybercrime syndicate, and hundreds of millions of unsuspecting Americans.

The baby-faced Baratov is currently awaiting trial in the US on charges that he helped hack into half a billion Yahoo accounts — the largest known hack in history. His co-conspirators are Alexsey Belan, 29, a notorious Russian hacker still at large, and two Russian intelligence officers, Dmitry Aleksandrovich Dokuchaev, 33, and Igor Anatolyevich Sushchin, 43. The case against them is the starkest public example of the ways in which the Russian government works with cybercriminals to achieve its global agenda through cyberwarfare, and why those attacks have proven so difficult for governments around the world to track, let alone prosecute.

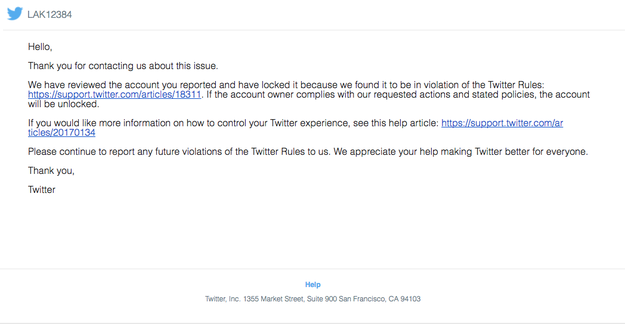

Left to right: Baratov, Dokuchaev, and Sushchin.

Courtesy FBI

Baratov, according to accounts given by US law enforcement, was a hacker for hire. It appears he simply took the wrong job.

“The Yahoo hack is a great example of the US government coming forward and saying we know what you are doing and we can prove it,” said Milan Patel, the former chief technology officer of the FBI’s cyber division and now managing director at the K2 Intelligence cybersecurity firm. “In the past the US and Russia engaged in a lot of tit-for-tat covert operations. But with Russia now, a lot is coming to the forefront and being made public about how they run their cyberactivities.”

That’s not always how it was. In the mid-2000s, FBI agents tried to work with their counterparts in the FSB, Russia's Federal Security Service, to investigate hackers, with regular bilateral meetings featuring US and Russian agents working together in the hope that the two countries could stem the growing tide of online crime. At least that’s how the Americans saw it.

“We would tip them off about a person we were looking for, and they would mysteriously disappear, only to appear later on working for the Russian government,” Patel said. “We basically helped the FSB identify talent and recruit by telling them who we were after.”

The arrest of Baratov and his co-conspirators signals a broader US government crackdown on Russian cybercriminals. For years, cybersecurity researchers and US authorities have traced the ties between cybercriminals and the Russian state, including how malware first developed for criminal enterprises has made its way into state-sponsored cyberattacks on Russia’s neighbors, and how botnet armies created by hackers have been repurposed to launch attacks on Russian targets. Now, they appear ready to strike. Earlier this month, Spanish authorities acting on behalf of the US arrested Pyotr Levashov, long known to authorities as one of the world’s most prolific spam kingpins. Five months ago, the US named a number of well-known Russian hackers as being behind the hacks on the Democratic National Committee, which they say were aimed at influencing the US elections. For those following the murky dealings of the world’s top hackers, the names did not come as a surprise. What was new was the willingness of US officials to publicly name the hackers, and to aggressively pursue Russian cybercriminals who aid Russia’s increasingly aggressive strides into cyberwarfare.

Three Russian hackers told BuzzFeed News over the last month that there was “panic” about how far the arrests would go, and for how long hackers would be pursued by US authorities. US security officials told BuzzFeed News that they would do well to be scared, as “the gloves were coming off” with Russian hackers.

“We’ve reached a boiling point with Russia. They are the closest competitor to the US when it comes to cyberespionage and cyberattacks,” Patel said. “But Russia is playing with different rules — or maybe just without rules.”

Erik Carter for BuzzFeed News

Ask Americans to describe a typical Russian hacker who targets the US and they will likely describe a scruffy Russian teenager in a dimly lit basement, or a chiseled military figure in a warehouse-like room filled with hundreds of hackers, pounding away at their keyboards as they plot to take down the US. The truth is that Russian cyber operations are far more complex than either of those scenarios, with the Russian state relying on a network of hackers it hires within its military and intelligence divisions, as well as cybercriminal networks and hackers for hire it can recruit or co-opt as it needs.

“It’s a multilayered system, and it is very flexible. That’s what makes it so hard to track,” said one FBI agent who currently works within the bureau’s cyber division. He asked to speak off the record so that he could discuss open cases with BuzzFeed News. “Let’s say, for instance that Russian intelligence decide they want to hack into eBay to try and find information about a certain person. They might do that through an existing team they have in place, or they might go to a hacker, who has already infected a computer they want compromised and tell him to give them access or else … or they might just pay a guy who has previously hacked eBay to do it for them again.”

That flexibility makes it very difficult for the FBI, or any other law enforcement agency, to track what is being hacked, and why, the FBI agent said.

“They will use whatever method they need to use to get in, and they have no lines between criminals who are hacking for profit and those who are hacking for the government,” he said. “They might be going into eBay to steal credit cards, or they might be doing it as part of a covert op to target a US member of Congress. They might be doing both, really. It makes it hard to know when a hack is a matter of national security and when it is not.”

The hack on Yahoo that compromised the information of more than 500 million people lays out the complex relationship between the hackers and their targets. The accounts were hacked in 2014, with Yahoo only discovering the compromised accounts in September 2016. Just a few months later, Yahoo announced it had discovered a second, earlier breach, which had affected an additional 500 million people in 2013. Together, the hacks cost the company roughly $350 million, as users fled from the platform amid security concerns. It was, cybersecurity experts said, a death blow for Yahoo.

A spokesman for Yahoo did not answer a request for comment from BuzzFeed News. In a public statement published soon after the indictment was issued, Yahoo wrote: “The indictment unequivocally shows the attacks on Yahoo were state-sponsored. We are deeply grateful to the FBI for investigating these crimes and the DOJ for bringing charges against those responsible.”

For weeks, cybersecurity researchers investigating the hacks believed they were looking at a case of corporate espionage. But as the scope of the breach was discovered, researchers began to fear that an enemy of the US was compiling a massive database of all US nationals, complete with personal details and email accounts they could mine for vulnerable information. The indictments issued last month against Baratov, Belan, and the FSB officers revealed that the group had breached Yahoo looking for both political targets and financial targets. The hundreds of millions of other people who had been caught up in the breach were just collateral damage.

“The guys who did this to Yahoo, they were criminals. They could have turned around and sold the entire database to the highest bidder,” the FBI agent said. “We are lucky they didn’t.”

Enough is known about the four men to sketch a rough timeline of how they came together to carry out the hack. Dokuchaev was once known in hacker circles as “Forb,” and he spoke openly about hiring out his services until he was recruited into government work, as the Russian newspaper RBC has reported. At the FSB, Dokuchaev was partnered with Sushchin, and the two recruited Belan, a Latvian-born hacker who had been on a list of the FBI’s most wanted since 2012.

“This is the way it goes: They trap one hacker and then they get him to trap his friends,” said one Russian hacker, who agreed to speak to BuzzFeed News via an encrypted app on condition of anonymity. The hacker, who recently served time in a Russian prison and had fled the country once he was released, said the “pressure was intense” to do work on behalf of Russian intelligence officers. “They press on you. It’s not, like, a nice request. It’s a knock on your door and maybe a knock on your ass. If they can’t threaten you they threaten your family.”

Amedeo DiCarlo, lawyer for Karim Baratov, arrives at the courthouse in a chauffeured Rolls-Royce in Hamilton, Ontario, Canada, on Wednesday, April 5.

Robert Gillies / AP

It’s unclear how the men were connected to Baratov, who immigrated to Canada from Kazakhstan with his family in 2007. Investigators say Baratov was a hacker for hire. In a July 14, 2016, post on his Facebook page, Baratov wrote that he first discovered how profitable hacking could be when he was expelled from his high school for “threatening to kill my ex-friend as a joke.” The time off school “allowed me to work on my online projects 24/7, and really move my businesses to the next level.” The post, which included photos of a BMW, Audi, and Lamborghini, claims he made “triple and even quadruple the normal amount” of income. He ended the post with “Taking shortcuts doesn’t mean shortcutting the end result.”

Once the group had gained access to Yahoo, its targets included an economic development minister of a country bordering Russia, an investigative reporter who worked for Russian newspaper Kommersant, and a managing director of a US private equity firm, court documents show. FBI investigators believe that in addition to searching for the political targets requested by the FSB, Belan also used the Yahoo database to line his own pockets by searching for credit card information and devising various schemes to target Yahoo users. In November 2014, he began tampering with the Yahoo database so that anyone interested in erectile dysfunction treatments was redirected to his own online pharmacy store, from which he got a commission for driving traffic to the site.

“When you look at this case, you realize it has national security and criminal elements. It doesn’t fit neatly into one box or the other,” the FBI agent involved in the case said.

Patel said that the FBI often had difficulty distinguishing between cyber cases that were criminal in nature, versus those which were politically motivated, or had ties to the Russian state. “The government is making an effort to bridge the gap between investigations that involve classified national security issues, and those which are criminal because those worlds aren’t separate anymore,” he said, explaining that departments were trying to form more joint task forces and share classified information when possible.

It’s unclear who within the FSB was responsible for the group, or if their orders ultimately came from another arm of Russia’s government. In December 2016, Dokuchaev was arrested in Russia and accused of treason. His arrest appeared to be part of a roundup of Russian military and cybersecurity figures, though little information has emerged since their arrests.

Andrei Soldatov, a Russian investigative journalist and co-author of The Red Web, a book about the Kremlin’s online activities, said that while the Russian government’s tactic of outsourcing cyber operations to various groups helps it distance itself from attacks (and ultimately gives it deniability), it also leaves the government vulnerable to hackers running amok.

“Hackers are not people who are traditionally easy to control,” said Soldatov. “They might disobey you sometimes.”

When asked why they first started hacking, many Russian hackers say you’ve asked them to solve the question of which came first, the chicken or the egg.

“I hacked because I wanted to get online, and then I was online because I was hacking,” said one Russian hacker, who considers himself a veteran of the Russian hacking scene due to his early involvement in credit card schemes in the 1990s. He agreed to speak with BuzzFeed News on condition of anonymity, as he was concerned for his own safety and that of his family. “In the ’90s you could only afford the internet in Russia if you were rich, or a hacker.”

Russians visit a cybercafé on July 25, 1997, in Moscow.

Andres Hernandez / Getty Images

The internet came to Russia after the fall of the Soviet Union. A devastated economy and uncertain political times meant that few had access to the internet, which could cost hundreds of dollars to surf for just a few hours. The Russian hacker said he and his friends got involved in early credit card schemes as a way of paying for internet use, which they then used to discover more about burgeoning online crime.

“We were baby hackers. Nobody knew what was possible,” he said. “But when the internet came to Russia, so did the hackers.”

Police initially ignored cybercriminals, and a de facto rule came into effect that as long as the hackers were targeting people and institutions outside of Russia, they would be left alone by the state.

Source: “Inside The Hunt For Russia’s Hackers,” BuzzFeed