Introducing Azure Storage Discovery: Transform data management with storage insights

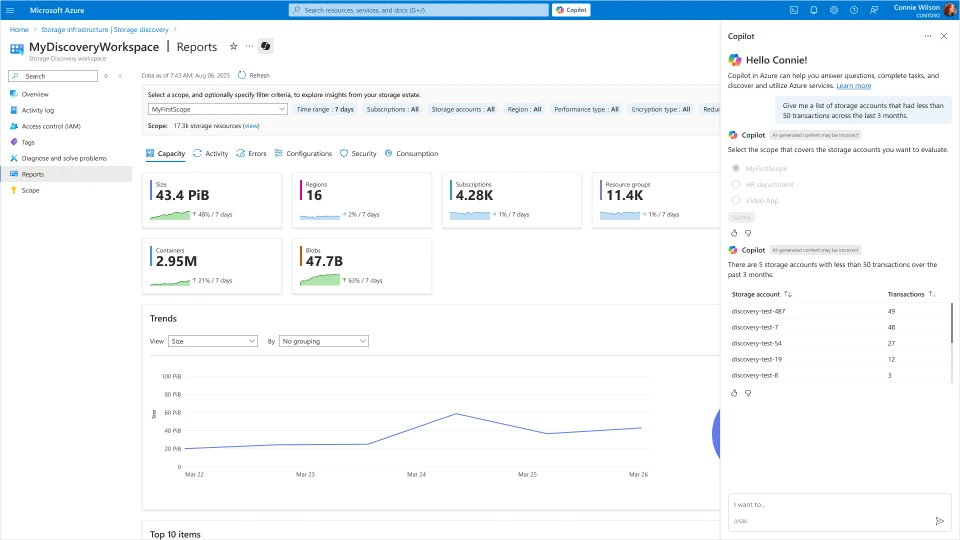

We are excited to announce the public preview of Azure Storage Discovery, a fully managed service that gives you enterprise-wide visibility into your Azure Blob Storage data estate. It provides a single pane of glass to understand and analyze how your data estate has evolved over time, optimize costs, enhance security, and drive operational efficiency. Azure Storage Discovery integrates with Azure Copilot, enabling you to use natural language to unlock insights and accelerate decision-making without using a query language.

As your organization expands its digital footprint in the cloud, managing vast and globally distributed datasets across various business units and workloads becomes increasingly challenging. Insights aggregated across the entire Azure Blob Storage data estate can simplify the detection of outliers, enable long-term trend analysis, and support deep dives into specific resources using filters and pivots. Currently, customers rely on disparate tools and PowerShell scripts to generate, maintain and view such insights. This requires constant development, deployment, and management of infrastructure at scale. Azure Storage Discovery automates and scales this process by aggregating insights across all the subscriptions in your Microsoft Entra tenant and delivering them to you directly within the Azure portal.

Whether you’re a cloud architect, storage administrator, or data governance lead, Azure Storage Discovery helps you quickly answer key questions about your enterprise data estate in Azure Blob Storage:

How much data do we store across all our storage accounts?

Which regions are experiencing the highest growth?

Can I reduce our costs by finding data that is not being frequently used?

Are our storage configurations aligned with security and compliance best practices?

With Azure Storage Discovery, you can now explore such insights—and many more—with just a few clicks and with a Copilot by your side.

From insight to action with Azure Storage Discovery

Azure Storage Discovery simplifies the process of uncovering and analyzing insights from thousands of storage accounts, transforming complexity into clarity with just a few clicks.

Some of the key capabilities are:

Tap into Azure Copilot to get answers to the most critical storage questions for your business, without needing to learn a new query language or writing a single line of code. You can use Copilot to go beyond the pre-built reports and bring together insights across capacity, activity, errors and configurations.

Gain advanced storage insights that help you analyze how the data estate in Azure Blob Storage is growing, identify opportunities for cost optimization, discover data that is under-utilized, pinpoint workloads that could be getting throttled and find ways to strengthen the security of your storage accounts. These insights are powered by metrics related to storage capacity (object size and object count), activity on the data estate (transactions, ingress, egress), aggregation of transaction errors and detailed configurations for data protection, cost optimization and security.

Interactive reports in the Azure portal make it simple to analyze trends over time, drill into top storage accounts, and instantly navigate to the specific resources represented in each chart. The reports can be filtered to focus on specific parts of the data estate based on storage account configurations like regions, redundancy, performance type, encryption type, and others.

Organization-wide visibility with flexible scoping to gather insights for multiple business groups or workloads. Analyze up to 1 million storage accounts spread across different subscriptions, resource groups and regions within a single workspace. The ability to drill down and filter data allows you to quickly obtain actionable insights for optimizing your data estate.

Fully managed service available right in the Azure portal, with no additional infrastructure deployment or impact on business-critical workloads.

Up to 30 days of historical data will automatically be added within hours of deploying Azure Storage Discovery and all insights will be retained for up to 18 months.
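As a rough illustration of the capacity insights described above, the following minimal Python sketch totals the most recent size per region and ranks accounts by growth over a window. It is not the service's API: the `CapacitySample` type, the account names, and all values are hypothetical, and Storage Discovery performs this kind of aggregation for you in its reports.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class CapacitySample:
    """One hypothetical capacity reading for a storage account."""
    account: str
    region: str
    day: int         # day index within the analysis window
    size_gib: float  # total object size on that day


def growth_by_account(samples):
    """Return {account: latest size minus earliest size}."""
    first, last = {}, {}
    for s in sorted(samples, key=lambda s: s.day):
        first.setdefault(s.account, s.size_gib)  # keeps the earliest reading
        last[s.account] = s.size_gib             # ends as the latest reading
    return {a: last[a] - first[a] for a in first}


def latest_size_by_region(samples):
    """Sum each account's most recent size, grouped by region."""
    latest = {}
    for s in sorted(samples, key=lambda s: s.day):
        latest[s.account] = s
    totals = defaultdict(float)
    for s in latest.values():
        totals[s.region] += s.size_gib
    return dict(totals)


def top_growing(samples, n=3):
    """Return the n accounts with the largest growth, biggest first."""
    growth = growth_by_account(samples)
    return sorted(growth.items(), key=lambda kv: kv[1], reverse=True)[:n]


# Hypothetical sample data: two readings per account, 30 days apart.
SAMPLES = [
    CapacitySample("logs01", "westeurope", 0, 100.0),
    CapacitySample("logs01", "westeurope", 30, 180.0),
    CapacitySample("media02", "eastus", 0, 500.0),
    CapacitySample("media02", "eastus", 30, 520.0),
    CapacitySample("backup03", "westeurope", 0, 50.0),
    CapacitySample("backup03", "westeurope", 30, 50.0),
]

if __name__ == "__main__":
    print(latest_size_by_region(SAMPLES))
    print(top_growing(SAMPLES, n=2))
```

In this made-up data set, `logs01` is the fastest-growing account even though `media02` is larger, which is the kind of outlier the growth reports are meant to surface.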

Customer stories

Several customers have already started exploring Azure Storage Discovery during the preview to analyze their enterprise Azure Blob Storage data estate. Here are a few customers who found immediate value during the preview.

Getting a 360-degree view of the data estate in Azure Blob Storage

Tesco, one of the world’s largest and most innovative retailers, has been leveraging Storage Discovery in preview to gain an “effortless 360 View” of its data estate in Azure Blob Storage. To boost agility in development, the cloud deployment at Tesco is operated in a highly democratized manner, giving departments and teams autonomy over their subscriptions and storage accounts. However, to manage their cloud spend, ensure their deployment is configured correctly, and optimize their data estate, each team needs detailed insights in a timely manner. The Cloud Platform Engineering (CPE) team works with each team, providing centralized data for cost analysis, security, and operational reporting. Currently, gathering and reporting on these insights for each team is a highly manual and operationally challenging task. As early adopters, they have been using Azure Storage Discovery to provide a centralized, tenant-wide dashboard that serves as a “single-pane-of-glass” for key metrics and baselines. This helps them reduce the resources and time associated with answering simple questions such as “how much data do we have, and where?” or “what are our baseline trends?”

As our data estate in Azure Storage continues to grow, it has become time consuming to gather the insights required to drive decisions around ‘How’ and ‘What’ we do—especially at the pace which is often demanded by stakeholders. Today, a lot of this is done using PowerShell scripts which even with parallelism, take a significant time to run, due to our overall scale. Anything which reduces the time it takes me to gather valuable insights is super valuable. On the other side, if I were to put my Ops hat on, the data presented is compelling for conversations with application teams; allowing us to focus on what really matters and addressing our top consumers, as opposed to being ‘snowed in’ under a mountain of data.

—Rhyan Waine, Lead Engineer, Cloud Platform Engineering, Tesco

Manage budget by identifying Storage Accounts that are growing rapidly

Willis Towers Watson (WTW) is at the forefront of using generative AI to enhance their offering for Human Resources and Insurance services while also balancing their costs. With Azure Storage Discovery, the team was able to quickly identify storage accounts where data was growing rapidly and increasing costs. With the knowledge of which storage accounts to focus on, they were able to identify usage patterns, roll out optimizations and control their costs.

As soon as my team started using Storage Discovery, they were immediately impressed by the insights it provided. Their reaction was, ‘Great—let’s dive in and see what we can uncover.’ Very quickly, they identified several storage accounts that were growing at an unusual rate. With that visibility, we were able to zero in on those Storage Accounts. We also discovered data that hadn’t been accessed in a long time, so we implemented automatic cleanups using Blob Lifecycle Management to efficiently manage and delete unused data.

—Darren Gipson, Lead DevOps Engineer, Willis Towers Watson

How Storage Discovery works

To get started with Azure Storage Discovery, follow these two simple steps: first, configure a Discovery workspace, which contains the definition of the resource, and then define the Scopes that represent your business groups or workloads. Once these steps are completed, Azure Storage Discovery will start aggregating the relevant insights and make them available to you in detailed dashboards on the Reports page.

Deploying a Discovery workspace enables you to select which part of your data estate in Azure Blob Storage you want to analyze. You can do this by selecting all the subscriptions and resource groups of interest within your Microsoft Entra tenant. Upon successful verification of your access credentials, Azure Storage Discovery will advance to the next step.

Once the workspace is configured, you can create up to 5 scopes, each representing a business group, a workload, or any other logical grouping of storage accounts that has business value to you. You define a scope by selecting Azure Resource Manager (ARM) resource tags that were previously applied to your storage accounts.

After the deployment is successful, Azure Storage Discovery provides reports right within the Azure portal with no additional setup.
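The workspace and scope model described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `StorageAccount`, `Scope`, and `Workspace` classes are hypothetical, and the rule that an account must carry every selected tag to fall within a scope is an assumption, not documented service behavior. The limit of 5 scopes comes from the text above.

```python
from dataclasses import dataclass, field

MAX_SCOPES = 5  # the post states up to 5 scopes per workspace


@dataclass
class StorageAccount:
    """Hypothetical stand-in for a storage account and its ARM tags."""
    name: str
    subscription: str
    tags: dict = field(default_factory=dict)


@dataclass
class Scope:
    """A logical grouping selected by ARM resource tags."""
    name: str
    required_tags: dict  # an empty dict matches every account

    def matches(self, account):
        # Assumption: an account must carry every selected tag/value pair.
        return all(account.tags.get(k) == v
                   for k, v in self.required_tags.items())


@dataclass
class Workspace:
    """The subscriptions to analyze plus the scopes defined over them."""
    subscriptions: set
    scopes: list = field(default_factory=list)

    def add_scope(self, scope):
        if len(self.scopes) >= MAX_SCOPES:
            raise ValueError(f"a workspace holds at most {MAX_SCOPES} scopes")
        self.scopes.append(scope)

    def accounts_in_scope(self, scope_name, accounts):
        """Names of accounts inside the workspace that match the scope."""
        scope = next(s for s in self.scopes if s.name == scope_name)
        return [a.name for a in accounts
                if a.subscription in self.subscriptions and scope.matches(a)]


# Hypothetical accounts; "sub-c" is deliberately outside the workspace.
ACCOUNTS = [
    StorageAccount("fin01", "sub-a", {"dept": "finance"}),
    StorageAccount("fin02", "sub-c", {"dept": "finance"}),
    StorageAccount("web01", "sub-b", {"dept": "web"}),
]

if __name__ == "__main__":
    ws = Workspace(subscriptions={"sub-a", "sub-b"})
    ws.add_scope(Scope("finance", {"dept": "finance"}))
    print(ws.accounts_in_scope("finance", ACCOUNTS))
```

The sketch shows why consistent tagging matters before you define scopes: `fin02` carries the right tag but is excluded because its subscription is not part of the workspace.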

Pricing and availability

Storage Discovery is available in select Azure regions during public preview. The service offers two pricing plans. The Free plan includes insights related to capacity and configurations, retained for up to 15 days. The Standard plan also includes advanced insights related to activity, errors, and security configurations, retained for up to 18 months so you can analyze annual trends and cycles in your business workloads. Pricing is based on the number of storage accounts and objects analyzed, with tiered rates to support all sizes of data estates in Azure Blob Storage.

Both the Free and Standard pricing plans will be offered at no cost until September 30, 2025. Learn more about pricing in the Azure Storage Discovery documentation.
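To make the difference between the two retention windows concrete, here is a small Python sketch that computes the earliest date for which insights could still be available under each plan. The `PLANS` table only restates the figures from this post. The helper names are hypothetical, and because retention is stated as "up to" a limit, the result is a bound rather than a guarantee.

```python
from datetime import date, timedelta

# Plan details as stated in this post; the structure itself is illustrative.
PLANS = {
    "Free": {
        "insights": {"capacity", "configurations"},
        "retention": ("days", 15),
    },
    "Standard": {
        "insights": {"capacity", "configurations", "activity",
                     "errors", "security configurations"},
        "retention": ("months", 18),
    },
}


def months_before(d, months):
    """Return the date `months` calendar months before `d`.

    The day is clamped to the last day of the target month, so
    31 August minus 18 months lands on the last day of February.
    """
    total = d.year * 12 + (d.month - 1) - months
    year, month_index = divmod(total, 12)
    month = month_index + 1
    # First day of the following month, used to find this month's length.
    next_first = date(year + (month == 12), month % 12 + 1, 1)
    last_day = (next_first - timedelta(days=1)).day
    return date(year, month, min(d.day, last_day))


def earliest_retained(plan, today):
    """Oldest date still inside the plan's maximum retention window."""
    unit, amount = PLANS[plan]["retention"]
    if unit == "days":
        return today - timedelta(days=amount)
    return months_before(today, amount)


if __name__ == "__main__":
    today = date(2025, 8, 31)
    for plan in PLANS:
        print(plan, earliest_retained(plan, today))
```

With the Standard plan's window, a full year of history plus the preceding six months stays available, which is what makes year-over-year comparisons possible.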

Get started with Azure Storage Discovery

You can get started using Azure Storage Discovery to unlock the full potential of your storage within minutes. We invite you to preview Azure Storage Discovery for data management of your object storage. To get started, refer to the quick start guide to configure your first workspace. To learn more, check out the documentation.

We’d love to hear your feedback. What insights are most valuable to you? What would make Storage Discovery more compelling for your business? Let us know at StorageDiscoveryFeedback@service.microsoft.com.

The post Introducing Azure Storage Discovery: Transform data management with storage insights appeared first on Microsoft Azure Blog.

Source: Azure