Testing with Telepresence and Docker

Ever found yourself wishing for a way to synchronize local changes with a remote Kubernetes environment? There’s a Docker extension for that! Read on to learn more about how Telepresence partners with Docker Desktop to help you run integration tests quickly and where to get started.

A version of this article was first published on Ambassador’s blog.

Run integration tests locally with the Telepresence Extension on Docker Desktop

Testing your microservices-based application becomes difficult when it can no longer be run locally due to resource requirements. Moving to the cloud for testing is a no-brainer, but how do you synchronize your local changes against your remote Kubernetes environment?

Run integration tests locally instead of waiting on remote deployments with Telepresence, now available as an Extension on Docker Desktop. By using Telepresence with Docker, you get flexible remote development environments that work with your Docker toolchain so you can code and test faster for Kubernetes-based apps.

Install Telepresence for Docker through these quick steps:

Download Docker Desktop.

Open Docker Desktop.

In the Docker Dashboard, click “Add Extensions” in the left navigation bar.

In the Extensions Marketplace, search for the Ambassador Telepresence extension.

Click install.

Connect to Ambassador Cloud through the Telepresence extension:

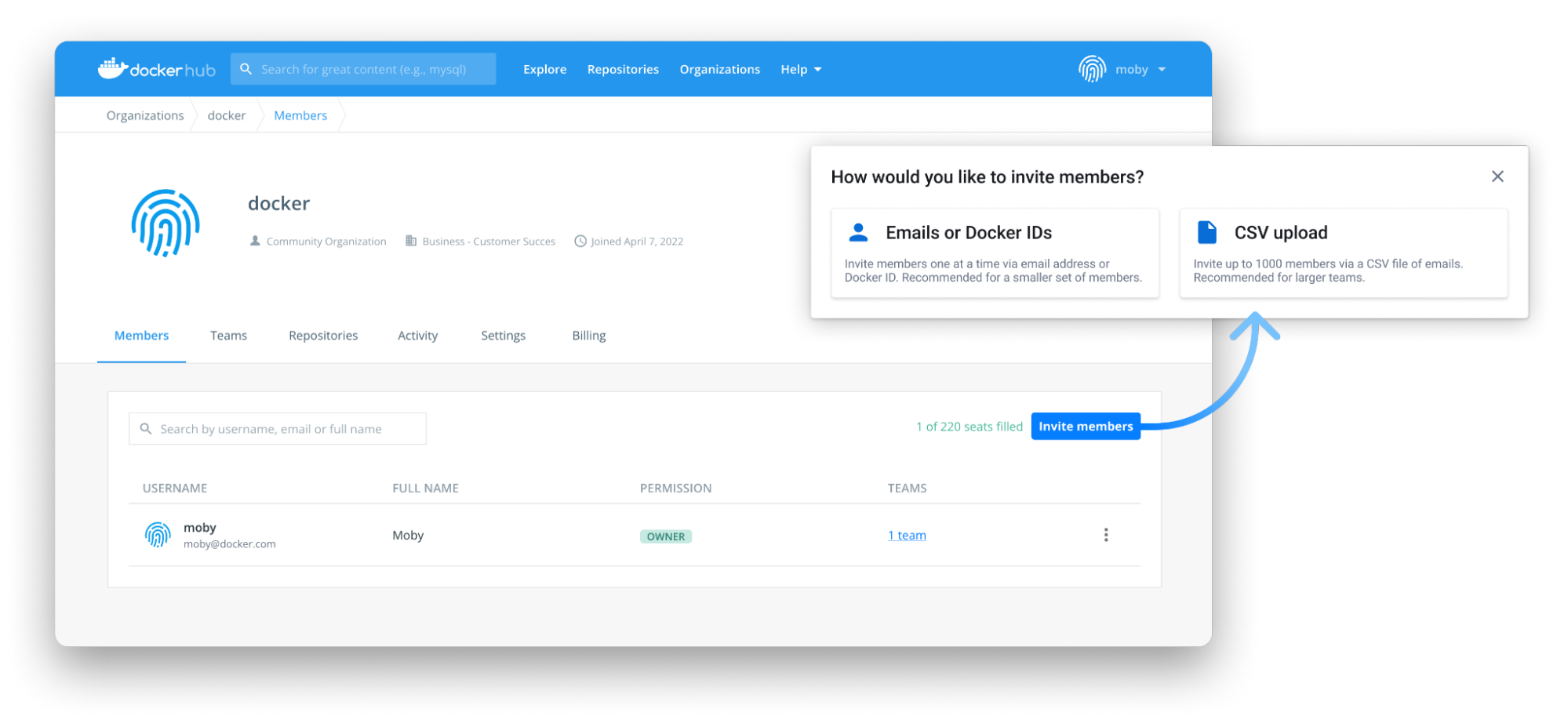

After you install the Telepresence extension in Docker Desktop, you need to generate an API key to connect the Telepresence extension to Ambassador Cloud.

Click the Telepresence extension in Docker Desktop, then click Get Started.

Click the Get API Key button to open Ambassador Cloud in a browser window.

Sign in with your Google, GitHub, or GitLab account. Ambassador Cloud opens to your profile and displays the API key.

Copy the API key and paste it into the API key field in the Docker Dashboard.

Connect to your cluster in Docker Desktop:

Select the desired cluster from the dropdown menu and click Next. This cluster is now set to kubectl’s current context.

Click Connect to [your cluster]. Your cluster is connected, and you can now create intercepts.
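If you want to confirm from a terminal which cluster the extension selected, you can ask kubectl for its current context. The following is a minimal sketch; the helper name show_current_context is made up for illustration and is not part of Telepresence or Docker Desktop.

```shell
# show_current_context is a hypothetical helper name used for illustration.
# It prints the context that kubectl currently targets.
show_current_context() {
  ctx="$(kubectl config current-context)" || return 1
  echo "kubectl context: ${ctx}"
}
```

Calling show_current_context after selecting a cluster in the extension should print the same cluster name you picked in the dropdown.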

To get hands-on with the example shown in the above recording, please follow these instructions:

1. Enable and start your Docker Desktop Kubernetes cluster locally.

Install the Telepresence extension to Docker Desktop if you haven’t already.

2. Install the emojivoto application in your local Docker Desktop cluster (we will use this to simulate a remote K8s cluster).

Use the following command to apply the Emojivoto application to your cluster.

kubectl apply -k github.com/BuoyantIO/emojivoto/kustomize/deployment
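Before moving on, it can help to confirm that the workloads actually came up. The sketch below assumes the application lands in the emojivoto namespace (the namespace used later in this walkthrough); the helper name wait_for_emojivoto and the 120s default timeout are illustrative choices, not values from the original instructions.

```shell
# wait_for_emojivoto is a hypothetical helper name used for illustration.
# Arguments (both optional): namespace, timeout.
wait_for_emojivoto() {
  ns="${1:-emojivoto}"
  timeout="${2:-120s}"
  # Block until every Deployment in the namespace reports Available.
  kubectl wait --namespace "$ns" \
    --for=condition=Available deployment --all \
    --timeout="$timeout" || return 1
  # List the pods so you can see what is running.
  kubectl get pods --namespace "$ns"
}
```

Run wait_for_emojivoto with no arguments to use the defaults, or pass a namespace and timeout if your setup differs.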

3. Start the web service in a single container with Docker.

Create a file docker-compose.yml and paste the following into that file:

version: '3'
services:
  web:
    image: buoyantio/emojivoto-web:v11
    environment:
      - WEB_PORT=8080
      - EMOJISVC_HOST=emoji-svc.emojivoto:8080
      - VOTINGSVC_HOST=voting-svc.emojivoto:8080
      - INDEX_BUNDLE=dist/index_bundle.js
    ports:
      - "8080:8080"
    network_mode: host

In your terminal run docker compose up to start running the web service locally.

4. Using a test container, curl the “list” API endpoint in Emojivoto and watch it fail (because it can’t access the backend cluster).

In a new terminal, we will test the Emojivoto app with another container. Run the following command: docker run -it --rm --network=host alpine. Then install curl: apk --no-cache add curl.

Finally, curl localhost:8080/api/list, and you should get an rpc error message because we are not connected to the backend cluster and cannot resolve the emoji or voting services:

> docker run -it --rm --network=host alpine

apk --no-cache add curl

fetch https://dl-cdn.alpinelinux.org/alpine/v3.15/main/x86_64/APKINDEX.tar.gz

fetch https://dl-cdn.alpinelinux.org/alpine/v3.15/community/x86_64/APKINDEX.tar.gz

(1/5) Installing ca-certificates (20211220-r0)

(2/5) Installing brotli-libs (1.0.9-r5)

(3/5) Installing nghttp2-libs (1.46.0-r0)

(4/5) Installing libcurl (7.80.0-r0)

(5/5) Installing curl (7.80.0-r0)

Executing busybox-1.34.1-r3.trigger

Executing ca-certificates-20211220-r0.trigger

OK: 8 MiB in 19 packages

curl localhost:8080/api/list

{"error":"rpc error: code = Unavailable desc = connection error: desc = \"transport: Error while dialing dial tcp: lookup emoji-svc on 192.168.65.5:53: no such host\""}

5. Run Telepresence connect via Docker Desktop.

Open Docker Desktop and click the Telepresence extension on the left-hand side. Click the blue “Connect” button. If you haven’t done so already, copy an API key from Ambassador Cloud (https://app.getambassador.io/cloud/settings/licenses-api-keys) and paste it in. Select the cluster you deployed the Emojivoto application to by choosing the appropriate Kubernetes context from the menu. Click Next and the extension will connect Telepresence to your local cluster.

6. Re-run the curl command and watch it succeed.

Now let’s re-run the curl command. Instead of an error, the list of emojis should be returned, indicating that we are connected to the remote cluster:

curl localhost:8080/api/list

[{"shortcode":":joy:","unicode":"😂"},{"shortcode":":sunglasses:","unicode":"😎"},{"shortcode":":doughnut:","unicode":"🍩"},{"shortcode":":stuck_out_tongue_winking_eye:","unicode":"😜"},{"shortcode":":money_mouth_face:","unicode":"🤑"},{"shortcode":":flushed:","unicode":"😳"},{"shortcode":":mask:","unicode":"😷"},{"shortcode":":nerd_face:","unicode":"🤓"},{"shortcode":":gh
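The difference between the failing and the succeeding curl can also be checked in a script. The sketch below is one possible approach; the helper names classify_list_response and check_list_endpoint are hypothetical. It relies only on the two response shapes shown in this walkthrough: a JSON object with an "error" key when the backend is unreachable, and a JSON array when the call succeeds.

```shell
# Both helper names below are hypothetical, chosen for illustration.

# classify_list_response labels a response body from /api/list.
classify_list_response() {
  body="$1"
  case "$body" in
    '['*)          echo "ok" ;;                   # JSON array: emoji list returned
    '{"error":'*)  echo "backend unreachable" ;;  # error object from the web service
    *)             echo "unexpected response" ;;  # empty or unrecognized body
  esac
}

# check_list_endpoint fetches the endpoint and classifies the body.
check_list_endpoint() {
  url="${1:-localhost:8080/api/list}"
  # -s silences curl's progress output so only the body is captured.
  body="$(curl -s "$url")"
  classify_list_response "$body"
}
```

Running check_list_endpoint before connecting Telepresence should report the backend as unreachable; after connecting, it should report ok.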

7. Now, let’s “intercept” traffic being sent to the web service running in our K8s cluster and reroute it to the “local” Docker Compose instance by creating a Telepresence intercept.

Select the emojivoto namespace and click the intercept button next to “web”. Set the target port to 8080 and service port to 80, then create the intercept.

After the intercept is created you will see it listed in the UI. Click the nearby blue button with three dots to access your preview URL. Open this URL in your browser, and you can interact with your local instance of the web service with its dependencies running in your Kubernetes cluster.

Congratulations, you’ve successfully created a Telepresence intercept and a sharable preview URL! Send this to your teammates and they will be able to see the results of your local service interacting with the Docker Desktop cluster.

—

Want to learn more about Telepresence or find other life-improving Docker Extensions? Check out the following related resources:

Install the official Telepresence Docker Desktop Extension.

Learn more about Ambassador and their solutions for Kubernetes developers.

Read similar articles covering new Docker Extensions.

Find more helpful Docker Extensions on Docker Hub.

Learn how to create your own extensions for Docker Desktop.

Get started and download Docker Desktop for Windows, Mac, or Linux.

Source: https://blog.docker.com/feed/