Over the last five years, Node.js has maintained its position as a top platform among professional developers. It’s an open source, cross-platform JavaScript runtime environment designed to maximize throughput. Node.js uses an event-driven, non-blocking I/O model that makes it lightweight and efficient — perfect for data intensive, real-time, and distributed applications.

With over 90,500 stars and 24,400 forks, Node’s developer community is highly active. With more devs creating Node.js apps than ever before, finding efficient ways to build and deploy across platforms is key. Let’s discuss how containerization can help before jumping into the meat of our guide.

Why is containerizing a Node application important?

Containerizing your Node application has numerous benefits. First, Docker’s friendly, CLI-based workflow lets any developer build, share, and run containerized Node applications. Second, developers can install their app from a single package and get it up and running in minutes. Third, Node developers can code and test locally while ensuring consistency from development to production.

We’ll show you how to quickly package your Node.js app into a container. We’ll also tackle key concerns that are easy to forget — like image vulnerabilities, image bloat, missing image tags, and poor build performance. Let’s explore a simple todo list app and discuss how our nine tips might apply.

Analyzing a simple todo list application

Let’s first consider a simple todo list application. This is a basic React application with a Node.js backend and a MongoDB database. The source code of the complete project is available within our GitHub samples repo.

Building the application

Luckily, we can build our sample application in just a few steps. First, you’ll want to clone the appropriate awesome-compose sample to use it with your project:

git clone https://github.com/dockersamples/awesome-compose/

cd awesome-compose/react-express-mongodb

docker compose -f docker-compose.yaml up -d

Second, enter the docker compose ps command to list out your services in the terminal. This confirms that everything is accounted for and working properly:

docker compose ps

NAME       COMMAND                  SERVICE    STATUS    PORTS
backend    "docker-entrypoint.s…"   backend    running   3000/tcp
frontend   "docker-entrypoint.s…"   frontend   running   0.0.0.0:3000->3000/tcp
mongo      "docker-entrypoint.s…"   mongo      running   27017/tcp

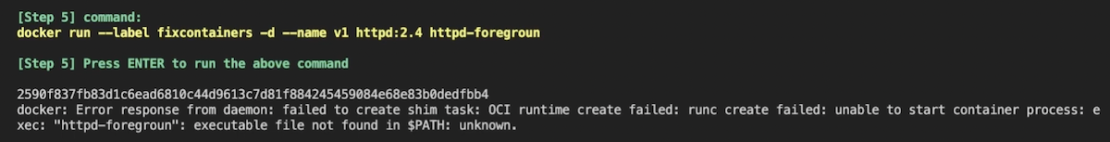

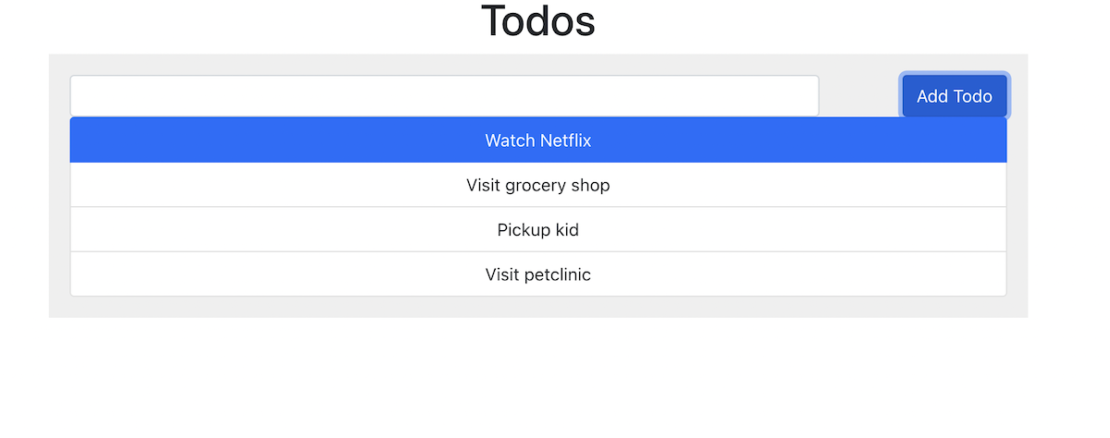

Third, open your browser and navigate to http://localhost:3000 to view your application in action. You’ll see your todo list UI and be able to directly interact with your application:

This is a great way to spin up a functional application in a short amount of time. However, remember that these samples are foundations you can build upon. They’re customizable to better suit your needs. And this can be important from a performance standpoint — since our above example isn’t fully optimized. Next, we’ll share some general optimization tips and more to help you build the best app possible.

Our top nine tips for containerizing and optimizing Node applications

1) Use a specific base image tag instead of “version:latest”

When building Docker images, we always recommend specifying useful tags that codify version information, intended destination (prod or test, for instance), stability, or other useful information for deploying your application across environments.

Don’t rely on the latest tag that Docker automatically pulls, outside of local development. Using latest is unpredictable and may cause unexpected behavior. Each time you pull a latest image version, it could contain a new build or untested code that may break your application.

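Consider the following Dockerfile, which uses the node:lts-buster Docker image as a base image instead of node:latest. This approach may be preferable since lts-buster tracks the stable LTS release line rather than the newest build. Pinning an exact version tag or an image digest is more predictable still: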

# Create image based on the official Node image from dockerhub

FROM node:lts-buster

# Create app directory

WORKDIR /usr/src/app

# Copy dependency definitions

COPY package.json ./package.json

COPY package-lock.json ./package-lock.json

# Install dependencies

#RUN npm set progress=false

# && npm config set depth 0

# && npm i install

RUN npm ci

# Get all the code needed to run the app

COPY . .

# Expose the port the app runs in

EXPOSE 3000

# Serve the app

CMD ["npm", "start"]

Overall, it’s often best to avoid using FROM node:latest in your Dockerfile.
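One way to enforce this tip is a small lint step in your build pipeline. The following is a minimal sketch, not an official Docker tool: the function name findUnpinnedBaseImages is hypothetical, and the parsing is deliberately simple (it skips stage references, scratch, digests, and build-argument images, and ignores line continuations).

```javascript
// Report FROM lines whose base image has no tag or uses the "latest" tag.
// Hypothetical helper for illustration; it only handles single-line FROM instructions.
function findUnpinnedBaseImages(dockerfileText) {
  const stageNames = new Set();
  const findings = [];

  dockerfileText.split(/\r?\n/).forEach((line, index) => {
    const match = /^\s*FROM\s+(?:--platform=\S+\s+)?(\S+)(?:\s+AS\s+(\S+))?/i.exec(line);
    if (!match) return;

    const image = match[1];
    const alias = match[2];

    // "FROM development AS dev-envs" refers to an earlier stage, not a registry image.
    const isStageReference = stageNames.has(image.toLowerCase());
    if (alias) stageNames.add(alias.toLowerCase());

    // Skip stage references, the empty scratch image, digests, and ARG-based images.
    if (isStageReference || image === 'scratch' || image.includes('@') || image.includes('$')) {
      return;
    }

    // A tag is whatever follows the last colon, unless that colon belongs to a registry port.
    const lastSlash = image.lastIndexOf('/');
    const lastColon = image.lastIndexOf(':');
    const tag = lastColon > lastSlash ? image.slice(lastColon + 1) : '';

    if (tag === '' || tag === 'latest') {
      findings.push({ line: index + 1, image });
    }
  });

  return findings;
}
```

You could read your Dockerfile with fs.readFileSync and fail the build when the returned array is not empty.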

2) Use a multi-stage build

With multi-stage builds, a Docker build can use one base image for compilation, packaging, and unit testing. A separate image holds the application’s runtime. This makes the final image more secure and shrinks its footprint (since it doesn’t contain development or debugging tools). Multi-stage Docker builds help keep your builds reproducible and lean. You can create multiple stages within a Dockerfile to control how you build that image.

You can containerize your Node application using a multi-layer approach. Each layer may contain different app components like source code, resources, and even snapshot dependencies. What if we want to package our application into its own image like we mentioned earlier? Check out the following Dockerfile to see how it’s done:

# syntax=docker/dockerfile:1.4

FROM node:lts-buster-slim AS development

WORKDIR /usr/src/app

COPY package.json ./package.json

COPY package-lock.json ./package-lock.json

RUN npm ci

COPY . .

EXPOSE 3000

CMD [ "npm", "run", "dev" ]

FROM development as dev-envs

RUN <<EOF

apt-get update

apt-get install -y --no-install-recommends git

EOF

# install Docker tools (cli, buildx, compose)

COPY --from=gloursdocker/docker / /

CMD [ "npm", "run", "dev" ]

We first add an AS development label to the node:lts-buster-slim statement. This lets us refer to this build stage in other build stages. Next, we add a new development stage labeled dev-envs. We’ll use this stage to run our development.

Now, let’s rebuild our image and run our development environment. We’ll use the docker build command with the --target dev-envs flag to build the dev-envs stage specifically:

docker build -t node-docker --target dev-envs .

3) Fix security vulnerabilities in your Node image

Today’s developers rely on third-party code and apps while building their services. External software can introduce unwanted vulnerabilities into your code if you’re not careful. Leveraging trusted images and continually monitoring your containers helps protect you.

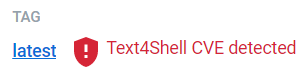

Whenever you build a node:lts-buster-slim Docker image, Docker Desktop prompts you to run security scans of the image to detect any known vulnerabilities.

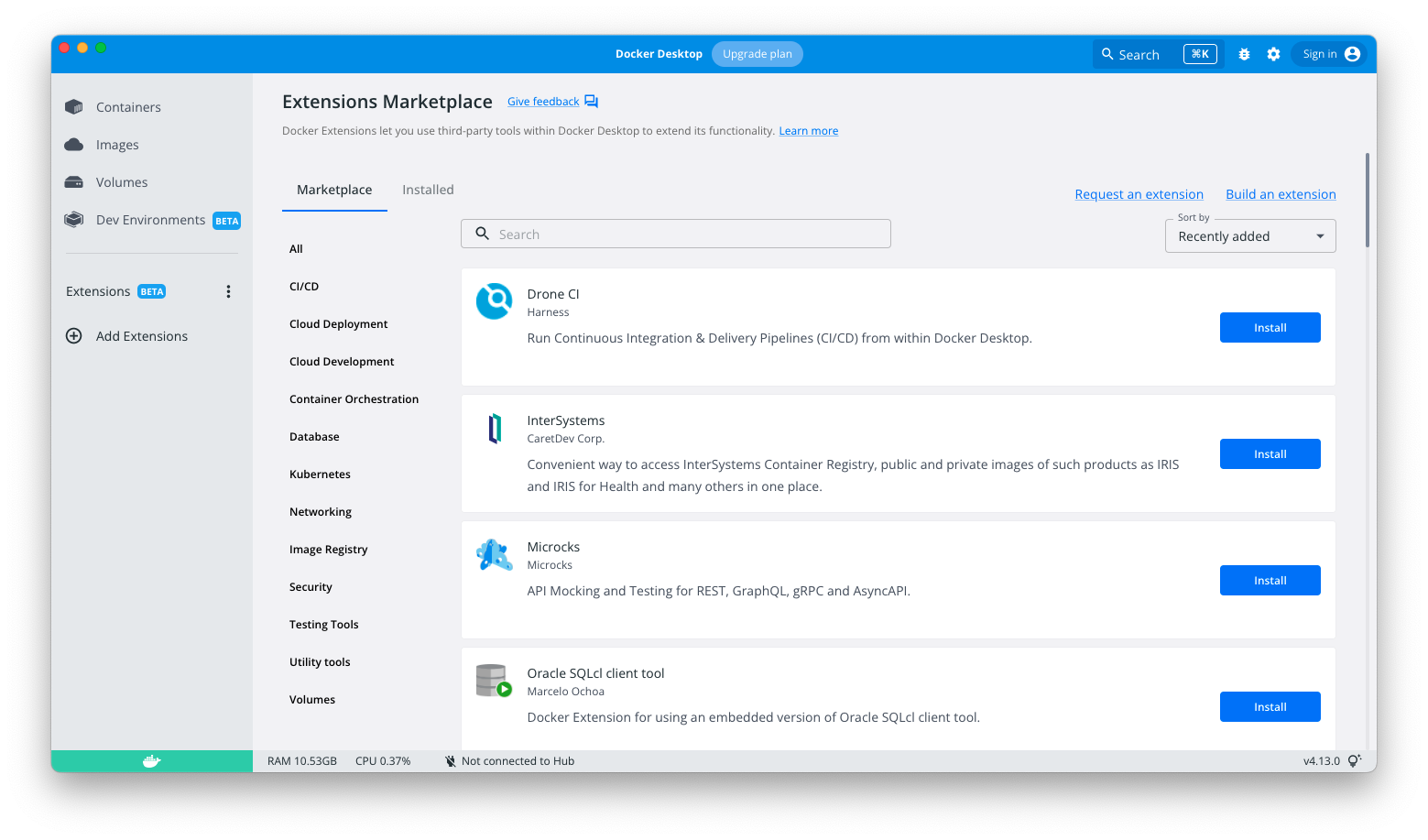

Let’s use the Snyk Extension for Docker Desktop to inspect our Node.js application. To begin, install Docker Desktop 4.8.0+ on your Mac, Windows, or Linux machine. Next, check the box within Settings > Extensions to Enable Docker Extensions.

You can then browse the Extensions Marketplace by clicking the “Add Extensions” button in the left sidebar, then searching for Snyk.

Snyk’s extension lets you rapidly scan both local and remote Docker images to detect vulnerabilities.

Install the Snyk extension and enter the node:lts-buster-slim Node Docker Official Image into the “Select image name” field. You’ll have to log into Docker Hub to start scanning. Don’t worry if you don’t have an account. It’s free and takes just a minute to create.

When running a scan, you’ll see this result within Docker Desktop:

Snyk uncovered 70 vulnerabilities of varying severity during this scan. Once you’re aware of these, you can begin remediation to fortify your image.

That’s not all. You can also run a vulnerability check from the command line with docker scan. Passing your Dockerfile with the -f flag gives you a more detailed report for the image:

docker scan -f Dockerfile node:lts-buster-slim

4) Leverage HEALTHCHECK

The HEALTHCHECK instruction tells Docker how to test a container and confirm that it’s still working. For example, this can detect when a web server is stuck in an infinite loop and cannot handle new connections — even though the server process is still running.

When an application reaches production, an orchestrator like Kubernetes or a service fabric will most likely manage it. By using HEALTHCHECK, you’re sharing the status of your containers with the orchestrator to enable configuration-based management tasks. Here’s an example:

# syntax=docker/dockerfile:1.4

FROM node:lts-buster-slim AS development

# Create app directory

WORKDIR /usr/src/app

COPY package.json ./package.json

COPY package-lock.json ./package-lock.json

RUN npm ci

COPY . .

EXPOSE 3000

CMD [ "npm", "run", "dev" ]

FROM development as dev-envs

RUN <<EOF

apt-get update

apt-get install -y --no-install-recommends git

EOF

RUN <<EOF

useradd -s /bin/bash -m vscode

groupadd docker

usermod -aG docker vscode

EOF

HEALTHCHECK CMD curl --fail http://localhost:3000 || exit 1

# install Docker tools (cli, buildx, compose)

COPY --from=gloursdocker/docker / /

CMD [ "npm", "run", "dev" ]

When HEALTHCHECK is present in a Dockerfile, you’ll see the container’s health in the STATUS column after running the docker ps command. A container that passes this check is healthy. The CLI will label unhealthy containers as unhealthy:

docker ps

CONTAINER ID   IMAGE                            COMMAND                  CREATED          STATUS                             PORTS                    NAMES
1d0c5e3e7d6a   react-express-mongodb-frontend   "docker-entrypoint.s…"   23 seconds ago   Up 21 seconds (health: starting)   0.0.0.0:3000->3000/tcp   frontend
a89721d3c42d   react-express-mongodb-backend    "docker-entrypoint.s…"   23 seconds ago   Up 21 seconds (health: starting)   3000/tcp                 backend
194c953f5653   mongo:4.2.0                      "docker-entrypoint.s…"   3 minutes ago    Up 3 minutes                       27017/tcp                mongo

You can also define a healthcheck (note the case difference) within Docker Compose! This can be pretty useful when you’re not using a Dockerfile. Instead of writing a plain text instruction, you’ll write this configuration in YAML format.

Here’s a sample configuration that lets you define healthcheck within your docker-compose.yml file:

backend:
  container_name: backend
  restart: always
  build: backend
  volumes:
    - ./backend:/usr/src/app
    - /usr/src/app/node_modules
  depends_on:
    - mongo
  networks:
    - express-mongo
    - react-express
  expose:
    - 3000
  healthcheck:
    test: ["CMD", "curl", "-f", "http://localhost:3000"]
    interval: 1m30s
    timeout: 10s
    retries: 3
    start_period: 40s
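Both health checks above rely on curl, which slim base images may not include. Since Node.js is already in the image, the probe can be written in JavaScript instead. Here is a minimal sketch under that assumption; the function name checkHealth and the two-second timeout are illustrative choices, not part of the sample project.

```javascript
const http = require('http');

// Resolve to true when the URL answers with a 2xx or 3xx status within the timeout,
// and to false on any error, timeout, or 4xx/5xx response (similar to curl --fail).
function checkHealth(url, timeoutMs = 2000) {
  return new Promise((resolve) => {
    const request = http.get(url, { timeout: timeoutMs }, (response) => {
      response.resume(); // Discard the body; only the status code matters here.
      resolve(response.statusCode >= 200 && response.statusCode < 400);
    });

    // The 'timeout' event does not abort the request by itself, so destroy it explicitly.
    request.on('timeout', () => request.destroy(new Error('health check timed out')));
    request.on('error', () => resolve(false));
  });
}
```

A hypothetical healthcheck.js file could end with checkHealth('http://localhost:3000').then((ok) => process.exit(ok ? 0 : 1)); and be wired up with HEALTHCHECK CMD node healthcheck.js in the Dockerfile.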

5) Use .dockerignore

To increase build performance, we recommend creating a .dockerignore file in the same directory as your Dockerfile. For this tutorial, your .dockerignore file should contain just one line:

node_modules

This line excludes the node_modules directory, which contains the dependencies installed by npm, from the Docker build context. There are many good reasons to carefully structure a .dockerignore file, but this simple file is good enough for now.

Let’s now explain the build context and why it’s essential. The docker build command builds Docker images from a Dockerfile and a “context.” This context is the set of files located in your specified PATH or URL. The build process can reference any of these files.

In practice, the context is usually the directory where the developer works. It could be a folder on Mac or Windows, or a Linux directory. This directory contains all necessary application components like source code, configuration files, libraries, and plugins. With a .dockerignore file, we can decide which of these elements to exclude while building your new image.

Here’s how your .dockerignore file might look if you choose to exclude the node_modules directory from your build:

Backend:

Frontend:
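To get a feel for how much the build context shrinks, you can compare its size with and without node_modules. This is a rough sketch only: contextSize is a hypothetical helper that skips top-level entries by exact name, whereas real .dockerignore files support richer patterns.

```javascript
const fs = require('fs');
const path = require('path');

// Sum the size in bytes of all regular files under `root`, skipping any
// top-level entries whose names appear in `excludedTopLevel`.
// Symbolic links are not followed and are not counted.
function contextSize(root, excludedTopLevel = []) {
  const walk = (dir, isRoot) => {
    let total = 0;
    for (const entry of fs.readdirSync(dir, { withFileTypes: true })) {
      if (isRoot && excludedTopLevel.includes(entry.name)) continue;
      const fullPath = path.join(dir, entry.name);
      if (entry.isDirectory()) {
        total += walk(fullPath, false);
      } else if (entry.isFile()) {
        total += fs.statSync(fullPath).size;
      }
    }
    return total;
  };
  return walk(root, true);
}
```

Calling contextSize('.') and contextSize('.', ['node_modules']) from your project directory shows roughly how many bytes the single-line .dockerignore keeps out of the context.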

6) Run as a non-root user for security purposes

Running applications with user privileges is safer since it helps mitigate risks. The same applies to Docker containers. By default, Docker containers and their running apps have root privileges. It’s therefore best to run Docker containers as non-root users.

You can do this by adding USER instructions within your Dockerfile. The USER instruction sets the preferred user name (or UID) and optionally the user group (or GID) while running the image — and for any subsequent RUN, CMD, or ENTRYPOINT instructions:

# syntax=docker/dockerfile:1.4

FROM node:lts-buster AS development

WORKDIR /usr/src/app

COPY package.json ./package.json

COPY package-lock.json ./package-lock.json

RUN npm ci

COPY . .

EXPOSE 3000

CMD ["npm", "start"]

FROM development as dev-envs

RUN <<EOF

apt-get update

apt-get install -y --no-install-recommends git

EOF

RUN <<EOF

useradd -s /bin/bash -m vscode

groupadd docker

usermod -aG docker vscode

EOF

# install Docker tools (cli, buildx, compose)

COPY --from=gloursdocker/docker / /

# Run the container as the non-root user created above

USER vscode

CMD [ "npm", "start" ]

7) Favor multi-architecture Docker images

Your CPU can only run binaries for its native architecture. For example, Docker images built for an x86 system can’t run on an Arm-based system. With Apple fully transitioning to their custom Arm-based silicon, it’s possible that your x86 (Intel or AMD) container image won’t work with Apple’s M-series chips.

Consequently, we always recommend building multi-arch container images. The mplatform/mquery Docker image lets you query the multi-platform status of any public image in any public registry:

docker run --rm mplatform/mquery node:lts-buster

Unable to find image 'mplatform/mquery:latest' locally

d0989420b6f0: Download complete

af74e063fc6e: Download complete

3441ed415baf: Download complete

a0c6ee298a93: Download complete

894bcacb16df: Downloading [=============================================> ] 3.146MB/3.452MB

Image: node:lts-buster (digest: sha256:a5d9200d3b8c17f0f3d7717034a9c215015b7aae70cb2a9d5e5dae7ff8aa6ca8)

* Manifest List: Yes (Image type: application/vnd.docker.distribution.manifest.list.v2+json)

* Supported platforms:

- linux/amd64

- linux/arm/v7

- linux/arm64/v8

We introduced the docker buildx command to help you build multi-architecture images. Buildx is a Docker component that enables many powerful build features with a familiar Docker user experience. All Buildx builds run using the Moby BuildKit engine.

BuildKit is designed to excel at multi-platform builds, or those not just targeting the user’s local platform. When you invoke a build, you can set the --platform flag to specify the build output’s target platform (like linux/amd64, linux/arm/v7, linux/arm64/v8, etc.):

docker buildx build --platform linux/amd64,linux/arm/v7 -t node-docker .
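When debugging a multi-arch image, it can help to confirm from inside the container which variant is actually running. The sketch below maps Node’s process.arch values to the platform names used above. The function name dockerPlatformFor is hypothetical, the table covers only the three platforms listed earlier, and mapping arm to arm/v7 is an assumption that may not hold for older Arm boards.

```javascript
// Map Node.js process.arch values to the Docker platform strings used in this guide.
// Assumption: Linux containers only, and 32-bit Arm is treated as arm/v7.
const NODE_ARCH_TO_DOCKER_PLATFORM = {
  x64: 'linux/amd64',
  arm: 'linux/arm/v7',
  arm64: 'linux/arm64/v8',
};

// Return the Docker platform string for a Node architecture, or null if it is not in the table.
function dockerPlatformFor(arch = process.arch) {
  return Object.prototype.hasOwnProperty.call(NODE_ARCH_TO_DOCKER_PLATFORM, arch)
    ? NODE_ARCH_TO_DOCKER_PLATFORM[arch]
    : null;
}
```

Logging dockerPlatformFor() at startup gives a quick signal that the container is running the variant you expected rather than an emulated one.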

8) Explore graceful shutdown options for Node

Docker containers are ephemeral in nature. They can be stopped and destroyed, then either rebuilt or replaced with minimal effort. When you stop a container, Docker sends a SIGTERM signal to its main process and waits for a short grace period (10 seconds by default with docker stop) before forcibly killing it. During this grace period, your app should finish handling ongoing requests and clean up resources in a timely fashion.

Node.js receives signals like SIGINT and SIGTERM from the OS and lets your app decide how to handle them, which is key to properly shutting down your app. If you don’t write code or use a module to handle them, your app won’t shut down gracefully. When Node.js runs as PID 1 in a container, it’ll ignore those signals until Docker or Kubernetes kills it after a timeout period.

Using certain init options like docker run --init or tini within your Dockerfile is viable when you can’t change your app code. However, we recommend writing code that handles these signals properly for graceful shutdowns.
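As an illustration of handling signals in code, here is a minimal sketch for an app built on Node’s http server. The helper name installGracefulShutdown, the 10-second default timeout, and the injectable exit function are assumptions made for this example, not part of the sample project.

```javascript
// Register SIGTERM and SIGINT handlers that stop accepting new connections,
// let in-flight requests finish, and then exit. If closing takes longer than
// `timeoutMs`, exit with a non-zero code instead of waiting to be killed.
function installGracefulShutdown(server, { timeoutMs = 10000, exit = process.exit } = {}) {
  let shuttingDown = false;

  const shutdown = (signal) => {
    if (shuttingDown) return; // Ignore repeated signals.
    shuttingDown = true;
    console.log(`${signal} received, closing HTTP server`);

    const forceExit = setTimeout(() => exit(1), timeoutMs);
    forceExit.unref(); // Do not keep the process alive just for this timer.

    server.close((error) => {
      clearTimeout(forceExit);
      // Close database connections and other resources here before exiting.
      exit(error ? 1 : 0);
    });
  };

  process.on('SIGTERM', () => shutdown('SIGTERM'));
  process.on('SIGINT', () => shutdown('SIGINT'));
  return shutdown;
}
```

You would call installGracefulShutdown(server) right after server.listen(3000). Keep the timeout below the grace period your orchestrator allows, so the app exits on its own terms.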

Check out this video from Docker Captain Bret Fisher (12:57) where he covers all three available Node shutdown options in detail.

9) Use the OpenTelemetry API to measure Node.js performance

How do Node developers make their apps faster and more performant? Generally, developers rely on third-party observability tools to measure application performance. This performance monitoring is essential for creating multi-functional Node applications with top notch user experiences.

Observability extends beyond application performance. Metrics, traces, and logs are now front and center. Metrics help developers to understand what’s wrong with the system, while traces help you discover how it’s wrong. Logs tell you why it’s wrong. Developers can dig into particular metrics and traces to holistically understand system behavior.

Observing Node applications means tracking your Node metrics, request rates, request error rates, and request durations. OpenTelemetry is one popular collection of tools and APIs that help you instrument your Node.js application.

You can also use an open-source tool like SigNoz to analyze your app’s performance. Since SigNoz offers a full-stack observability tool, you don’t need to rely on multiple tools.
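To make those three request signals concrete, here is a small sketch that records them by hand with Node’s built-in timer. It is not the OpenTelemetry API, which would typically collect and export this data for you. The createRequestMetrics name and the choice to count 5xx responses as errors are assumptions for illustration.

```javascript
// Track request count, error rate, and average duration in memory.
function createRequestMetrics() {
  let count = 0;
  let errors = 0;
  let totalDurationMs = 0;

  // Record one finished request. Responses with a 5xx status count as errors.
  function record(statusCode, durationMs) {
    count += 1;
    totalDurationMs += durationMs;
    if (statusCode >= 500) errors += 1;
  }

  // Wrap an http request handler so every response is timed and recorded.
  function instrument(handler) {
    return (req, res) => {
      const start = process.hrtime.bigint();
      res.on('finish', () => {
        const durationMs = Number(process.hrtime.bigint() - start) / 1e6;
        record(res.statusCode, durationMs);
      });
      return handler(req, res);
    };
  }

  // Return the current totals; rates are 0 until the first request is recorded.
  function snapshot() {
    return {
      count,
      errorRate: count === 0 ? 0 : errors / count,
      avgDurationMs: count === 0 ? 0 : totalDurationMs / count,
    };
  }

  return { record, instrument, snapshot };
}
```

With an http server, you would pass metrics.instrument(handler) to http.createServer and read metrics.snapshot() periodically. A production setup would export these values to an observability backend rather than keep them in memory.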

Conclusion

In this guide, we explored many ways to optimize your Docker images — from carefully crafting your Dockerfile to securing your image via Snyk scanning. Building better Node.js apps doesn’t have to be complex. By nailing some core fundamentals, you’ll be in great shape.

If you’d like to dig deeper, check out these additional recommendations and best practices for building secure, production-grade Docker images:

Docker development best practices

Dockerfile best practices

Building images with BuildKit

Best practices for scanning images

Getting started with the Snyk Extension

Source: https://blog.docker.com/feed/