Announcing Docker Hub OCI Artifacts Support

We’re excited to announce that Docker Hub can now help you distribute any type of application artifact! You can now keep everything in one place instead of spreading it across multiple registries.

Before today, you could only use Docker Hub to store and distribute container images, or artifacts usable by container runtimes. This became a limitation of our platform, since container image distribution is just the tip of the application delivery iceberg. Modern application delivery requires numerous types of artifacts:

- Helm charts
- WebAssembly modules
- Docker Volumes
- SBOMs
- OPA bundles
- …and many other custom artifacts

Developers often share these with clients that need them since they add immense value to each project. And while the OCI working groups are busy releasing the latest OCI Artifact Specification, we still have to package application artifacts as OCI images in the meantime.

Docker Hub acts as an image registry and is perfectly suited for distributing application artifacts. That’s why we’ve added support for any software artifact — packaged as an OCI image — to Docker Hub.

What’s the Open Container Initiative (OCI)?

Back in 2015, we helped establish the Open Container Initiative as an open governance structure to standardize container image formats, container runtimes, and image distribution.

The OCI maintains a few core specifications. These govern the following:

- How to package filesystem bundles
- How to launch containerized, cross-platform apps
- How to make packaged content accessible to remote clients

The Runtime Specification determines how OCI images and runtimes interact. Next, the Image Specification outlines how to create OCI images. Finally, the Distribution Specification defines how to make content distribution interoperable.

The OCI’s overall aim is to boost transparency, runtime predictability, software compatibility, and distribution. We’ve since donated our own container format and runC, our OCI-compliant runtime, to the OCI, and we’ve given the OCI-compliant distribution project to the CNCF.

Why are we adding OCI support?

Container images are integral to supporting your containerized application builds. We know that images accumulate between projects, making centralized cloud storage essential to efficiently manage resources. Developers shouldn’t have to rely on local storage or wonder if these resources are readily accessible. However, we also know that developers want to store a variety of artifacts within Docker Hub.

Storing your artifacts in Docker Hub unlocks “anywhere access” while also enabling improved collaboration through Docker Hub’s standard sharing capabilities. This aligns us more closely with the OCI’s content distribution mission by giving users greater control over key pieces of application delivery.

How do I manage different OCI artifacts?

We recommend using dedicated tools to help manage non-container OCI artifacts, like the Helm CLI for Helm charts or the OCI Registry-as-Storage (ORAS) CLI for arbitrary content types.

Let’s walk through a few use cases to showcase OCI support in Docker Hub.

Working with Helm charts

Helm chart support was your most-requested feature, and we’ve officially added it to Docker Hub! So, how do you take advantage? We’ll create a simple Helm chart and push it to Docker Hub. This process will follow Helm’s official guide for storing Helm charts as OCI images in registries.

First, we’ll create a demo Helm chart:

$ helm create demo

This generates the familiar Helm chart boilerplate, which you can edit:

demo
├── Chart.yaml
├── charts
├── templates
│   ├── NOTES.txt
│   ├── _helpers.tpl
│   ├── deployment.yaml
│   ├── hpa.yaml
│   ├── ingress.yaml
│   ├── service.yaml
│   ├── serviceaccount.yaml
│   └── tests
│       └── test-connection.yaml
└── values.yaml

3 directories, 10 files

Once we’re done editing, we’ll package the Helm chart into a versioned archive, which Helm stores as an OCI image when we push it:

$ helm package demo

Successfully packaged chart and saved it to: /Users/martine/tmp/demo-0.1.0.tgz

Don’t forget to log in to Docker Hub before pushing your Helm chart. We recommend creating a Personal Access Token (PAT) for this. You can export your PAT as an environment variable and log in as follows:

$ echo "$REG_PAT" | helm registry login registry-1.docker.io -u martine --password-stdin
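The login step can also be wrapped in a small guard, so that an unset token fails early instead of sending an empty password. The sketch below is illustrative only: `hub_login` and `HUB_USER` are hypothetical names, `REG_PAT` is the variable used above, and the only Helm call is the `helm registry login` command already shown.

```shell
# Hypothetical helper: log in to Docker Hub with a PAT taken from the environment.
# Assumes REG_PAT holds the token and HUB_USER holds the Docker Hub username.
hub_login() {
  if [ -z "${REG_PAT:-}" ]; then
    echo "REG_PAT is not set; create a Personal Access Token first" >&2
    return 1
  fi
  if [ -z "${HUB_USER:-}" ]; then
    echo "HUB_USER is not set" >&2
    return 1
  fi
  # printf avoids echo's platform-dependent handling of backslashes and -n.
  printf '%s\n' "$REG_PAT" |
    helm registry login registry-1.docker.io -u "$HUB_USER" --password-stdin
}
```

With both variables exported, calling `hub_login` runs the same login as the one-liner above.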

Pushing your Helm chart

You’re now ready to push your first Helm chart to Docker Hub! But first, make sure you have write access to your Helm chart’s destination namespace. In this example, let’s push to the docker namespace:

$ helm push demo-0.1.0.tgz oci://registry-1.docker.io/docker

Pushed: registry-1.docker.io/docker/demo:0.1.0

Digest: sha256:1e960ad1693c234b66ec1f9ddce80986cbf7159d2bb1e9a6d2c2cd6e89925e54
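Helm derives the repository name and tag from the chart’s `name` and `version` fields, which is why pushing `demo-0.1.0.tgz` to the `docker` namespace produced `docker/demo:0.1.0`. As a rough sketch, the reference can be predicted before pushing. Here `chart_ref` is a hypothetical helper, and it assumes plain, unquoted top-level `name:` and `version:` lines like those `helm create` writes.

```shell
# Hypothetical helper: predict the reference that `helm push` will report.
# Assumes Chart.yaml has plain top-level "name:" and "version:" lines.
chart_ref() {
  chart_dir=$1
  namespace_url=$2   # e.g. oci://registry-1.docker.io/docker
  name=$(sed -n 's/^name:[[:space:]]*//p' "$chart_dir/Chart.yaml" | head -n 1)
  version=$(sed -n 's/^version:[[:space:]]*//p' "$chart_dir/Chart.yaml" | head -n 1)
  # Strip the oci:// scheme, since Helm prints the reference without it.
  printf '%s/%s:%s\n' "${namespace_url#oci://}" "$name" "$version"
}

# Self-contained demo using a throwaway Chart.yaml (not a complete chart).
tmp=$(mktemp -d)
mkdir "$tmp/demo"
printf 'apiVersion: v2\nname: demo\nversion: 0.1.0\n' > "$tmp/demo/Chart.yaml"
chart_ref "$tmp/demo" oci://registry-1.docker.io/docker
# prints registry-1.docker.io/docker/demo:0.1.0
```

The same name and version are what you would pass when pulling the chart back, for example with `helm pull oci://registry-1.docker.io/docker/demo --version 0.1.0`.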

Viewing your Helm chart and using filters

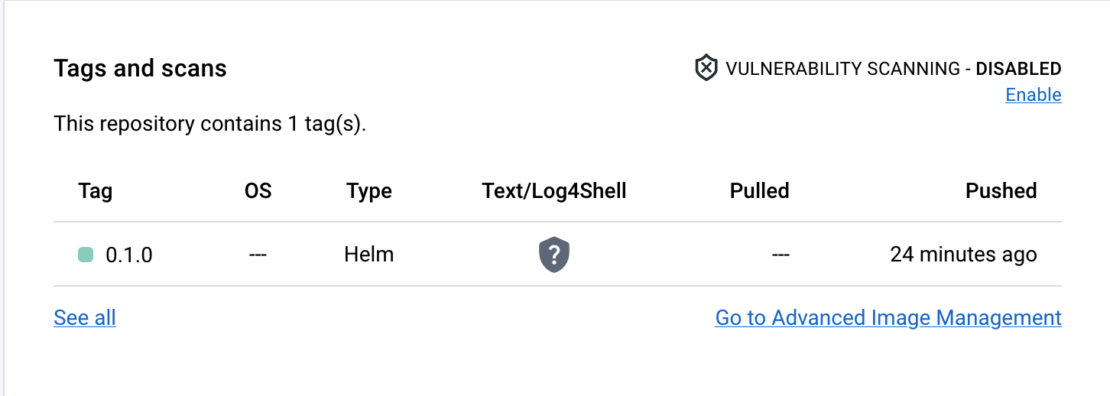

Now, If you log in to Docker Hub and navigate to the demo repository detail, you’ll find your Helm chart in the list of repository tags:

You can navigate to the Helm chart page by clicking on the tag. The page displays useful Helm CLI commands:

Repository content management is now easier. We’ve improved content discoverability by adding a drop-down button to quickly filter the repository list by content type. Simply click the Content drop-down and select Helm from the list:

Working with volumes

Developers use volumes throughout the Docker ecosystem to share arbitrary application data like database files. You can already back up your volumes using the Volume Backup & Share extension that we recently launched. You can now also filter repositories to find those containing volumes using the same drop-down menu.

What if you want to push a volume to Docker Hub without using the Volume Backup & Share extension — say from the command line — and still have Docker Hub recognize it as a volume? The easiest method leverages the ORAS project. Let’s walk through a simple use case that mirrors the examples documented by the ORAS CLI.

First, we’ll create a simple file we want to package as a volume:

$ echo "bar" > foo.txt

For Docker Hub to recognize this volume, we must attach a config file to the OCI image upon creation and mark it with a specific media type. The file can contain arbitrary content, so let’s create one:

$ echo '{"name":"foo","value":"bar"}' > config.json
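Because the JSON itself contains double quotes, the way the string is quoted in the shell determines what lands in the file. The following sketch contrasts the two forms. It writes to a temporary directory, and `broken.json` is a hypothetical file name used only for comparison.

```shell
tmp=$(mktemp -d)

# Single quotes keep the inner double quotes intact.
printf '%s\n' '{"name":"foo","value":"bar"}' > "$tmp/config.json"

# Nested double quotes are consumed by the shell, leaving text that is not valid JSON.
printf '%s\n' "{"name":"foo","value":"bar"}" > "$tmp/broken.json"

cat "$tmp/config.json"   # {"name":"foo","value":"bar"}
cat "$tmp/broken.json"   # {name:foo,value:bar}
```

Docker Hub only looks at the config’s media type here, so either file would be accepted, but keeping the config valid JSON is the safer choice for other tools that may read it.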

With this step completed, you’re now ready to push your volume.

Pushing your volume

Here’s where the magic happens. The media type Docker Hub needs to successfully recognize the OCI image as a volume is application/vnd.docker.volume.v1+tar.gz. You can attach the media type to the config file and push it to Docker Hub with the following command (plus its resulting output):

$ oras push registry-1.docker.io/docker/demo:0.0.1 --config config.json:application/vnd.docker.volume.v1+tar.gz foo.txt:text/plain

Uploading b5bb9d8014a0 foo.txt

Uploaded b5bb9d8014a0 foo.txt

Pushed registry-1.docker.io/docker/demo:0.0.1

Digest: sha256:f36eddbab8459d0ad1436b7ca8af6bfc512ec74f45d8136b53c16db87562016e
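To make the role of the config media type more concrete, here is a rough sketch of the kind of OCI image manifest such a push describes. It is an approximation built by hand, not the exact document ORAS uploads. The helper names `sha256_of` and `size_of` are hypothetical, and the layer annotation is an assumption based on how ORAS usually records file names.

```shell
# Portable SHA-256 helper: sha256sum on Linux, shasum on macOS.
sha256_of() {
  if command -v sha256sum >/dev/null 2>&1; then
    sha256sum "$1" | cut -d' ' -f1
  else
    shasum -a 256 "$1" | cut -d' ' -f1
  fi
}

# Byte size without padding (wc pads its output on some systems).
size_of() { wc -c < "$1" | tr -d ' '; }

work=$(mktemp -d)
echo "bar" > "$work/foo.txt"
printf '%s\n' '{"name":"foo","value":"bar"}' > "$work/config.json"

# Approximate manifest: the config descriptor carries the volume media type,
# and the single layer descriptor points at foo.txt.
cat > "$work/manifest.json" <<EOF
{
  "schemaVersion": 2,
  "mediaType": "application/vnd.oci.image.manifest.v1+json",
  "config": {
    "mediaType": "application/vnd.docker.volume.v1+tar.gz",
    "digest": "sha256:$(sha256_of "$work/config.json")",
    "size": $(size_of "$work/config.json")
  },
  "layers": [
    {
      "mediaType": "text/plain",
      "digest": "sha256:$(sha256_of "$work/foo.txt")",
      "size": $(size_of "$work/foo.txt"),
      "annotations": { "org.opencontainers.image.title": "foo.txt" }
    }
  ]
}
EOF

cat "$work/manifest.json"
```

The `config.mediaType` field is the part Docker Hub inspects to decide how to label the content. The layer media type describes only the file itself.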

We now have two types of content in the demo repository as shown in the following breakdown:

If you navigate to the content page, you’ll see some basic information that we’ll expand upon in future iterations. This will boost visibility into a volume’s contents.

Handling generic content types

If you don’t use the application/vnd.docker.volume.v1+tar.gz media type when pushing the volume with the ORAS CLI, Docker Hub will mark the artifact as generic to distinguish it from recognized content.

Let’s push the same volume but use application/vnd.random.volume.v1+tar.gz media type instead of the one known to Docker Hub:

$ oras push registry-1.docker.io/docker/demo:0.1.1 --config config.json:application/vnd.random.volume.v1+tar.gz foo.txt:text/plain

Exists 7d865e959b24 foo.txt

Pushed registry-1.docker.io/docker/demo:0.1.1

Digest: sha256:d2fb2b176ee4e326f1f34ecdaede8db742f2c444cb2c9ceff0f5c8b743281c95

You can see the new content is assigned a generic Other type. We can still view the tagged content’s media type by hovering over the type label. In this case, that’s application/vnd.random.volume.v1+tar.gz:
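The behavior described in this section can be modeled as a lookup on the config media type. The sketch below is an assumption about how such a classification might work, not Docker Hub’s actual implementation. Only the volume media type and the Helm, volume, and Other labels come from this post. The Helm and image config media types are the ones those tools commonly use, and the `Image` label is a placeholder.

```shell
# Hypothetical mapping from a manifest's config media type to a content label.
# Unknown media types fall through to the generic "Other" label.
content_type_for() {
  case $1 in
    application/vnd.docker.volume.v1+tar.gz)         echo "Volume" ;;
    application/vnd.cncf.helm.config.v1+json)        echo "Helm" ;;
    application/vnd.oci.image.config.v1+json)        echo "Image" ;;
    application/vnd.docker.container.image.v1+json)  echo "Image" ;;
    *)                                               echo "Other" ;;
  esac
}

content_type_for application/vnd.docker.volume.v1+tar.gz   # Volume
content_type_for application/vnd.random.volume.v1+tar.gz   # Other
```

This matches what the two pushes showed: the recognized media type is labeled as a volume, while the made-up one is grouped under Other.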

If you’d like to filter the repositories that contain both Helm charts and volumes, use the same drop-down menu in the top-right corner:

Working with container images

Finally, you can continue pushing your regular container images to the exact same repository as your other artifacts. Say we re-tag the Redis Docker Official Image and push it to Docker Hub:

$ docker tag redis:3.2-alpine docker/demo:v1.2.2

$ docker push docker/demo:v1.2.2

The push refers to repository [docker.io/docker/demo]

a1892d5d1a6d: Mounted from library/redis

e41876edb6d0: Mounted from library/redis

7119119b7542: Mounted from library/redis

169a281fff0f: Mounted from library/redis

04c8ef03e935: Mounted from library/redis

df64d3292fd6: Mounted from library/redis

v1.2.2: digest: sha256:359cfebb00bef01cda3bc1ca453e6455c770a246a06ad8df499a28118c144eda size: 1570

Viewing your container images

If you now visit the demo repository page on Docker Hub, you’ll see every artifact listed under Tags and scans:

We’ll also introduce more features soon to help you better organize your application content, so stay tuned for more announcements!

Follow along for more updates

All developers can now access and choose from more robust sets of artifacts while building and distributing applications with Docker Hub. Not only does this remove existing roadblocks, but it’ll hopefully encourage you to create and distribute even more exciting applications.

But our mission doesn’t end here! We’re continually working to bolster our OCI support. While the OCI Artifact Specification is considered a release candidate, full Docker Hub support for OCI Reference Types and the accompanying Referrers API is on the horizon. Stay tuned for upcoming enhancements, improved repo organization, and more.

Source: https://blog.docker.com/feed/