Everyone’s a Snowflake: Designing Hardened Image Processes for the Real World

Hardened container images and distroless software are the new hotness, as startups and incumbents alike pile into this fast-growing market. In theory, hardened images provide not only a smaller attack surface but also operational simplicity. In practice, there remains a fundamental, and often painful, tension between the promised security perfection of hardened images and the reality of building software on top of those images and running them in production. This creates real challenges for platform engineering teams trying to hit the Golden Mean between usability and security.

Why? Everyone’s a snowflake.

No two software stacks, CI/CD pipeline setups, or security profiles are exactly the same. In software, small differences can cause big headaches. When developers can no longer access their preferred debugging tools, or cannot add the services they are used to running alongside their applications in a container, the result is friction and frustration. Naturally, developers who must ship find workarounds or other ways to get the functionality they need. This snowflake reality can have a snowball effect: it drives modifications underground, moves them outside the hardened image process, or creates backlogs at hardened image vendors whose products were designed for rigid security, not reality. In the worst case, teams simply ditch distroless, stymieing adoption.

The counterintuitive truth? Rigid container solutions can have the opposite effect, making organizations less secure. This is why the process of designing and applying hardened images is most effective when developer and DevOps needs are taken into account and flexibility is baked into the process. At the same time, too much choice is chaos and chaos generates excessive risk. This is a delicate balance and the ultimate challenge for platform ops today.

The Snowflake Problem: Why Every Environment Is Unique

The Snowflake Challenge in container security is pervasive. Walk into any engineering team and you'll find them standardized on a particular OS distro, where changes to that distro will likely cause unforeseen disruptions. They've got applications that need to connect to internal services with self-signed certificates, but hardened images often lack the right CA bundles or an easy way to add custom ones. They need to debug production issues with standard system tools, but hardened images leave those tools out. They're running containers with multiple processes because splitting legacy applications into separate containers would break existing functionality and require months of rewriting. And they rely on package managers to install operational tools that security teams never planned for.

Distribution, tool and package loyalty isn't just preference. It's years of institutional knowledge baked into deployment scripts, monitoring configurations, and troubleshooting runbooks. Teams that have mastered a specific toolchain don't want to retrain their entire organization just to get security benefits they can't immediately see. Platform teams know this and will favor hardened image solutions that do not add cognitive load.

The reality is that real-world deployment patterns rarely match the security team's slideshow. Multi-service containers are everywhere because deadlines matter more than architectural purity. These environments work, they're tested, and they're supporting actual users. Asking teams to rebuild their entire stack for theoretical security improvements feels like asking them to fix something that isn't obviously broken, and they will find a way not to. So the platform team's job is to find a hardened image solution that recognizes these realities and adjusts to them rather than forcing behavioral change.

Familiarity as a Security Strategy

The most secure system in the world is worthless if your development teams route around it or ignore it. Building in flexibility, and at the very least giving teams what they are used to having, can make security nearly invisible and quite palatable.

In this light, multi-distro support from a hardened image vendor isn't a luxury feature. It's an adoption requirement and a critical way to mitigate the Snowflake Challenge. A hardened image solution that supports multiple major distros removes the biggest barrier to getting started: the fear of having to adopt an unfamiliar operating system. Once platform teams know that the operating system in their hardened images will be familiar, they can confidently begin hardening their existing stacks without worrying about retraining their entire engineering organization on a new base distribution or rewriting their deployment tooling.

Self-service customization turns potential friction into an adoption driver. When developers can add their required CA certificates easily through self-service instead of filing support tickets, they actually use the tool. When they can merge their existing images with hardened bases through automated workflows, the migration path becomes clear. The goal isn't to eliminate necessary customization but to make it just another simple, routine step. Low-friction modifications lead to smooth adoption paths and satisfied developers.

The adoption math is straightforward. Difficulty correlates inversely with security coverage. A perfectly hardened image that only 20% of teams can use provides less overall organizational security than a reasonably hardened image that 80% of teams adopt. Meeting developers where they are beats forcing architectural changes every time.

Migration Friction and Community Trust

The gap between current state and hardened images can feel daunting to many teams. Their existing Dockerfiles might be single-stage builds with years of accumulated dependencies. Their CI/CD pipelines assume certain tools will be available. Their developers assume packages they are comfortable with will be supported.

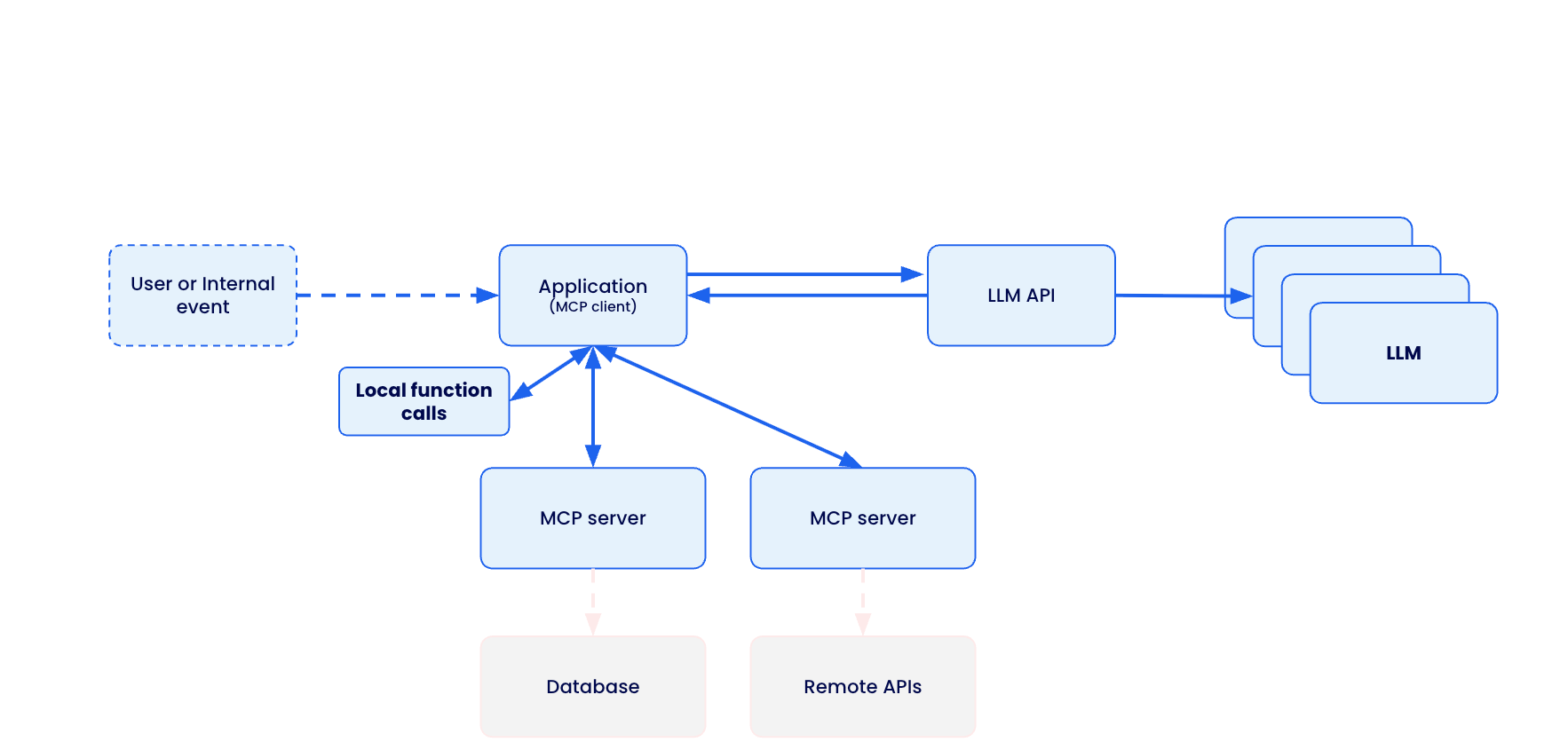

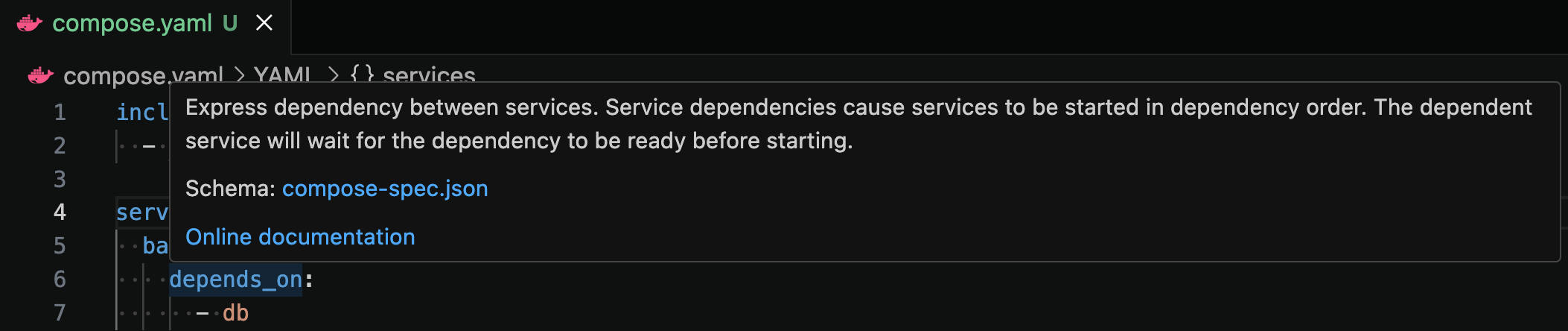

Modern tooling for hardened images can bridge this gap through progressive assistance. AI-powered converters can help translate existing Dockerfiles into multi-stage builds compatible with hardened bases, and guided automation removes much of the technical friction of converting legacy applications to hardened images. The tools handle the mechanical work of separating build dependencies from runtime dependencies while preserving application functionality. Teams can keep their existing development flows with less disruption and toil. The result is broader security adoption and a smaller attack surface.
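To make that "mechanical work" concrete, here is a toy sketch of the split a Dockerfile converter performs: everything the old single-stage image installed stays in the build stage, and only what the running application needs is carried into the runtime stage. The `Package` type, the `render_multistage` helper, the `example/hardened-*` image names and the `make` build step are all hypothetical placeholders, not Docker's converter or any real image.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Package:
    name: str
    runtime: bool  # True if the running app needs it; False if only the build does


def split_packages(packages: list[Package]) -> tuple[list[str], list[str]]:
    """Return (build-stage packages, runtime-stage packages)."""
    # The build stage keeps everything the old single-stage Dockerfile installed.
    build = [p.name for p in packages]
    # The runtime stage keeps only what the application needs to run.
    runtime = [p.name for p in packages if p.runtime]
    return build, runtime


def render_multistage(packages: list[Package], build_base: str,
                      runtime_base: str, artifact: str) -> str:
    """Render a two-stage Dockerfile skeleton (hypothetical image names and build step)."""
    build, runtime = split_packages(packages)
    return "\n".join([
        f"FROM {build_base} AS build",
        f"# build stage installs: {' '.join(build)}",
        "WORKDIR /src",
        "COPY . .",
        "RUN make",  # placeholder build command
        "",
        f"FROM {runtime_base}",
        f"# runtime base must provide: {' '.join(runtime)}",
        f"COPY --from=build /src/{artifact} /app/{artifact}",
        f'ENTRYPOINT ["/app/{artifact}"]',
    ])


if __name__ == "__main__":
    pkgs = [
        Package("gcc", runtime=False),
        Package("make", runtime=False),
        Package("libssl", runtime=True),
        Package("ca-certificates", runtime=True),
    ]
    print(render_multistage(pkgs, "example/hardened-build:latest",
                            "example/hardened-runtime:latest", "server"))
```

Note that the runtime stage installs nothing itself: it only copies the built artifact, so anything it needs must already be in the hardened base or be added through a customization path.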

Hardened image adoption can depend on trust as much as on technical merit. Organizations trust hardened image providers who demonstrate knowledge of the open source projects they're securing. Docker has maintained close relationships with the open source projects behind each of the more than 70 official images listed on Docker Hub, which signals a long-term commitment that goes beyond security theater. The best hardened image design processes are dialogues that include project stakeholders and benefit from their insights and experience. The upshot? Platform teams need to talk to their developer and DevOps customers to understand which software is critical, and to their hardened image provider to understand its ties to, and active involvement with, the upstream communities. A successful hardened image rollout must navigate these realities and acknowledge all the invested parties.

The Happy Medium: Secure Defaults, Controlled Flexibility, Community Cred

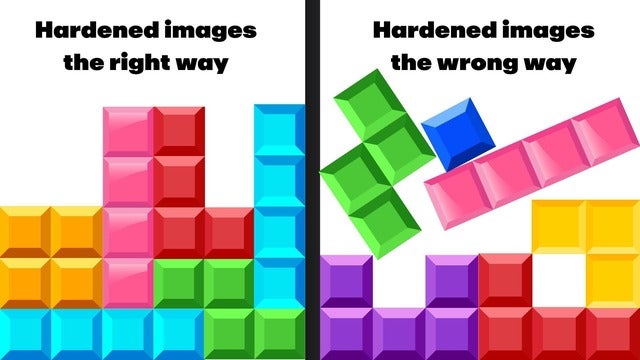

Effective container security resembles building with Lego blocks rather than erecting security monoliths. Lego kits come with a base design but are also easy to modify while maintaining structural integrity. Monoliths may appear more solid and substantial, but they are hard to modify, and their strongly opinionated view of the world is bound to cramp someone's style.

Auditable customization paths maintain security posture while accommodating reality. When developers can add packages through controlled processes that log changes and validate security implications, both security and productivity goals get met. The secret lies in making the secure path the easy path rather than trying to eliminate all alternatives. At the foundational level, this requires solutions that integrate with existing practices rather than replacing them wholesale.
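A minimal sketch of such a controlled process, assuming a deliberately simple policy: an approved list, a blocked list, and human review for everything else. The package names, the `APPROVED`/`BLOCKED` sets and the `request_package` helper are hypothetical; a real platform would validate requests against vulnerability data and image policy rather than hard-coded sets. What it illustrates is that every request leaves an audit record, and the common case needs no ticket.

```python
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical policy maintained by the platform team.
APPROVED = {"curl", "ca-certificates", "tzdata"}
BLOCKED = {"telnetd"}


@dataclass
class AuditLog:
    entries: list[str] = field(default_factory=list)

    def record(self, **event: str) -> None:
        """Append one JSON line per decision, stamped with the current UTC time."""
        event["timestamp"] = datetime.now(timezone.utc).isoformat()
        self.entries.append(json.dumps(event, sort_keys=True))


def request_package(image: str, package: str, requester: str, log: AuditLog) -> str:
    """Decide on a self-service package request and log the outcome either way."""
    if package in BLOCKED:
        decision = "rejected"
    elif package in APPROVED:
        decision = "approved"      # easy path: no ticket, no waiting
    else:
        decision = "needs_review"  # unknown package: routed to a human, still logged
    log.record(image=image, package=package, requester=requester, decision=decision)
    return decision


if __name__ == "__main__":
    log = AuditLog()
    for pkg in ("curl", "telnetd", "strace"):
        print(pkg, request_package("payments-api", pkg, "dev-a", log))
    print("\n".join(log.entries))
```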

Success metrics need to include coverage and adoption alongside traditional hardening measurements. A hardened image strategy that achieves 95% team adoption with 80% attack surface reduction delivers better organizational security than one that achieves 99% hardening but only gets used by 30% of applications. Platform teams that understand this math are far more likely to succeed in driving hardened image adoption.
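The 95%/80% versus 99%/30% comparison can be worked through under a deliberately simple model, which is an assumption rather than part of the figures themselves: every application counts equally, applications that don't adopt the hardened image get no reduction, and "99% hardening" is read as a 99% attack surface reduction. Organization-wide reduction $R$ is then approximated as adoption share $a$ times per-image reduction $r$.

$$
\begin{aligned}
R &\approx a \times r \\
R_{\text{broad}} &= 0.95 \times 0.80 = 0.76 \\
R_{\text{narrow}} &= 0.30 \times 0.99 = 0.297
\end{aligned}
$$

Under this model the broadly adopted strategy removes roughly 76% of the organization's attack surface, versus roughly 30% for the narrowly adopted one.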

Beyond the Binary: A New Security Paradigm

The bottom line? Really good security deployed everywhere beats perfect security deployed sporadically because security is a system property, not a component property. The weakest link determines overall posture. An organization with consistent, reasonable security practices across all applications faces lower aggregate risk than one with perfect security on some applications and no security on others.

The path forward is to design hardened image processes that acknowledge developer reality and involve the upstream community, improving security outcomes through broad adoption and minimal disruption. This means creating migration paths that feel achievable rather than overwhelming, providing automation to smooth the path, and delivering self-service options instead of more Jira-ticket Bingo. Every organization may be a snowflake, but that doesn't make security impossible. It just means hardened image solutions need to be as adaptable as the environments they're protecting.

Source: https://blog.docker.com/feed/