FabCon Vienna: Build data-rich agents on an enterprise-ready foundation

Welcome everyone to the second annual European Microsoft Fabric Community Conference this week in the vibrant city of Vienna, Austria! With more than 130 sessions and 10 full-day workshops, this year’s sold-out European event is bigger than ever, and there’s no shortage of incredible learning experiences. More than 4,200 attendees will get to test their driving skills on a high-octane racing simulator powered by Fabric Real-Time Intelligence, ask their questions directly at expert-staffed booths, compete for a chance to be crowned the DataViz World Champion, and celebrate Microsoft Power BI’s tenth anniversary.

This event is an opportunity to dive much deeper into Microsoft Fabric, which has become the fastest-growing data platform in Microsoft’s history.¹ In less than two years, we’ve expanded Microsoft Fabric into a complete data and analytics platform that spans everything from analytics to databases to real-time insights. It now has more than 25,000 customers, including about 80% of the Fortune 500.

Microsoft is investing heavily in Fabric, and I’m thrilled to share a new slate of announcements that further advance Fabric’s vision as the most comprehensive, enterprise-grade data platform on the planet. These announcements include new OneLake shortcut and mirroring sources, a brand-new Graph database that lets you connect entities across OneLake, new geospatial capabilities with Maps in Fabric, improved developer experiences, and new security controls. Together, they give you what you need to run your mission-critical scenarios on Fabric.

Unify your data with OneLake, the AI-ready data foundation

Any successful AI or data project starts with the right data foundation. Organizations like Lumen, IFS, NTT Data, and the Chalhoub Group have all adopted Microsoft OneLake as the unified access point for their data. Lumen—a leader in enterprise connectivity—cut 10,000 hours of manual effort with OneLake. “We used to spend up to six hours a day copying data into SQL servers,” says Chad Hollingsworth, Cloud Architect at Lumen. “Now it’s all streamlined. OneLake allowed us to ingest once and use anywhere.”

With mirroring and OneLake shortcuts, we’ve simplified how you connect to and transform your data. Their zero-copy, zero-ETL approach lets you instantly connect to any data, no matter the cloud, database, vendor, engine, or format. In addition to the recent announcement of mirroring for Azure Databricks, we are thrilled to announce the preview of mirroring for Oracle and Google BigQuery, so you can access your Oracle and BigQuery data in OneLake in near real time. We are also extending Fabric data agents to support all mirrored databases, so you can ask questions about the data in your external databases. Additionally, we are announcing the general availability of OneLake shortcuts to Azure Blob Storage. We are also previewing OneLake shortcut transformations, which automatically convert JSON and Parquet files to Delta tables for instant analysis. Finally, we are releasing the OneLake integration with Azure AI Search into general availability, making it easy to ground your custom agents in OneLake data.

https://www.youtube-nocookie.com/embed/vgi5yb7KlxY?rel=0&v=vgi5yb7KlxY

Once your data is in OneLake, the OneLake catalog provides the tools to discover, govern, and secure it from a single place. With more than 30 million monthly active Power BI and Fabric users, the catalog is already the default source of data and insights. We are also launching OneLake security into full preview and adding a new Secure tab to the OneLake catalog, where you can manage security and permissions for all your data items. Alongside this new tab, we are releasing the OneLake catalog Govern tab into general availability.

https://www.youtube-nocookie.com/embed/UiFm5AjKXHQ?rel=0&v=UiFm5AjKXHQ

We are also excited to enrich our extensibility story with the preview of a new OneLake Table API. Apps can use its GET and LIST calls to discover and inspect OneLake tables stored in either Iceberg or Delta format, subject to Fabric’s security model. Finally, for workspace owners, we are releasing a preview of OneLake diagnostics. It lets you capture all data activity and storage operations for a specific workspace into any lakehouse in the same capacity.

Train smarter agents with connected intelligence from graph and maps in Fabric

The first step in any agentic project is data. You need to bring your data together and make sure your data estate can handle the volume of data used in training. But sophisticated AI agents require more than huge quantities of data. To provide accurate answers grounded in your business, they first need to understand the relationships within your data. They need to understand your business operations. They need context.

We believe this is the next major shift now required for a modern AI-ready data estate. You can learn more about this shift and our vision in Jessica Hawk’s blog, “Microsoft leads shift beyond data unification to organization, delivering next gen AI readiness.” To help you provide this context to your agents or any other data project, we are excited to announce the preview of two transformative new features in Fabric: Graph and Maps.

Model, analyze, and visualize complex data relationships

Graph in Fabric is designed to help organizations visualize and query the relationships that drive business outcomes. It is built on the proven architectural principles of LinkedIn’s graph technology and can help you reveal connections across customers, partners, and supply chains. But, like your data, graph is easier to explain visually:

https://www.youtube-nocookie.com/embed/TFrAAdRdyVc?rel=0&v=TFrAAdRdyVc

“Graph in Microsoft Fabric is a game changer. The highly scalable graph engine coupled with Fabric’s ease of use is a uniquely powerful combination.”

—Luke Hiester, Senior Data Scientist, Eastman Chemical Company

Graph will roll out in various Fabric regions starting on October 1, 2025.

Visualize, analyze, and act on location-based data instantly

Maps in Fabric can help you bring geospatial context to your agents and operations by transforming enormous volumes of location-based data into interactive, real-time visualizations that drive location-aware decisions and enhance business awareness. Check out a full demo of the new experience:

https://www.youtube-nocookie.com/embed/zdZOrYR049E?rel=0&v=zdZOrYR049E

By combining streaming analytics, geospatial mapping, and contextual modeling, maps can help you extract location-based insights for your existing business processes to drive better awareness and outcomes.

You can learn more about graph and maps in Yitzhak Kesselman’s “The Foundation for Powering AI-Driven Operations: Fabric Real-Time Intelligence” blog.

Delighting developers with new tools in Fabric

Power BI is a leader in business intelligence for developers, with more than 7 million developers actively building data visuals. Now, Microsoft Fabric is quickly becoming the home for all data developers. To help developers feel even more at home, we’re adding a wide range of new tooling across Fabric.

First, we’ve released the Fabric Extensibility Toolkit into preview. The toolkit is an evolution of the Microsoft Fabric Workload Development Kit. It is designed to help any developer bring data apps to Fabric for their own organization, and its simplified architecture and additional automation drastically streamline development. Developers can now simply build their own Fabric items, and Fabric takes care of everything else, such as distribution, user interface, and security. Try it today.

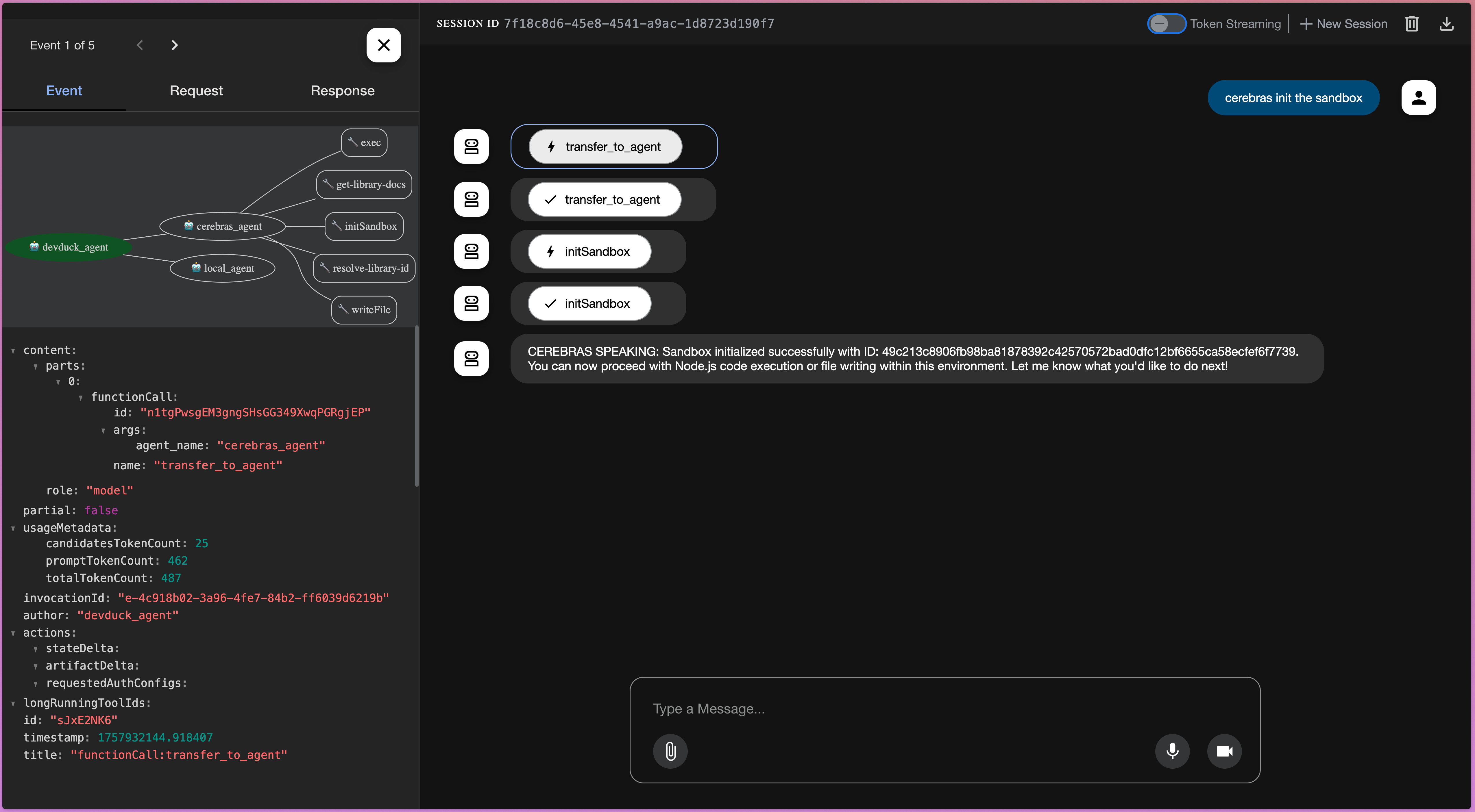

We’re also introducing the preview of Fabric MCP, a developer-focused Model Context Protocol (MCP) server that enables AI-assisted code generation and item authoring in Microsoft Fabric. It is designed for agent-powered development and automation, and its built-in templates and best-practice instructions streamline building with Fabric’s public APIs. It also integrates with tools like Microsoft Visual Studio Code and GitHub Codespaces and is fully open and extensible.

Git integration and deployment pipelines are now generally available for lakehouses, data warehouses, copy jobs, Activator, Power BI reports, and many more items. As a result, you can apply continuous integration and continuous delivery (CI/CD) across the Fabric platform. We are even extending CI/CD support to Fabric data agents. We are also releasing User Data Functions and the Fabric VS Code extension into general availability, along with an open-source version of the Fabric command-line interface (CLI).

Finally, we are also releasing horizontal tabs for open items, support for multiple active workspaces, and a new object explorer—all designed to make multitasking in Fabric smoother, faster, and more intuitive.

Build your mission-critical scenarios on Microsoft Fabric

Fabric has comprehensive, built-in tools for network security, data security, and governance, enabling any organization to effectively manage and govern its data. A detailed overview of all existing capabilities is available in the Fabric Security Whitepaper.

Now, we are thrilled to announce significant additions across security, capacity management, performance, and migration. All of them further cement Fabric as the ideal data platform for every AI and mission-critical scenario. Frontier firms implementing AI need more than next-generation AI tools. They need a comprehensive, cost-effective data platform to support their projects with end-to-end data protection, integration with developer tools, and performance that can scale to any need. Microsoft Fabric has both the leading generative AI capabilities and the enterprise-ready foundation to truly foster an AI-powered data culture.

Connect securely to even the most sensitive data

First, we are providing additional safeguards to help you secure data connections and precisely control the level of isolation you need in each workspace. We are excited to announce the general availability of Azure Private Link in Fabric and of outbound access protection for Spark. The preview of workspace IP filtering will follow soon, and all three work at the workspace level. Additionally, we are expanding mirroring to support on-premises data sources and data sources behind firewalls. Finally, we are excited to announce the general availability of customer-managed keys for Fabric workspaces.

More granular capacity management

Gaining control over the jobs running on your Fabric capacities is critical to any mission-critical scenario. To give you this control, we are announcing the general availability of surge protection for background jobs and the preview of surge protection for workspaces. With surge protection, you can set limits on background activity consumption and, now, on the activity of specific workspaces. These limits help protect your capacities from unexpected surges. Learn more.
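The following is a hypothetical Python toy model of the general idea behind a consumption limit: admission control for background jobs. It assumes two percentage settings on capacity utilization, a rejection threshold and a lower recovery threshold. The class name, parameter names, and logic are illustrative assumptions, not Fabric’s implementation or configuration API. In Fabric itself, you configure surge protection through the product’s settings rather than in code.

```python
from dataclasses import dataclass


@dataclass
class BackgroundSurgeGuard:
    """Hypothetical toy model of a background-job surge guard (not Fabric's logic).

    rejection_threshold: utilization % at or above which new background jobs
        are rejected (illustrative parameter name).
    recovery_threshold: utilization % below which background jobs are admitted
        again (illustrative parameter name). Keeping it lower than the
        rejection threshold adds hysteresis, so the guard does not flap.
    """

    rejection_threshold: float
    recovery_threshold: float
    rejecting: bool = False

    def __post_init__(self) -> None:
        if not 0 <= self.recovery_threshold < self.rejection_threshold <= 100:
            raise ValueError("expected 0 <= recovery < rejection <= 100")

    def admit(self, background_utilization: float) -> bool:
        """Return True if a new background job should be admitted."""
        if self.rejecting:
            # Stay in rejecting mode until utilization drops below recovery.
            if background_utilization < self.recovery_threshold:
                self.rejecting = False
        elif background_utilization >= self.rejection_threshold:
            # Utilization crossed the cap: start rejecting background jobs.
            self.rejecting = True
        return not self.rejecting


# Usage: feed a sequence of utilization readings (percent of capacity).
guard = BackgroundSurgeGuard(rejection_threshold=80, recovery_threshold=60)
decisions = [guard.admit(u) for u in [50, 85, 70, 55, 65]]
print(decisions)  # [True, False, False, True, True]
```

The reading of 70 is still rejected because the guard waits for utilization to fall below the recovery threshold. A single cutoff would instead admit and reject jobs in quick alternation while utilization hovers near it.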

Enhanced Fabric Data Warehouse performance

Fabric is engineered to handle massive data volumes with exceptional performance across its analytics engines, and we’re continuously enhancing their efficiency. Since August 2024, we’ve released 40 performance improvements to Fabric Data Warehouse, driven by your feedback. Together they deliver a 36% performance improvement on industry-standard benchmarks. Try it today.

Seamlessly migrate your Synapse data to Fabric

We are also excited to announce the general availability of an end-to-end migration experience built natively into Fabric, enabling Azure Synapse Analytics (data warehouse) customers to transition seamlessly to Microsoft Fabric. The migration experience lets you migrate both metadata and data from Synapse Analytics. It includes an intelligent assessment, guided support, and AI-powered assistance to minimize migration effort.

Extend Fabric with partner-created workloads and seamless integration with Snowflake

We are excited to announce the general availability of new partner solutions native to Microsoft Fabric from ESRI, Lumel, and Neo4j. ESRI’s advanced geospatial analytics, Lumel’s vibrant business intelligence insights, and Neo4j’s graph analytics are all just a click away in the Fabric workload hub. In addition, several new partners are announcing capabilities built on Microsoft Fabric. You can learn more by reading the FabCon Vienna partner blog.

In May of 2024, we announced an expanded partnership with Snowflake—committing both our platforms to provide seamless bi-directional integration and enable customers with the flexibility to do what makes sense for their business. Since then, we’ve expanded interoperability between Snowflake and Microsoft OneLake including the ability to write Snowflake tables to OneLake, the ability to use OneLake shortcuts to access Snowflake tables, the ability to read OneLake tables directly from Snowflake, and full support for Apache Iceberg format in OneLake. Now, we are releasing new Iceberg REST Catalog APIs that allow Snowflake to read Iceberg tables from OneLake, keeping OneLake tables automatically in sync. You can learn more about this new announcement and our partnership by reading the Microsoft OneLake and Snowflake interoperability blog.
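The following Python sketch shows what Iceberg REST Catalog requests look like. It builds request URLs and parses a list-tables response according to the open Apache Iceberg REST Catalog specification. It assumes a hypothetical catalog base URL and optional path prefix, omits authentication, and makes no network calls. The actual OneLake endpoint, prefix, and credentials are not described here, so treat every name below as illustrative.

```python
from __future__ import annotations

import json
from urllib.parse import quote

# Iceberg REST spec: multipart namespaces are joined with the 0x1F unit separator.
NAMESPACE_SEPARATOR = "\x1f"


def _encode_namespace(parts: list[str]) -> str:
    # Join namespace levels with 0x1F, then percent-encode (0x1F becomes %1F).
    return quote(NAMESPACE_SEPARATOR.join(parts), safe="")


def list_tables_url(base_url: str, namespace: list[str], prefix: str = "") -> str:
    """URL for GET /v1/{prefix}/namespaces/{namespace}/tables (list tables)."""
    root = base_url.rstrip("/") + "/v1"
    if prefix:  # The prefix is optional and is normally returned by GET /v1/config.
        root += "/" + quote(prefix, safe="")
    return f"{root}/namespaces/{_encode_namespace(namespace)}/tables"


def load_table_url(base_url: str, namespace: list[str], table: str, prefix: str = "") -> str:
    """URL for GET /v1/{prefix}/namespaces/{namespace}/tables/{table} (load table)."""
    return list_tables_url(base_url, namespace, prefix) + "/" + quote(table, safe="")


def parse_list_tables(body: str) -> tuple[list[str], str | None]:
    """Return dotted table names and the next-page token from a list-tables response."""
    payload = json.loads(body)
    names = [
        ".".join(ident["namespace"] + [ident["name"]])
        for ident in payload.get("identifiers", [])
    ]
    return names, payload.get("next-page-token")


# Hypothetical catalog base URL, for illustration only.
print(list_tables_url("https://catalog.example.com/iceberg", ["sales", "emea"]))
# https://catalog.example.com/iceberg/v1/namespaces/sales%1Femea/tables
```

A real client would send a GET request with suitable credentials to the list URL. While `next-page-token` is non-null, it would pass the token back as the `pageToken` query parameter to fetch the next page. It would then call the load-table URL to retrieve each table’s metadata location.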

See more Microsoft Fabric innovation

In addition to the announcements above, we are excited to share a huge slate of other innovations coming to Fabric, including enhancements to SQL databases in Fabric, the preview of Runtime 2.0, the preview of AI functions in Data Wrangler, the general availability of editing semantic models in the Power BI service, and so much more.

You can learn more about these announcements and everything else by reading the Fabric September 2025 Feature summary blog, the Power BI September feature summary blog, or by exploring the latest blogs on the Fabric Updates channel.

Join us at FabCon Atlanta and Microsoft Ignite

Already excited about the next FabCon? Join us in Atlanta, Georgia, from March 16 to 20, 2026, for even more in-depth sessions, cutting-edge demos and announcements, community networking, and everything else you love about FabCon. Register today and use code MSCATL for a $200 discount on top of current Early Access pricing!

In the meantime, you can join us at Microsoft Ignite this year from November 18 to 21, 2025, either in person in San Francisco or online to see even more innovation coming to Fabric and the rest of Microsoft. You’ll see firsthand the latest solutions and capabilities across all of Microsoft and connect with experts who can help you bolster your knowledge, build connections, and explore emerging technologies.

Explore additional resources for Microsoft Fabric

Sign up for the Fabric free trial.

View the updated Fabric Roadmap.

Try the Microsoft Fabric SKU Estimator.

Visit the Fabric website.

Join the Fabric community.

Read other in-depth, technical blogs on the Microsoft Fabric Updates Blog.

Sign up now for our upcoming ask the Fabric expert sessions

Join us on September 24, 2025, for the “Ask the Experts—Microsoft OneLake” webinar where experts from our OneLake team will join to answer all your questions live.

Get certified in Microsoft Fabric

Join the thousands of Fabric users who have collectively earned more than 50,000 certifications for the Fabric Analytics Engineer and Fabric Data Engineer roles. To celebrate FabCon Vienna, we are offering the entire Fabric community a 50% discount on exams DP-600, DP-700, DP-900, and PL-300. Request your voucher.

Join the FabCon Global Hackathon

Build real-world data and AI solutions that push the boundaries of what’s possible with Microsoft Fabric. Join the hackathon to compete for prizes of up to $10,000.

Read additional blogs by industry-leading partners

How AI-native data platforms are redefining business by PricewaterhouseCoopers LLP.

Your Operational Data Just Became Your Smartest Business Agent by iLink Digital.

From OLTP to AI: Choosing Your Microsoft Fabric Database Engine by Lumel Technologies.

Building scalable e-commerce product recommendations with Microsoft Fabric SQL by MAQ Software.

Things You Should Know About MCP in Microsoft Fabric by Nimble Learn.

How to Estimate Microsoft Fabric Costs: Capacity Planning Strategies for AI Workloads by JourneyTeam.

How Microsoft Fabric Modernized & Transformed ERP Data Analytics by Bizmetric.

Unlocking the full value of Data as a Product with Microsoft Fabric and Purview by Thoughtworks.

The post FabCon Vienna: Build data-rich agents on an enterprise-ready foundation appeared first on Microsoft Azure Blog.

Source: Azure