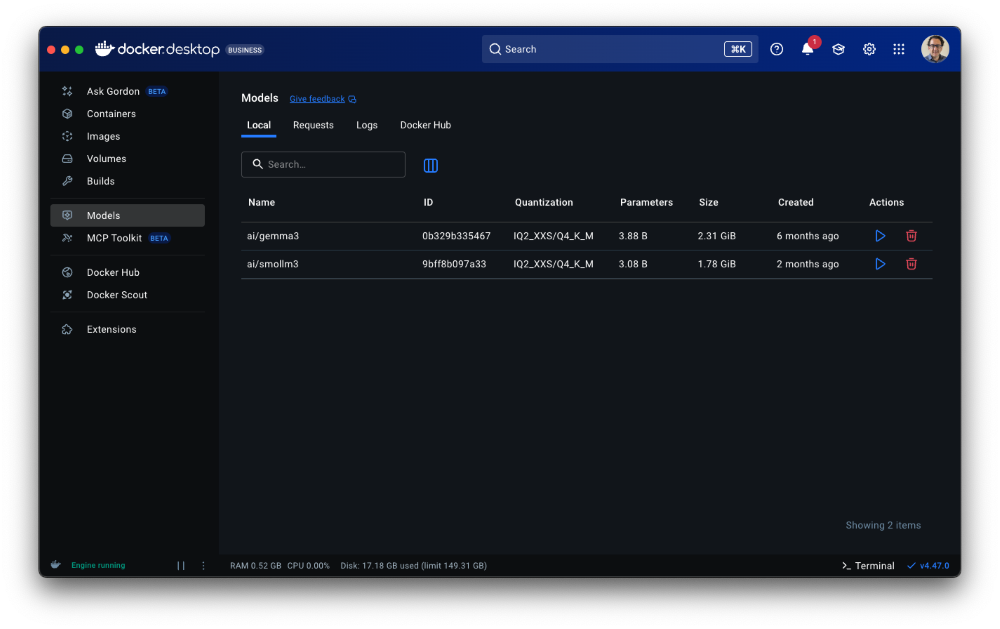

cagent is a new open-source project from Docker that makes it simple to build, run, and share AI agents, without writing a single line of code. Instead of writing code and wrangling Python versions and dependencies when creating AI agents, you define your agent’s behavior, tools, and persona in a single YAML file, making it incredibly straightforward to create and share personalized AI assistants.

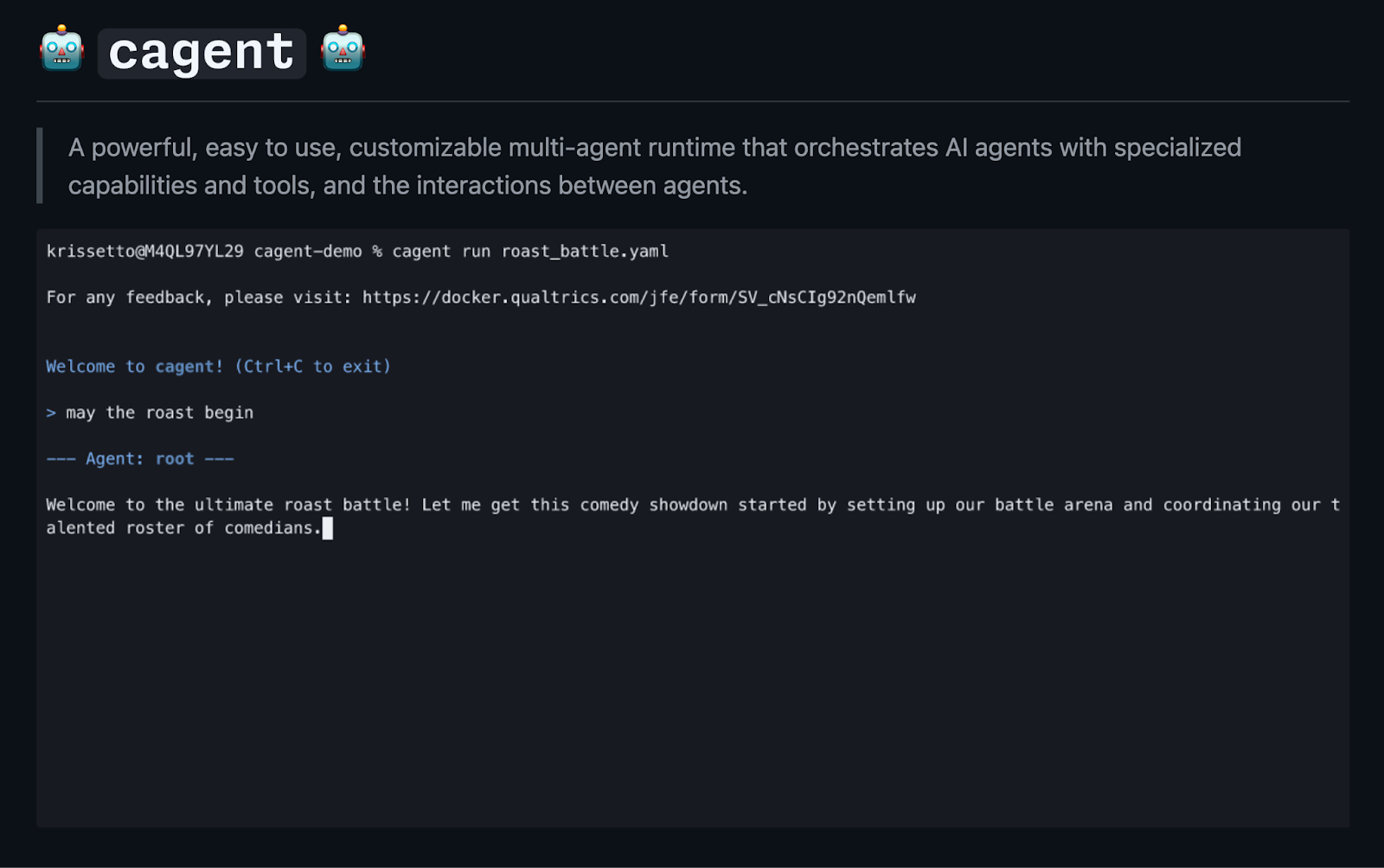

Figure 1: cagent is a powerful, easy-to-use, customizable multi-agent runtime that orchestrates AI agents with specialized capabilities and tools, and the interactions between agents.

cagent can use OCI registries to share and pull agents created by the community, so it elegantly solves not only the agent creation problem but also the agent distribution problem.

Let’s dive into what makes cagent special and explore some real-world use cases.

What is cagent?

At its core, cagent is a command-line utility that runs AI agents defined in cagent.yaml files. The philosophy is simple: declare what you want your agent to do, and cagent handles the rest.

Here are a few features you’ll probably appreciate when authoring your agents.

Declarative and Simple: Define models, instructions, and agent behavior in one YAML file. This “single artifact” approach makes agents portable, easy to version, and easy to share.

Flexible Model Support: You’re not tied to a specific provider. You can run remote models or even local ones using Docker Model Runner, which is ideal when privacy matters.

Powerful Tool Integration: cagent includes built-in tools for common tasks (like shell commands or filesystem access) and supports external tools via MCP, enabling agents to connect to virtually any API.

Multi-Agent Systems: You’re also not limited to a single agent. cagent lets you define a team of agents that can collaborate and delegate tasks to one another, with each agent having its own specialized skills and tools.
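To make the multi-agent idea concrete, here is a minimal sketch of a two-agent team, written to a file from the shell. The agent names root and writer, their instructions, and especially the sub_agents key (used here to list the agents root may delegate to) are assumptions for illustration; check the cagent documentation for the exact schema. The remaining keys mirror the configurations shown later in this article.

```shell
#!/usr/bin/env bash
# Write a hypothetical two-agent team to team.yaml.
# Assumption: the `sub_agents` key lists the agents that `root` can delegate to.
# The quoted 'EOF' delimiter writes the YAML verbatim (no variable expansion).
cat > team.yaml <<'EOF'
version: "2"

agents:
  root:
    model: anthropic/claude-sonnet-4-0
    description: Coordinator that delegates writing tasks
    instruction: |
      Break the user's request into steps and delegate drafting to the writer agent.
    sub_agents: [writer]

  writer:
    model: anthropic/claude-sonnet-4-0
    description: Writes short technical drafts
    instruction: |
      Write concise drafts for the topics the coordinator sends you.
EOF
```

You would then start the team with cagent run team.yaml, just like the single-agent examples below.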

Practical use cases for cagent

I’ve been using cagent for a few weeks now, and in this article I want to share two practical agents that I actually use.

A GitHub Task Tracker

Let’s start with a practical, developer-centric example. While tracking GitHub issues with AI might not be revolutionary, it’s surprisingly useful and demonstrates cagent’s capabilities in a real-world workflow.

There’s no shortage of task tracking solutions to integrate with, but one of the most useful for developers is GitHub. We’ll use a repository in GitHub and issues on it as our to-do list. Does it have the best UX? It doesn’t actually matter; we’ll consume and create issues with AI, so the actual underlying UX is irrelevant.

I have a GitHub repo: github.com/shelajev/todo, which has issues enabled, and we’d like an agent that can, among other things, create issues, list issues, and close issues.

Figure 2

Here’s the YAML for a GitHub-based to-do list agent. I generated the agent’s instructions with the cagent new command, and then refined them by asking Gemini to make them shorter.

```yaml
version: "2"

models:
  gpt:
    provider: openai
    model: gpt-5
    max_tokens: 64000

agents:
  root:
    model: gpt
    description: "GitHub Issue Manager - An agent that connects to GitHub to use a repo as a todo-list"
    instruction: |
      You are a to-do list agent, and your purpose is to help users manage their tasks in their "todo" GitHub repository.

      # Primary Responsibilities
      - Connect to the user's "todo" GitHub repository and fetch their to-do items, which are GitHub issues.
      - Identify and present the to-do items for the current day.
      - Provide clear summaries of each to-do item, including its priority and any labels.
      - Help the user organize and prioritize their tasks.
      - Assist with managing to-do items, for example, by adding comments or marking them as complete.

      # Key Behaviors
      - Always start by stating the current date to provide context for the day's tasks.
      - Focus on open to-do items.
      - Use labels such as "urgent," "high priority," etc., to highlight important tasks.
      - Summarize to-do items with their title, number, and any relevant labels.
      - Proactively suggest which tasks to tackle first based on their labels and context.
      - Offer to help with actions like adding notes to or closing tasks.

      # User Interaction Flow
      When the user asks about their to-do list:
      1. List the open items from the "todo" repository.
      2. Highlight any urgent or high-priority tasks.
      3. Offer to provide more details on a specific task or to help manage the list.
    add_date: true
    toolsets:
      - type: mcp
        command: docker
        args: [mcp, gateway, run]
        tools:
          [
            "get_me",
            "add_issue_comment",
            "create_issue",
            "get_issue",
            "list_issues",
            "search_issues",
            "update_issue",
          ]
```

It’s a good example of a well-crafted prompt that defines the agent’s persona, responsibilities, and behavior, ensuring it acts predictably and helpfully. The best part is that editing and running it is fast and frictionless: just save the YAML and run:

```shell
cagent run github-todo.yaml
```

This development loop works without any IDE setup. I’ve done several iterations in Vim, all from the same terminal window where I was running the agent.

This agent also uses a streamlined tools configuration. A lot of examples show adding MCP servers from the Docker MCP toolkit like this:

```yaml
toolsets:
  - type: mcp
    ref: docker:github-official
```

This would run the GitHub MCP server from the MCP catalog, but as a separate “toolkit,” independent of the MCP Toolkit setup in your Docker Desktop.

Connecting to the MCP Toolkit with the manual command instead (docker mcp gateway run) makes it easy to use Docker Desktop’s OAuth login support.

Figure 3

Also, the official GitHub MCP server is awfully verbose. Powerful, but verbose. So, for the issue-related agents, it makes a lot of sense to limit the list of tools exposed to the agent:

```yaml
tools:
  [
    "get_me",
    "add_issue_comment",
    "create_issue",
    "get_issue",
    "list_issues",
    "search_issues",
    "update_issue",
  ]
```

I made that list by running:

```shell
docker mcp tools list | grep "issue"
```

and then asking AI to format the output as an array.
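If you’d rather skip the AI step, the formatting itself takes only a few lines of shell. This is a minimal sketch that assumes you have already reduced the filtered docker mcp tools list output to one tool name per line; that command’s exact output format isn’t shown here, so the extraction step is left out. The function name to_yaml_array is hypothetical.

```shell
#!/usr/bin/env bash
# to_yaml_array (hypothetical helper): read one tool name per line on stdin
# and print a YAML flow sequence in the same style as the tools list above.
to_yaml_array() {
  echo "["
  # The `|| [ -n "$name" ]` part keeps a final line that lacks a newline.
  while IFS= read -r name || [ -n "$name" ]; do
    # Skip blank lines; quote every name and keep the trailing comma,
    # matching the list above.
    if [ -n "$name" ]; then
      printf '  "%s",\n' "$name"
    fi
  done
  echo "]"
}

# Example input standing in for the filtered tool names.
printf '%s\n' get_issue list_issues update_issue | to_yaml_array
```

Pasting the result under tools: (indented to match) gives the same shape as the list above.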

This to-do agent is available on Docker Hub, so running it is a single command away:

```shell
cagent run docker.io/olegselajev241/github-todo:latest
```

Just enable the GitHub MCP server in the MCP Toolkit first and, well, make sure the repo exists.

The Advocu Captains Agent

At Docker, we use Advocu to track our Docker Captains: ambassadors who create content, speak at conferences, and engage with the community. Advocu stores their details and their contributions, such as blog posts, videos, and conference talks about Docker’s technologies.

Manually searching through Advocu is time-consuming. For a long time, I wondered: what if we could build an AI assistant to do it for us?

My first attempt was to build a custom MCP server for our Advocu instance: https://github.com/shelajev/mcp-advocu

It’s largely “vibe-coded”, but in a nutshell, running

```shell
docker run -i --rm -e ADVOCU_CLIENT_SECRET=your-secret-here olegselajev241/mcp-advocu:stdio
```

will run the MCP server with tools that expose information about Docker Captains, allowing MCP clients to search through their submitted activities.

Figure 4

However, sharing the actual agent, and especially the configuration required to run it, was a bit awkward.

cagent solved this for me in a much neater way. Here is the complete cagent.yaml for my Advocu agent:

```yaml
#!/usr/bin/env cagent run

version: "2"

agents:
  root:
    model: anthropic/claude-sonnet-4-0
    description: Agent to help with finding information on Docker Captains and their recent contributions to Docker
    toolsets:
      - type: mcp
        command: docker
        args:
          - run
          - -i
          - --rm
          - --env-file
          - ./.env
          - olegselajev241/mcp-advocu:stdio
    instruction: You have access to Advocu - a platform where Docker Captains log their contributions. You can use tools to query and process that information about captains themselves, and their activities like articles, videos, and conference sessions. You help the user to find relevant information and to connect to the captains by topic expertise, countries, and so on. And to have a hand on the pulse of their contributions, so you can summarize them or answer questions about activities and their content
```

With this file, we have a powerful, personalized assistant that can query Captain info, summarize their contributions, and find experts by topic. It’s a perfect example of how cagent can automate a specific internal workflow.

Users simply need to create a .env file with the appropriate secret. Even for less technical team members, I can give a shell one-liner to get them set up quickly.
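A one-liner along those lines might look like the following sketch. It writes the ADVOCU_CLIENT_SECRET variable (the same one passed with -e in the docker run command earlier) into ./.env, the file the agent’s YAML hands to docker run via --env-file. The value your-secret-here is a placeholder for the real secret.

```shell
#!/usr/bin/env bash
# Create ./.env for the Advocu agent; docker run reads it via --env-file.
# Replace the placeholder with the real Advocu client secret.
# umask 077 runs in a subshell so that, when .env is newly created, only the
# current user can read it; it does not change an existing file's permissions.
(umask 077 && printf 'ADVOCU_CLIENT_SECRET=%s\n' 'your-secret-here' > .env)
```

Since ./.env is a relative path, it’s safest to run cagent from the directory that holds both the YAML file and .env.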

Now, everyone at Docker can ask questions about Docker captains without pinging the person running the program (hi, Eva!) or digging through giant spreadsheets.

Figure 5

I’m also excited about the upcoming cagent 1Password integration, which will simplify the setup even more.

All in all, agents are really just a combination of:

- A system prompt
- An integration with a model (ideally, the most efficient one that gets the job done)
- The right tools via MCP

With cagent, it’s incredibly easy to manage all three in a clean, Docker-native way.

Get Started Today!

cagent empowers you to build your own fleet of AI assistants, tailored to your exact needs.

It’s a tool designed for developers who want to leverage the power of AI without getting bogged down in complexity.

You can get started right now by heading over to the cagent GitHub repository. Download the latest release and start building your first agent in minutes.

Give the repository a star, try it out, and let us know what amazing agents you build!

Source: https://blog.docker.com/feed/