These days, almost every tech company is looking for ways to integrate AI into their apps and workflows, and Docker is no exception. They’ve been rolling out some impressive AI capabilities across their products. This is my first post as a Docker Captain, and in it I want to shine a spotlight on a feature that, in my opinion, hasn’t gotten nearly enough attention: Docker’s AI Agent Gordon (also known as Docker AI), which is built into Docker Desktop and the CLI.

Gordon is really helpful when it comes to containerizing applications. Not only does it help you understand how to package your app as a container, but it also reduces the overhead of figuring out dependencies, runtime configs, and other pieces that add to a developer’s daily cognitive load. The best part? Gordon doesn’t just guide you with responses; it can also generate or update the necessary files for you.

The Problem: Containerizing apps and optimizing containers isn’t always easy

Containerizing apps can range from super simple to a bit tricky, depending on what you’re working with. If your app has a single runtime like Node.js, Python, or .NET Core, with clearly defined dependencies and no external services, it will be straightforward.

A basic Dockerfile will usually get you up and running without much effort. But once you start adding more complexity, like a backend, frontend, database, and caching layer, you need a multi-container app. At this point, you might be dealing with additional Dockerfile configurations and potentially a Docker Compose setup. That’s where getting started can become challenging.
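
To make the simple case concrete, here is a minimal sketch of what a basic Dockerfile for a single-runtime app might look like. It is not output from Gordon. It assumes a Node.js app with a package.json and package-lock.json, a hypothetical entry point named server.js, and a hypothetical port of 3000; adjust all of these to your own project.

```dockerfile
# Minimal sketch for a single-runtime Node.js app.
# The entry point (server.js) and port (3000) are assumptions for illustration.
FROM node:22-alpine

WORKDIR /app

# Copy the manifests first so the dependency layer is cached between builds
COPY package*.json ./
RUN npm ci

# Copy the rest of the application source
COPY . .

# Hypothetical port and entry point; change these to match your app
EXPOSE 3000
CMD ["node", "server.js"]
```

Copying the manifests before the source code means a code-only change does not trigger a fresh dependency install on the next build.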

This is where Gordon shines. It helps you containerize apps and can even handle multi-service setups, guiding you through what’s needed and generating the supporting config files, such as Dockerfiles and Docker Compose files, to get you going.

Optimizing containers can be a headache too

Beyond just containerizing, there’s also the need to optimize your containers for performance, security, and image size. And let’s face it, optimizing can be tedious. You need to know what base images to use, how to slim them down, how to avoid unnecessary layers, and more.

Gordon can help here too. It provides optimization suggestions, shows you how to apply best practices like multi-stage builds or removing dev dependencies, and helps you create leaner, more secure images.
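
As an illustration of the practices mentioned above, the following is a hedged sketch of a multi-stage build that leaves dev dependencies out of the final image. It is not something Gordon generated for this post. It assumes a Node.js project whose package.json defines a "build" script that writes to dist/, and a hypothetical entry point at dist/server.js.

```dockerfile
# Stage 1: install all dependencies (including dev) and build the app
FROM node:22-alpine AS build
WORKDIR /app
COPY package*.json ./
RUN npm ci
COPY . .
# Assumes package.json defines a "build" script that outputs to dist/
RUN npm run build

# Stage 2: lean runtime image with production dependencies only
FROM node:22-alpine
WORKDIR /app
ENV NODE_ENV=production
COPY package*.json ./
# Skip devDependencies in the final image
RUN npm ci --omit=dev
# Bring over only the build output from the first stage
COPY --from=build /app/dist ./dist
# Run as the unprivileged "node" user provided by the official image
USER node
# Hypothetical entry point; change to match your build output
CMD ["node", "dist/server.js"]
```

Build tools and dev dependencies stay in the first stage, so the final image carries only what the app needs at runtime.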

Why not just use general-purpose Generative AI?

Sure, general-purpose AI tools like ChatGPT, Claude, Gemini, etc. are great and I use them regularly. But when it comes to containers, they can lack the context needed for accurate and efficient help. Gordon, on the other hand, is purpose-built for Docker. It has access to Docker’s ecosystem and has been trained on Docker documentation, best practices, and the nuances of Docker tooling. That means its recommendations are more likely to be precise and aligned with the latest standards.

Walkthrough of Gordon

Gordon can help with containerizing applications, optimizing your containers, and more. Gordon is still a Beta feature. To start using it, you need Docker Desktop version 4.38 or later. Gordon is powered by Large Language Models (LLMs), and it goes beyond prompt and response: as an AI agent, it can perform certain tasks for you. Gordon can access your local files and local images when you give it permission, and it will prompt you for access if a task needs it.

Please note, the examples I will show in this post are based on a single working session. Now, let’s dive in and start to explore Gordon.

Enabling Gordon / Docker AI

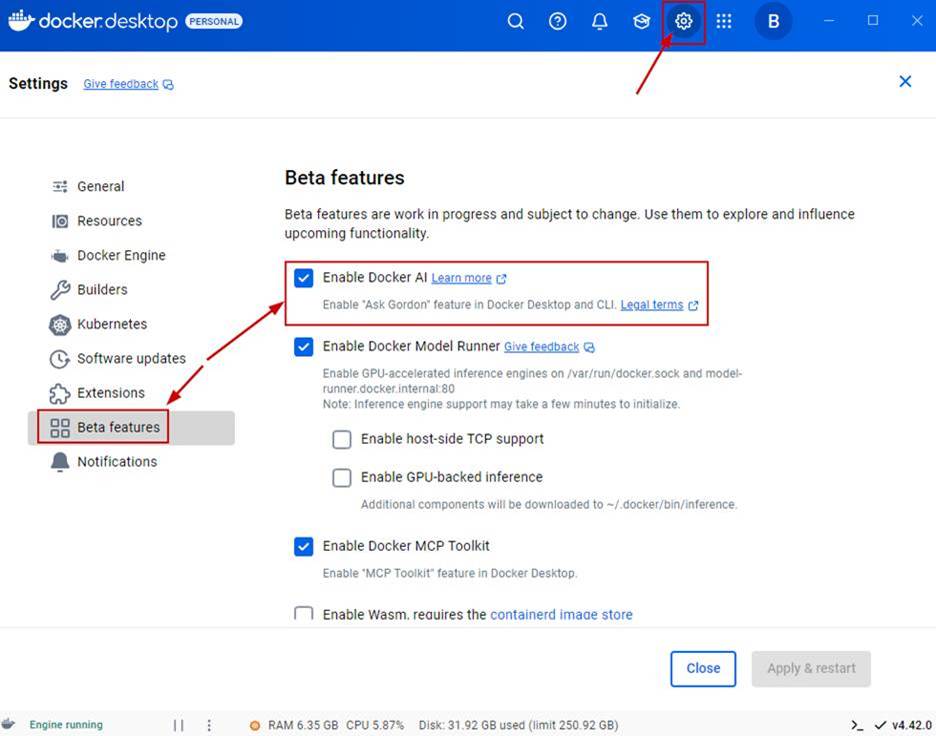

To turn Gordon on, go to Settings > Beta features and check the Enable Docker AI box, as shown in the following screenshot.

Figure 1: Screenshot of where to enable Docker AI in beta features

Accept the terms. The AI in Docker Desktop comes in two forms. The first is available through the Docker Desktop UI and is known as Gordon. The second is Docker AI, which you access through the Docker CLI by typing “docker ai”. I will demonstrate this later in this post.

Figure 2: Screenshot of Docker AI terms acceptance dialog box

Exploring Gordon in Docker Desktop

Now Gordon will appear in your Docker Desktop UI. Here you can prompt it just like any Generative AI tool. Gordon will also have examples that you can use to get started working with it.

You can access Gordon throughout Docker Desktop by clicking on the AI icon as shown in the following screenshot.

Figure 3: Screenshot of Docker Desktop interface showing the AI icon for Gordon

When you click on the AI icon, a Gordon prompt box appears along with suggested prompts, as shown in the following screenshot. The suggestions are context-aware: they change based on the object the AI icon is next to.

Figure 4: Screenshot showing Gordon’s suggestion prompt box in Docker Desktop UI

Here is another example of Docker AI suggestions being context-aware based on what area of Docker Desktop you are in.

Figure 5: Screenshot showing Docker AI context-specific suggestions

Another common use case for Gordon is listing local images and using AI to work with them. You can see this in the following set of screenshots. Notice that Gordon will prompt you for permission before showing your local images.

Figure 6: Screenshot showing Gordon referencing local images

You can also prompt Gordon to take action. As shown in the following screenshot, I asked Gordon to run one of my images.

Figure 7: Screenshot showing Gordon prompts

If it can’t perform the action, it will attempt to help you.

Figure 8: Screenshot showing Gordon prompt response to failed request

Another cool use of Gordon is to explain a container image to you. When you ask this, Gordon will ask you to select the directory where the Dockerfile is and for permission to access it, as shown in the following screenshot.

Figure 9: Screenshot showing Gordon’s request for particular directory access

After you give it access to the directory where the Dockerfile is, it will then break down what’s in the Dockerfile.

Figure 10: Screenshot showing Gordon’s response to explaining a Dockerfile

I followed up with a prompt asking Gordon to display what’s in the Dockerfile. It did a good job of explaining its contents, as shown in the following screenshot.

Figure 11: Screenshot showing Gordon’s response regarding Dockerfile contents

Exploring Gordon in the Docker Desktop CLI

Let’s take a quick tour through Gordon in the CLI. Gordon is referred to as Docker AI in the CLI. To work with Docker AI, you need to launch the Docker CLI as shown in the following screenshot.

Figure 12: Screenshot showing how to launch Docker AI from the CLI

Once in the CLI you can type “docker ai” and it will bring you into the chat experience so you can prompt Gordon. In my example, I asked Gordon about one of my local images. You can see that it asked me for permission.

Figure 13: Screenshot showing Docker CLI request for access

Next, I asked Docker AI to list all of my local images as shown in the following screenshot.

Figure 14: Screenshot showing Docker CLI response to display local images

I then tested pulling an image using Docker AI. As you can see in the following screenshot, Gordon pulled a Node.js image for me!

Figure 15: Screenshot showing Docker CLI pulling Node.js image

Containerizing an application with Gordon

Now let’s explore the experience of containerizing an application using Gordon.

I started by clicking on the example for containerizing an application. Gordon then prompted me for the directory where my application code is.

Figure 16: Screenshot showing where to enable access to directory for containerizing an application

I pointed it to my app’s directory and gave it permission. It then started to analyze and containerize my app. It picked up the language and started to read through my app’s README file.

Figure 17: Screenshot showing Gordon starting to analyze and containerize app

You can see that it understood the app was written in JavaScript and worked through the packages and dependencies.

Figure 18: Screenshot showing final steps of Gordon processing

Gordon understood that my app has a backend, a frontend, and a database, and from this determined that I would need a Docker Compose file.

Figure 19: Screenshot showing successful completion of steps to complete the Dockerfiles

From the following screenshot, you can see the Docker-related files needed for my app. Gordon created all of these.

Figure 20: Screenshot showing files produced from Gordon

Gordon created the Dockerfile (on the left) and a Compose YAML file (on the right), even picking up that I needed a Postgres DB for this application.

Figure 21: Screenshot showing Dockerfile and Compose YAML file produced from Gordon

I then took it a step further and asked Gordon to build and run the container for my application using the prompt “Can you build and run this application with compose?” It created the Docker Compose file, built the images, and ran the containers!

Figure 22: Screenshot showing completed containers from Gordon

Conclusion

I hope you picked up some useful insights about Docker and discovered one of its lesser-known AI features in Docker Desktop. We explored what Gordon is, how it compares to general-purpose generative AI tools like ChatGPT, Claude, and Gemini, and walked through use cases such as containerizing an application and working with local images. We also touched on how Gordon can support developers and IT professionals who work with containers. If you haven’t already, I encourage you to enable Gordon and take it for a test run. Thanks for reading and stay tuned for more blog posts coming soon.

Source: https://blog.docker.com/feed/