Gary Kildall: The man who invented the PC operating system

With CP/M, Gary Kildall laid the foundation for Microsoft’s MS-DOS, but Bill Gates won it all in the end. A portrait by Martin Wolf (Operating system, Microsoft)

Source: Golem

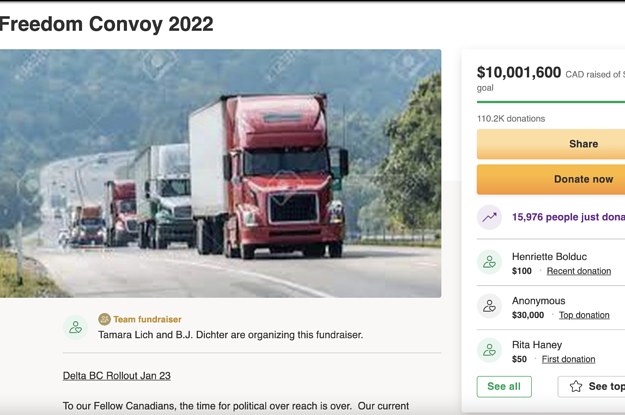

GoFundMe Says The Viral Campaign For Canada’s Trucker Protest Hasn’t Violated Its Rules Even Though It Sure Seems Like It Does

Source: BuzzFeed

In this multi-part tutorial, we cover how to provision RHEL VMs to a vSphere environment from Red Hat Satellite. In this part, we configure Satellite to manage content for our RHEL environment.

Source: CloudForms

Most businesses today choose Apache Spark for their data engineering, data exploration and machine learning use cases because of its speed, simplicity and programming language flexibility. However, managing clusters and tuning infrastructure have been highly inefficient, and the lack of an integrated experience for different use cases is draining productivity gains, opening up governance risks and reducing the potential value that businesses could achieve with Spark.

Today, we are announcing the general availability of Serverless Spark, the industry’s first autoscaling serverless Spark. We are also announcing the private preview of Spark through BigQuery, empowering BigQuery users to use serverless Spark for their data analytics, along with BigQuery SQL. With these, you can effortlessly power ETL, data science, and data analytics use cases at scale.

Dataproc Serverless for Spark (GA)

Per IDC, developers spend 40% of their time writing code, and 60% of their time tuning infrastructure and managing clusters. Furthermore, not all Spark developers are infrastructure experts, resulting in higher costs and productivity impact. Serverless Spark, now in GA, solves for these by providing the following benefits:

- Developers can focus on code and logic. They do not need to manage clusters or tune infrastructure. They submit Spark jobs from their interface of choice, and processing is auto-scaled to match the needs of the job.
- Data engineering teams do not need to manage and monitor infrastructure for their end users. They are freed up to work on higher value data engineering functions.
- Pay only for the job duration, vs paying for infrastructure time.

How OpenX is using Serverless Spark: From heavy cluster management to Serverless

OpenX, the largest independent ad exchange network for publishers and demand partners, was in the process of migrating an old MapReduce pipeline. The previous MapReduce cluster was shared among multiple jobs, which required frequent cluster size updates, autoscaler management and also, from time to time, cluster recreation due to upgrades as well as other unrecoverable reasons.

OpenX used Google Cloud’s serverless Spark to abstract away all the cluster resources and just focus on the job itself. This significantly helped to boost the team’s productivity, while reducing infrastructure costs. It turned out that for OpenX, serverless Spark is cheaper from the resource perspective, not to mention the maintenance costs of cluster lifecycle management.

During implementation, OpenX first evaluated the existing pipeline characteristics. It is an hourly batch workload whose input size varies from 1.7 TB to 2.8 TB of compressed Avro per hour. The pipelines were run on the shared cluster along with other jobs and do not require data shuffling.

To set up the flow, an Airflow Kubernetes worker is assigned to a workload identity, which submits a job to the Dataproc serverless batch service using Airflow’s plugin DataprocCreateBatchOperator. The batch job itself is assigned to a tailored service account, and the Spark application jar is released to Google Cloud Storage using a Gradle plugin, as Dataproc batches can load a jar directly from there. Another job dependency (Spark-Avro) is loaded from the driver or worker filesystem. The batch job is then started with an initial size of 100 executors (1 vCPU + 4 GB RAM each), and the autoscaler adds resources as needed, as the hourly data sizes vary during the day.

Serverless Spark through BigQuery (Preview)

We announced at NEXT that BigQuery is adding a unified interface for data analysts to write SQL or PySpark. That Preview is now live, and you can request access through the signup form.

You can write PySpark code in the BigQuery editor, and the code is executed using serverless Spark seamlessly, without the need for infrastructure provisioning. BigQuery has been the pioneer for serverless data warehousing, and now supports serverless Spark for Spark-based analytics.

What’s next?

We are working on integrating serverless Spark with the interfaces different users use, to enable Spark without any upfront infrastructure provisioning. Watch for the availability of serverless Spark through Vertex AI Workbench for data scientists, and Dataplex for data analysts, in the coming months. You can use the same signup form to express interest.

Getting started with Serverless Spark and Spark through BigQuery today

You can try serverless Spark using this tutorial, or use one of the templates for common ETL tasks. To sign up for the Spark through BigQuery preview, please use this form.
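As a rough illustration of the OpenX flow described above, the batch definition that an Airflow task hands to DataprocCreateBatchOperator might look like the sketch below. The helper name `build_batch_config`, the bucket path, the main class, the service account and the exact Spark properties are all assumptions made for illustration; the article does not show OpenX’s actual configuration.

```python
def build_batch_config(jar_uri, main_class, service_account, initial_executors=100):
    """Return a dict shaped like a Dataproc Serverless batch definition.

    All argument values are placeholders supplied by the caller; nothing
    here is taken from OpenX's real setup.
    """
    return {
        # The Spark application jar is loaded directly from Cloud Storage.
        "spark_batch": {
            "main_class": main_class,
            "jar_file_uris": [jar_uri],
        },
        # The batch runs under its own tailored service account.
        "environment_config": {
            "execution_config": {"service_account": service_account},
        },
        # Assumed properties: start with N executors and let autoscaling grow.
        "runtime_config": {
            "properties": {
                "spark.dynamicAllocation.initialExecutors": str(initial_executors),
                "spark.executor.memory": "4g",
            },
        },
    }


batch = build_batch_config(
    jar_uri="gs://example-bucket/jobs/pipeline.jar",          # hypothetical path
    main_class="com.example.HourlyPipeline",                  # hypothetical class
    service_account="batch-sa@example-project.iam.gserviceaccount.com",
)

# Inside an Airflow DAG, the dict could then be passed on roughly like this:
# from airflow.providers.google.cloud.operators.dataproc import DataprocCreateBatchOperator
# DataprocCreateBatchOperator(task_id="hourly_batch", project_id="example-project",
#                             region="us-central1", batch=batch, batch_id="hourly-0001")
```

Keeping the batch definition in a plain function makes it easy to check without Airflow or Google Cloud credentials; only the commented-out operator call needs the real environment.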

Source: Google Cloud Platform

The Asia-Pacific region has long been a strong manufacturing base and the sector continues to be a strong adopter of the Internet of Things (IoT). But as the latest Microsoft IoT Signals report shows, IoT is now much more widely adopted across verticals, and across the globe, with smart spaces—a key focus for many markets in the Asia-Pacific region—becoming one of the leading application areas.

The newest edition of this report provides encouraging reading for organizations in the Asia-Pacific region. The global study of over 3,000 business decision-makers (BDMs), developers, and internet technology decision-makers (ITDMs) across ten countries, including Australia, China, and Japan, shows that IoT continues to be widely adopted for a range of uses and is seen as critical to business success by a large majority. Further, rather than slowing growth, as some might have feared, the COVID-19 pandemic is driving even greater investment across different industries as IoT becomes more tightly integrated with other technologies.

Across the Asia-Pacific region, the research shows that organizations in Australia report the highest rate of IoT adoption at 96 percent—beating both Italy (95 percent) and the United States (94 percent)—and that organizations in China are adopting IoT for more innovative use cases and have the highest rates of implementation against emerging technology strategies. In Japan, it found that companies are using IoT more often to improve productivity and optimize operations. Below we dive into three key trends that emerge for organizations in this region.

1. A greater focus on planning IoT projects pays off

Whilst IoT projects in the region take slightly longer to reach fruition, it seems that this reflects a more thoughtful and diligent approach which appears to be paying off. By thinking through and taking time upfront to determine the primary business objectives for success, organizations in the Asia-Pacific region report high levels of IoT adoption (96 percent in Australia), importance (99 percent of companies in China say IoT is critical to business success), and overall satisfaction (99 percent and 97 percent in China and Australia respectively). These objectives are broadly in line with global findings, with quality assurance and cloud security consistently mentioned across all three countries in this region. Organizations in Australia and Japan adopt IoT to help with optimization and operational efficiencies: in Australia, the focus is on energy optimization (generation, distribution, and usage); and in Japan, it is on manufacturing optimization (agile factory, production optimization, and front-line worker). Those in Australia and China also tend to do more device monitoring as part of IoT-enabled condition-based maintenance practices.

Companies in the region report that these varied use cases are delivering significant benefits in terms of more operational efficiency and staff productivity, improved quality by reducing the chance of human error, and greater yield by increasing production capacity.

2. Emerging technologies accelerate IoT adoption

Of the organizations surveyed, the 88 percent that are set to either increase or maintain their IoT investment in the next year are more likely to incorporate emerging technologies such as AI, edge computing, and digital twins into their IoT solutions. And in the Asia-Pacific region, awareness of these technologies tends to be higher than in other markets.

Organizations in China are far more likely than their counterparts elsewhere to have strategies that address these three areas. They lead all other countries when it comes to implementing against AI and edge computing strategies, and a staggering 98 percent of companies in Australia that are aware of digital twins say they have a specific strategy for that technology. More significantly, their experience with these technologies is driving greater adoption of IoT across the region, with around eight in ten organizations working to incorporate them into their IoT solutions.

3. Industry-specific IoT solutions drive a broader range of benefits

The IoT Signals report analyzed several industries in-depth, all well represented in the Asia-Pacific region. Organizations in Australia, for instance, should note that energy, power, and utility companies use IoT to help with grid automation (44 percent) and maintenance (43 percent), while oil and gas companies tend to apply it more to workplace and employee safety (45 percent and 43 percent respectively). Energy companies are also much more likely to use AI in their IoT solutions than other industries (89 percent of organizations versus 79 percent for all verticals). The benefits of IoT being seen by organizations in these sectors include increases in operational efficiency, increases in production capacity, and increases in customer satisfaction.

In Japan, where manufacturing makes up an important part of the market, we find that there are more IoT projects in the usage stage (26 percent) than in other sectors, mainly focused on bolstering automation. Manufacturing organizations are using these IoT solutions to ensure quality, facilitate industrial automation, and monitor production flow. In doing so, they benefit from improved operational efficiency and greater production capacity, driving competitive advantage. In this industry, it’s not technology that poses a challenge but the huge business transformation that takes extra time and thought, often due to legacy systems and processes.

China, of course, has always been an innovator when it comes to devices, so its manufacturing sector will see the same impacts. But smart spaces, as in other countries in the Asia-Pacific region, are getting a lot of attention, and this is where we see the highest levels of IoT adoption (94 percent) and overall satisfaction (98 percent). It also has the strongest indications of future growth with 69 percent planning to use IoT more in the next two years. It’s also the industry sector where the highest proportion of organizations are implementing IoT against AI strategies. The top applications of IoT in smart spaces are around productivity and building safety, where organizations can benefit from improved operational efficiency and personal safety.

Learn more

It’s clear from the report that IoT is here to stay, and the diligent approach taken by organizations across the Asia-Pacific region is paying off. For a more detailed exploration of how businesses in this region and across the globe are leveraging IoT, as well as drilldowns into topics such as security, implementation strategy, and sustainability, make sure you download the full Microsoft IoT Signals report.

Source: Azure

Amazon Simple Queue Service (SQS) announces support in both AWS GovCloud (US) Regions for dead-letter queue (DLQ) redrive to the source queue, giving you better control over the lifecycle of unprocessed messages. Dead-letter queues are an existing Amazon SQS feature that lets customers store messages that applications could not process successfully. You can now efficiently redrive messages from your dead-letter queue to your source queue in the Amazon SQS console. DLQ redrive extends the dead-letter queue management experience for developers and lets them build applications with the confidence that they can examine their unprocessed messages, fix errors in their code, and reprocess the messages in their dead-letter queues.
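The examine, fix and reprocess cycle described above can be pictured with a small in-memory model. This is only a toy sketch and not the SQS API: the class `QueueWithDLQ`, its methods and the `max_receive_count` parameter are hypothetical names chosen for illustration.

```python
from collections import deque


class QueueWithDLQ:
    """Toy in-memory model of a source queue with a dead-letter queue (DLQ)."""

    def __init__(self, max_receive_count=3):
        self.max_receive_count = max_receive_count
        self.source = deque()  # entries are (body, receive_count)
        self.dlq = deque()     # bodies that exhausted their receive attempts

    def send(self, body):
        self.source.append((body, 0))

    def process_all(self, handler):
        """Deliver each message until it succeeds or runs out of attempts."""
        while self.source:
            body, count = self.source.popleft()
            count += 1
            try:
                handler(body)
            except Exception:
                if count >= self.max_receive_count:
                    self.dlq.append(body)               # give up: park it in the DLQ
                else:
                    self.source.append((body, count))   # retry later

    def redrive(self):
        """Move every DLQ message back to the source queue; return the count."""
        moved = len(self.dlq)
        while self.dlq:
            self.send(self.dlq.popleft())
        return moved


def buggy_handler(body):
    # Stand-in for application code that cannot process one message.
    if body == "order-2":
        raise ValueError("cannot parse message")


def fixed_handler(body):
    # Stand-in for the same code after the bug has been fixed.
    return None


queue = QueueWithDLQ(max_receive_count=2)
for body in ("order-1", "order-2", "order-3"):
    queue.send(body)

queue.process_all(buggy_handler)   # "order-2" fails twice and lands in the DLQ
moved = queue.redrive()            # after fixing the code, send it back
queue.process_all(fixed_handler)   # the redriven message now succeeds
```

In the real service the move is performed by SQS itself from the console; the model only shows why redrive is useful once the consumer bug is fixed.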

Source: aws.amazon.com

Phishing victims must be prepared to be accused of gross negligence. Whether the ruling will set a precedent, however, is unclear. (Phishing, Politics/Law)

Source: Golem

Just a few days before the release of Dying Light 2, it became known that the anti-tamper protection Denuvo is being used. (Denuvo, Steam)

Source: Golem

The two cable network operators Vodafone and Tele Columbus have acquired routes in a somewhat mysterious duct system that also runs through the subway tunnels. (Fiber optics, Vodafone)

Source: Golem

As it does every year, Tesla has published its Supercharger map of fast-charging stations, showing when and where new charging options will appear. (Tesla, Electric car)

Source: Golem