Face recognition improved: iOS 15.4 supports Face ID with a mask

iOS 15.4 makes it possible to unlock the iPhone via face recognition while wearing a mask. An Apple Watch is no longer required for this. (Apple, Applications)

Source: Golem

One of the two Wiz lamps shines upwards and downwards at the same time. A nice idea, but unfortunately several things do not work in the smart home control. A review by Ingo Pakalski (Smart Home, Review)

Source: Golem

The third generation of stacked memory has been specified: HBM3 quadruples the capacity and doubles the speed of the 3D memory. (3D memory, graphics hardware)

Source: Golem

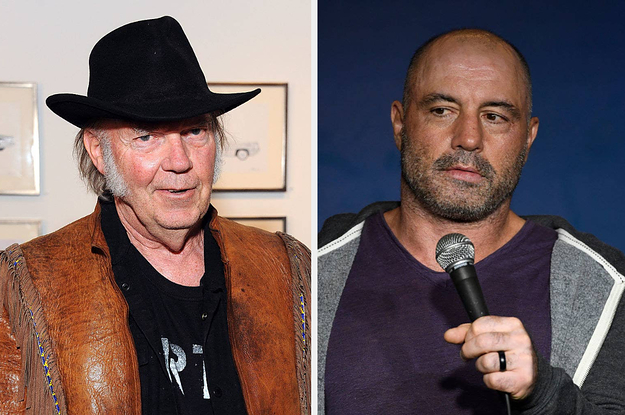

Source: BuzzFeed, "Spotify Has Agreed To Remove Neil Young's Music Catalog After He Took A Stand On Joe Rogan's Vaccine Misinformation"

Photo by Hamish Weir on Unsplash

Updates:
2020/08/27: Git repo updated with an option to support private subnets only.

Introduction

We define Auto Fleet Spotting as a way to support Auto Scaling of a fleet of Spot Instances on AWS EKS.

This implementation is based on the official upstream Terraform AWS EKS implementation and was extended to make it easy to deploy EKS clusters with Kubernetes 1.17.9 in any region with Auto Fleet Spotting support.

With this simple, Docker-based AWS EKS deployment automation you’ll not only save time on EKS deployments and enforce some security best practices, you can also reduce your Kubernetes costs by up to 90%.

I’m not joking: we’re using this implementation to develop and run our own Kubernautic Platform, which is the basis of our Rancher Shared as a Service offering.

We’re using the same implementation for other dedicated Kubernetes clusters on AWS EKS, which run with a Spot to On-Demand Instance ratio of 98% and scale roughly from 6 to 40 Spot Instances during the day. Here is the Grafana view of the node count of a real-world project over the last 7 days.

TL;DR

If you’d like to try this implementation, all you need to do is clone the extended AWS Terraform EKS repo and run the docker/eks container to deploy the latest EKS Kubernetes version, 1.17.9 (at the time of writing, 2020/08/16).
If you’d like to build your own image, please refer to this section of the README file in the repo.

# Do this at your own risk, if you are willing to trust me :-)
$ git clone https://github.com/arashkaffamanesh/terraform-aws-eks.git && cd terraform-aws-eks
$ docker run -it --rm -v "$HOME/.aws/:/root/.aws" -v "$PWD:/tmp" kubernautslabs/eks -c "cd /tmp; ./2-deploy-eks-cluster.sh"

Run EKS without the Docker Image

If these Prerequisites are met on your machine, you can run the deployment script directly, without the Docker container:

$ ./2-deploy-eks-cluster.sh

Would you like to have a cup of coffee, tea or a cold German beer?

The installation should take about 15 minutes, time enough to enjoy a delicious cup of coffee, tea or a cold German beer ;-)

Let me describe what the deployment scripts mainly do, how you can extend the cluster with a new worker node group for On-Demand or additional Spot Instances, and how to deploy additional add-ons like Prometheus, HashiCorp Vault, Tekton for CI/CD and GitOps, HelmOps, etc.

The first script, `./1-clone-base-module.sh`, copies the base folder from the root of the repo to a new folder named after the cluster name you provide when running the script, and places a README file in the cluster folder with the steps needed to run the EKS deployment step by step.

The second script, `2-deploy-eks-cluster.sh`, makes life easier by automating all the steps needed to deploy an EKS cluster.
The deployment script mainly asks you for the cluster name and region, applies a few sed operations to substitute values in the configuration files (the S3 bucket name, the cluster name and the region), creates a key pair and the S3 bucket, runs `make apply` to deploy the cluster with terraform, and then deploys the cluster autoscaler by setting the autoscaling group name in cluster-autoscaler-asg-name.yml.

Why use S3?

The S3 bucket is very useful if you want to work with your team to extend the cluster: it mainly stores the cluster state file `terraform.tfstate`. The S3 bucket must have a unique name and is defined as the cluster name in the backend.tf file:

https://medium.com/media/cc96ff9a3a086aaf41b1262a673d7070/href

One of the essential parts of getting autoscaling to work with Spot Instances is to define the right IAM Policy Document and attach it to the worker autoscaling group of the worker node group IAM role:

https://medium.com/media/4a26caa805dd79cdb52ed85ad56fa92a/href

How does the whole thing work?

Each cluster may have one or more Spot Instance or On-Demand worker groups, sometimes also referred to as node pools.
The initial installation deploys only one worker group, named spot-1, which we can easily extend after the initial deployment by running make apply again.

If you’d like to add a new On-Demand worker group, you have to extend the `main.tf` file in your cluster module (in terraform, a folder is called a module) as shown below and run make plan followed by make apply:

$ make plan
$ make apply

https://medium.com/media/6a117e59f75001d9794bd3edfed50df8/href

Using Private Subnets

I extended the VPC module definition to make use of private subnets with a larger /20 network address range, which allows us to use up to 4094 IPs for our pods.

https://medium.com/media/98f725c41df79cb9caeace191ca538eb/href

Add-Ons

Some additional components, which we refer to as add-ons, are provided in the addons folder in the repo. Each add-on should have a README file describing how to use it.

Testing

To test how the auto-scaling of Spot Instances works, you can deploy the provided nginx-to-scaleout.yaml from the addons folder, scale it to 10 replicas and watch the Spot Instances scale out, then decrease the number of replicas to 1 and watch the Spot Instances scale back in (scaling in may take 5 minutes or longer, or may not happen at all if something was not configured properly!).

k create -f ../addons/nginx-to-scaleout.yaml
k scale deployment nginx-to-scaleout --replicas=10
k get pods -w
k get nodes

Conclusion

Running an EKS cluster with autoscaling support for Spot or On-Demand Instances is a matter of running a single docker run command, even if you don’t have terraform, the aws-iam-authenticator or the kubectl-terraform plugin installed.
All needed configurations are provided as code; we didn’t even have to log in to the AWS web console to set up the EKS cluster and configure the permissions of the cluster autoscaler for our worker groups.

This is true Infrastructure as Code, achieved with a few lines of code and minor adaptations to the upstream Terraform EKS implementation to run EKS with support for autoscaling of Spot and On-Demand Instances.

We are using this implementation with Kubernetes 1.15 today. Since an in-place migration from 1.15 directly to 1.17 can’t work, we’re going to use blue-green deployments with traffic routing to migrate our workloads and the users of our Kubernautic Platform to a new environment, where users will be able to use a full-fledged, fully isolated virtual Kubernetes cluster running in a Stateful Namespace. Stay tuned!

Questions?

Join us on the Kubernauts Slack channel or add a comment here.

We’re hiring!

We are looking for engineers who love to work in Open Source communities like Kubernetes, Rancher, Docker, etc.

If you wish to work on such projects and get involved in building the NextGen Kubernautic Platform, please visit our job offerings page or ping us on Twitter.

Related resources

Terraform AWS EKS
Terraform Provider EKS

Hey Docker run EKS, with Auto Fleet Spotting Support, please! was originally published in Kubernauts on Medium, where people are continuing the conversation by highlighting and responding to this story.
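
The figure of 4094 addresses for a /20 range, mentioned under Using Private Subnets, follows directly from the prefix length. The following is a minimal Bash sketch of that arithmetic; the function name `usable_hosts` is hypothetical and not part of the repo. It computes the generic IPv4 figure only. AWS reserves a few addresses in every subnet, so the number actually available to pods will be somewhat lower.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the terraform-aws-eks repo):
# usable IPv4 host addresses for a given prefix length, i.e.
# 2^(32 - prefix) minus the network and the broadcast address.
usable_hosts() {
  local prefix="$1"
  echo $(( (1 << (32 - prefix)) - 2 ))
}

usable_hosts 20   # prints 4094
```

A /24 range evaluated the same way gives 254 addresses, which shows why the larger /20 range leaves far more room for pod IPs.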

Source: blog.kubernauts.io

Centralize your log storage. Continue reading on Kubernauts »

Source: blog.kubernauts.io

Photo by Jonathan Singer on Unsplash

This tutorial should help you get started with Helm Operations, referred to as HelmOps, and CI/CD with Tekton on K3s running on your laptop, deployed on multipass Ubuntu VMs with MetalLB support. We’ll use Chartmuseum to store a simple Helm Chart in our own Helm Repository and deploy our apps from there through Tekton Pipelines in the next section of this tutorial.

This guide should work with any K8s implementation running locally or on any cloud, with some minor adaptations to the scripts provided in the GitHub repo of Bonsai.

To learn more about K3s and our somewhat opinionated Bonsai implementation on Multipass VMs with MetalLB support, please refer to this article about K3s with MetalLB on Multipass VMs.

Get started

With the following commands you should get a K3s cluster running on your machine in about 3 minutes on real Ubuntu VMs, which we sometimes refer to as a near-to-production environment.

git clone https://github.com/kubernauts/bonsai.git
cd bonsai
./deploy-bonsai.sh
# please accept all default values (1 master and 2 workers)

Deploy Chartmuseum

Prerequisites

A running k8s cluster
LB service (it will be installed by default with Bonsai)
helm3 version

We deploy chartmuseum with custom values and create an ingress with the chartmuseum.local domain name.

Please set the following host entries in your /etc/hosts file:

# please replace the IP below with the external IP of the traefik LB (run `kubectl get svc -A` and find the external IP)
192.168.64.26 chart-example.local
192.168.64.26 chartmuseum.local
192.168.64.26 tekton-dashboard.local
192.168.64.26 registry-ui.local
192.168.64.26 getting-started-triggers.local

And deploy chartmuseum:

cd addons/chartmuseum
helm repo add stable https://kubernetes-charts.storage.googleapis.com
kubectl create ns chartmuseum
kubectl config set-context --current --namespace chartmuseum
helm install chartmuseum stable/chartmuseum --values custom-values.yaml
kubectl apply -f ingress.yaml

Now you should be able to access chartmuseum through:

http://chartmuseum.local

If you see “Welcome to ChartMuseum!”, you’re fine.

Now we are ready to add the chartmuseum repo, install the helm-push plugin, package the sample hello-helm chart and push it to our chartmuseum repo running on K3s:

# add the repo
helm repo add chartmuseum http://chartmuseum.local
# install helm push plugin:
helm plugin install https://github.com/chartmuseum/helm-push.git
# build the package:
cd hello-helm/chart
helm package hello/
# the helm package name will be hello-1.tgz
ls -l hello-1.tgz
# push the package to chartmuseum
helm push hello-1.tgz chartmuseum
# You should get:
Pushing hello-1.tgz to chartmuseum…
Done.

Install the Chart

To install a chart, this is the basic command:

helm install <chartmuseum-repo-name>/<chart-name> --name <release-name> (helm2)
helm install <release-name> <chartmuseum-repo-name>/<chart-name> (helm3)

We need to update our helm repos first and then install the chart:

helm repo update
helm install hello chartmuseum/hello

We should get output similar to this:

NAME: hello
LAST DEPLOYED: Sat Jul 25 17:56:23 2020
NAMESPACE: chartmuseum
STATUS: deployed
REVISION: 1
TEST SUITE: None

Harvest your work

curl http://chart-example.local/
Welcome to Hello Chartmuseum for Private Helm Repos on K3s Bonsai!

What happened here?

We deployed the chart with helm from the command line. The hello service was defined in values.yaml with the type LoadBalancer and received an external IP from MetalLB.

https://medium.com/media/262c126dd063c5258cd7e13a936c6f93/href

In the values.yaml above we enable an ingress and set the hostname chart-example.local as the domain name, which is mapped in our /etc/hosts file to the IP address of the traefik load balancer. Alternatively, we could map the domain to the external IP address of the hello-hello service (in my case 192.168.64.27).

Delete the chart and the helm hello release

Since we want to automate the deployment process in the next section with Tekton Pipelines and do the CI part as well, we’ll clean up the deployment and delete our chart from chartmuseum.

helm delete hello
curl -i -X DELETE http://chartmuseum.local/api/charts/hello/1

CI/CD and HelmOps on Kubernetes

In this section, we introduce Tekton and Tekton Pipelines, the technological successor to Knative Build, which provides a Kube-Native style for declaring CI/CD pipelines on Kubernetes. Tekton supports many advanced CI/CD patterns, including rolling, blue/green, and canary deployment. To learn more about Tekton, please refer to the official Tekton documentation and also visit the new neutral home for the next generation of continuous delivery collaboration by the Continuous Delivery Foundation (CDF).

For the impatient

Katacoda provides a very nice scenario for Tekton which demonstrates building, deploying, and running a Node.js application with Tekton on Kubernetes using a private docker registry.

By going through the Katacoda scenario in about 20 minutes, you should be able to learn about Tekton concepts, how to define various Tekton resources like Tasks, Resources and Pipelines, and how to kick off a process through PipelineRuns to deploy our apps in a GitOps style on Kubernetes.

In this section, we’re going to extend that scenario with Chartmuseum from the first section and provide some insights into Tekton Triggers, which round off this tutorial and hopefully help you use it in your daily projects.

About GitOps and xOps

GitOps is buzzing these days, after the dust around DevOps, DevSecOps, NoOps, or xOps has settled. We asked the community about the future of xOps and what’s coming next; if you’d like to provide your feedback, we’d love to have it:

@kubernauts (embedded tweet)

The reality is that we did CVS- and SVN-Ops about 15 years ago with CruiseControl for both CI and CD. Later, Hudson was born from the awesome folks at Sun Microsystems and was renamed to Jenkins, which is still one of the most widely used CI/CD tools today.

Times have changed, and with the rise of Cloud Computing, Kubernetes and the still buzzing term Cloud-Native, which is a new way of thinking, the whole community is bringing new innovations at the speed of light.

Cloud Native: A New Way Of Thinking!

New terms like Kube-Native-Ops, AppsOps, InfraOps and now HelmOps are not so common or buzzing yet at the time of writing.
We believe that Tekton, or Jenkins-X which is based on Tekton, along with other nice solutions like ArgoCD, FluxCD, Spinnaker or Screwdriver, are going to change the way we automate the delivery and roll-out of our apps and services the Kube-Native way on Kubernetes.

With that said, let’s start with xOps: deploy our private docker registry and Tekton on K3s and run our simple node.js app from the previous section through helm, triggered by Tekton Pipelines from the Tekton Dashboard.

Make sure you have helm3 in place, add the stable and incubator Kubernetes Charts Helm repos, and install the docker registry proxy, the registry UI and the ingress for the registry UI:

helm version --short
helm repo add stable https://kubernetes-charts.storage.googleapis.com
helm repo add incubator http://storage.googleapis.com/kubernetes-charts-incubator
helm upgrade --install private-docker-registry stable/docker-registry --namespace kube-system
helm upgrade --install registry-proxy incubator/kube-registry-proxy --set registry.host=private-docker-registry.kube-system --set registry.port=5000 --set hostPort=5000 --namespace kube-system
kubectl apply -f docker-registry-ui.yaml
kubectl apply -f ingress-docker-registry-ui.yaml

Access the Docker Registry

http://registry-ui.local/

Deploy Tekton Resources, Triggers and Dashboard

kubectl apply -f pipeline-release.yaml
kubectl apply -f triggers-release.yaml
kubectl config set-context --current --namespace tekton-pipelines
kubectl apply -f tekton-dashboard-release.yaml
kubectl apply -f ingress-tekton-dashboard.yaml

Access the Tekton Dashboard

The Tekton dashboard for this installation on K3s can be reached via

http://tekton-dashboard.local/

The dashboard is great for seeing how Tasks and PipelineRuns are running, for getting the logs for troubleshooting if something goes wrong, or for selling it as your xOps Dashboard ;-)

For now we don’t have any pipelines running.
We’re going to run some tasks first, build a pipeline and initiate it in the next steps along with pipeline runs. But before we fire the next commands, I’d like to explain what we’re going to do.

We have 2 apps. The first app is named app; it is built as an image from the source on GitHub with the kaniko executor, pushed to our private docker registry and deployed from there into the hello namespace, where we also deploy our hello app from our private chartmuseum repo for the HelmOps showcase.

N.B.: we cleaned up the same hello app at the end of the first section of this tutorial and are going to deploy it through HelmOps.

Take it easy or not, we’re going to create a task, build a pipeline containing our task, use the TaskRun resource to instantiate and execute a Task outside of the Pipeline, and use the PipelineRun to instantiate and run the Pipeline containing our Tasks. Oh my God? ;-)

Take it easy, let’s start with HelmOps or xOps

You need the tkn cli to follow the next steps; I’m using the latest version at the time of writing.

➜ pipeline git:(master) ✗ tkn version
Client version: 0.11.0
Pipeline version: v0.15.0
Triggers version: v0.7.0

We create a namespace named hello and switch to it:

kubectl create ns hello
kubectl config set-context --current --namespace hello

Now we need to define the git-resource, which is the source in our git repo, defined through the custom resource definition PipelineResource:

https://medium.com/media/d3cfe785de60fce2658e90bfeb8bf36e/href

And apply the git-resource:

kubectl apply -f node-js-tekton/pipeline/git-resource.yaml

With that we define where our source comes from in git, in our case GitHub.

We can now list our first resource with the tkn cli tool as follows:

tkn resources list

In the next step, we’re going to define a task named “build-image-from-source”.
In the spec part of the Task object (CRD) we define the git-source with the type git and some parameters (pathToContext, pathToDockerfile, imageUrl and imageTag) as inputs, as well as the steps needed to run the task: list-src (which is optional) and the build-and-push step, which uses the kaniko-project executor image with the command /kaniko/executor/ and some args to build and push the image.

https://medium.com/media/d77e198facd1fbc89e8882dd49015ab9/href

Now we need to deploy our build-and-push Task resource to our cluster with:

kubectl apply -f node-js-tekton/pipeline/task-build-src.yaml

And deploy another Task, which is defined in task-deploy.yaml:

https://medium.com/media/c2fcf43d3fd076b7c5df2391cc2c5140/href

This Task has 4 steps:

update-yaml # set the image tag
deploy-app # deploy the first app
push-to-chartmuseum # push the hello helm chart to chartmuseum
helm-install-hello # install the helm chart to the cluster

The last 3 steps use a slightly extended helm-kubectl image which has the helm push plugin installed.

N.B. the Dockerfile for helm-kubectl is provided under addons/helm-kubectl.

We can now apply task-deploy.yaml and list our tasks with:

kubectl apply -f node-js-tekton/pipeline/task-deploy.yaml
tkn tasks list

And finally we’ll declare the pipeline and run it with the pipeline-run entity.

Nice to know: In PipelineRun we define a collection of resources that define the elements of our Pipeline, which include tasks.

kubectl apply -f node-js-tekton/pipeline/pipeline.yaml
tkn pipelines list
kubectl apply -f node-js-tekton/pipeline/service-account.yaml
kubectl get ServiceAccounts
kubectl apply -f node-js-tekton/pipeline/pipeline-run.yaml
tkn pipelineruns list
tkn pipelineruns describe application-pipeline-run
kubectl get all -n hello
curl chart-example.local
Welcome to Hello Chartmuseum for Private Helm Repos on K3s Bonsai!

Harvest your Helm-X-Ops work

If all steps worked, you should see in the Tekton Dashboard what you did in the hello namespace. Enjoy :-)

What’s coming next: Automation

In the third section of this tutorial, we’ll see how to use Tekton Triggers to automate our HelmOps process. Stay tuned.

Related resources

Sample node-js app for tekton
https://github.com/javajon/node-js-tekton
Tekton Pipelines on Github
https://github.com/tektoncd/pipeline
Katacoda Scenarios
https://www.katacoda.com/javajon/courses/kubernetes-pipelines/tekton
https://katacoda.com/ncskier/scenarios/tekton-dashboard
Tekton Pipelines Tutorial
https://github.com/tektoncd/pipeline/blob/master/docs/tutorial.md
Open-Toolchain Tekton Catalog
open-toolchain/tekton-catalog
GitOps
https://www.gitops.tech/
Building a ChatOps Bot With Tekton

Hello HelmOps with Tekton and Chartmuseum on Kubernetes was originally published in Kubernauts on Medium, where people are continuing the conversation by highlighting and responding to this story.
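
The package file name produced by helm package and the URL used to delete the chart from chartmuseum in the first section are both derived from the same chart name and version. The following is a minimal Bash sketch of that relationship. The two function names are hypothetical helpers for illustration; only the `hello-1.tgz` name and the `/api/charts/hello/1` path are taken from the tutorial.

```shell
#!/usr/bin/env bash
# Hypothetical helpers, for illustration only.

# File name of a packaged chart: <name>-<version>.tgz
chart_package() {
  local name="$1" version="$2"
  printf '%s-%s.tgz\n' "$name" "$version"
}

# URL for deleting one chart version from a chartmuseum instance:
# <base-url>/api/charts/<name>/<version>
chart_delete_url() {
  local base="$1" name="$2" version="$3"
  printf '%s/api/charts/%s/%s\n' "$base" "$name" "$version"
}

chart_package hello 1
chart_delete_url http://chartmuseum.local hello 1
```

With the values from the tutorial, the first call prints hello-1.tgz and the second prints the URL passed to curl -X DELETE above. When the chart version changes, both change together.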

Source: blog.kubernauts.io

Deploy Ghost in a Spot Namespace on Kubernautic from your browser

Photo by Karim MANJRA on Unsplash

In my previous post, Spot Namespaces were introduced.

In this post I’m going to show you how you can run Ghost, a headless CMS, or your own apps in a namespace on Kubernautic by defining the proper requests and limits in the deployment manifest of Ghost or your own app.

After you’ve logged in to Rancher, you’ll have access to your project on your assigned Kubernetes cluster in the form <user-id>-project-spot-namespace:

Click on the project and then on Namespaces in the navigation menu bar; you can now see the namespace which is assigned to your project:

Now that we know the id of our namespace, we’re ready to deploy our Ghost app.

First you need to select the cluster and click on the “Launch kubectl” button:

With that we have shell access to the cluster in our browser:

With the following kubectl command, you can switch to your namespace:

kubectl config set-context --current --namespace <your namespace>

And deploy Ghost like this:

kubectl create -f https://raw.githubusercontent.com/kubernauts/practical-kubernetes-problems/master/3-ghost-deployment.yaml

Please note that in the spec part of the manifest we set the limits for CPU and memory in the resources part of our ghost container; otherwise you will not be able to deploy the app.

spec:
  containers:
  - image: ghost:latest
    imagePullPolicy: IfNotPresent
    name: ghost
    resources:
      limits:
        memory: 512Mi
        cpu: 500m
      requests:
        memory: 256Mi

To see if our deployment was successful, we can run:

kubectl get deployment

Now we can easily create an L7 ingress to ghost in Rancher by selecting Resources → Workloads → LoadBalancing → Add ingress.

In this example we tell Rancher to generate a .xip.io hostname for us, select ghost as the target and enter port 2368 in the Port field:

After a couple of seconds the L7 ghost ingress is ready to go; click on the ghost-ingress-xyz-xip.io link and enjoy:

Questions?

If you have any questions, we’d love to welcome you to our Kubernauts Slack channel and be of help.

We’re hiring!

We love people who love building things, New Things!

If you wish to work on New Things, please visit our job offerings page.

Deploy Ghost in a Spot Namespace on RSaaS from your browser was originally published in Kubernauts on Medium, where people are continuing the conversation by highlighting and responding to this story.

Source: blog.kubernauts.io

Kubernautic Data Platform base components

Introduction

Every digital transformation needs a data platform to transform data and master the challenges of Data Operations, referred to as DataOps, to bring together data, apps and processes made by humans and machines.

To make data accessible in real time to the right data scientists by decoupling business decisions from the underlying infrastructure, organizations need to remove bottlenecks from their data projects by implementing a Data Platform based on the best DataOps practices.

DataOps Defined

According to Wikipedia, DataOps was first introduced by Lenny Liebmann, Contributing Editor, InformationWeek, in a blog post on the IBM Big Data & Analytics Hub titled “3 reasons why DataOps is essential for big data success” on June 19, 2014.

DataOps is an automated, process-oriented methodology, used by analytic and data teams, to improve the quality and reduce the cycle time of data analytics. While DataOps began as a set of best practices, it has now matured to become a new and independent approach to data analytics.

DataOps Re-Defined

Eckerson Group re-defines DataOps as follows, in a way we like very much:

“DataOps is an engineering methodology and set of practices designed for rapid, reliable, and repeatable delivery of production-ready data and operations-ready analytics and data science models. DataOps enhances an advanced governance through engineering disciplines that support versioning of data, data transformations, data lineage, and analytic models. DataOps supports business operational agility with the ability to meet new and changing data and analysis needs quickly.
It also supports portability and technical operations agility with the ability to rapidly redeploy data pipelines and analytic models across multiple platforms in on-premises, cloud, multi-cloud, and hybrid ecosystems.” [1]

Take it easy: DataOps combines Agile development, DevOps and statistical process controls and applies them to data analytics, but it needs a well-designed Data Platform!

What is a Data Platform?

We define a Data Platform as the infrastructure with a minimal, easy-to-extend set of base components needed to successfully deliver data-driven business outcomes, with increased productivity and improved collaboration between data engineers, data operators and security operators, through a governed Self-service operation with the highest possible automation.

That said, we believe governed Self-service operation and automation are key to the success of most DataOps initiatives, and they need a Platform to build Platforms. With Kubernautic Platform we made it possible to build a Data Platform based on Kubernetes, which is a Platform to build Platforms.

Kubernautic Data Platform: DataOps Orchestration on Kubernetes

Kubernautic Data Platform provides a cloud-native infrastructure with the base components as the foundation to run DataOps initiatives on Kubernetes through orchestration in a Self-service manner, without the need to operate the platform on top of our Kubernautic offering.

Kubernautic Data Platform is designed to enable data scientists to deploy, implement and run their data analytics pipelines built on top of Apache Kafka, Cassandra, MQTT implementations, Spark and Flink with Jupyter notebooks to achieve the following goals:

Gather and manage data in one secure place
Build and share interactive dashboards
Reduce time spent on errors and operational tasks
Accelerate productivity through team collaboration
Orchestrate your development and production pipelines for re-usability and security
Unlock the full potential of DataOps without the need to operate the platform
Create innovative data analytics and deliver intelligent business value

DataOps Needs a Culture made by People to build the Data Factory

Like DevOps, DataOps is mainly a principle for creating and developing a strategy in your organisation to overcome cultural obstacles and achieve higher agility in the development and delivery of enterprise-grade data pipelines, turning data into value through automation and the right processes and product design, made by people, to build the Data Factory!

Kubernautic Data Platform: The DataOps Data Factory

These days machines are assembled mostly by machines designed by people and complex processes in a data-driven world to deliver products in factories.

The following quote from Elon Musk describes the true problem and the solution: thinking of the factory like a product.

“We realised that the true problem, the true difficulty, and where the greatest potential is, is building the machine that makes the machine. In other words, it’s building the factory. I’m really thinking of the factory like a product.” Elon Musk

With Kubernautic Data Platform we deliver a highly automated system to build a Data Factory for DataOps, in order to build enterprise-grade data-driven products and services on top of our Kubernautic Platform.

Some base components of Kubernautic Data Platform, which mainly provide the persistence layer of the platform, are presented in the following figure:

Automation and Self-service at the heart of Kubernautic Data Platform

Like our Kubernautic public offering, where developers get instant free access to Spot Namespaces on Kubernetes and B2B customers get a dedicated Kubernetes cluster managed by Rancher Cluster Manager within minutes, Kubernautic Data Platform provides the same agile experience for accessing the Data Factory: data pipeline and model orchestration, plus test and deployment automation for data pipelines and analytic models, to make decisions faster with high-quality data governed across a range of users, use cases, architectures and deployment options.

According to Gartner’s Survey Analysis from March 2020 titled “Data Management Struggles to Balance Innovation and Control” [2], only 22% of a data team’s time is spent on new initiatives and innovation.

The Self-service capability of our Data Platform allows DevOps teams to provision Kubernetes clusters with DataOps base components within a few hours, rather than waiting days or weeks for IT operations teams to set up the environment to explore, blend, enrich and visualize data. It is exactly this Self-service capability of Kubernautic Data Platform that boosts innovation in your DataOps projects.

DataOps and MLOps are almost the same, but …

MLOps stands for Machine Learning Operations and is almost the sibling of DataOps.

We love the great blog post by Fernando Velez, Chief Data Technologist at Persistent, in Data-Driven Business and Intelligence, titled:

“DataOps and MLOps: Almost, But Not Quite The Same” [3]

and invite you to read through Fernando’s view on this topic, which might help keep your Data and Machine Learning initiatives accurate over time with NoOps!

Related resources

[1] DataOps: More Than DevOps for Data Pipelines (by Eckerson Group)
https://www.eckerson.com/articles/dataops-more-than-devops-for-data-pipelines
[2] Survey Analysis: Data Management Struggles to Balance Innovation and Control
https://www.gartner.com/en/documents/3982237/survey-analysis-data-management-struggles-to-balance-inn
[3] DataOps and MLOps: Almost, But Not Quite The Same
https://www.persistent.com/blogs/dataops-and-mlops-almost-but-not-quite-the-same/

Kubernautic Data Platform for DataOps & MLOps was originally published in Kubernauts on Medium, where people are continuing the conversation by highlighting and responding to this story.

Source: blog.kubernauts.io

RHEL 8.5 introduced a new Microsoft SQL Server system role that automates the installation, configuration, and tuning of Microsoft SQL Server on RHEL. In this post, learn how to use the network system role to implement a bonded active-backup network interface.

Source: CloudForms