P2 powers internal collaboration at WordPress.com — and now it’s free for everyone.

As more collaboration happens remotely and online (work, yes, but increasingly also school and personal relationships), we’re all looking for better ways to work together. Normally, teachers hand out homework to students in person, and project leaders gather colleagues around a conference table for a presentation. Suddenly all this is happening in email, and Slack, and Zoom, and Google Docs, and a dozen other tools.

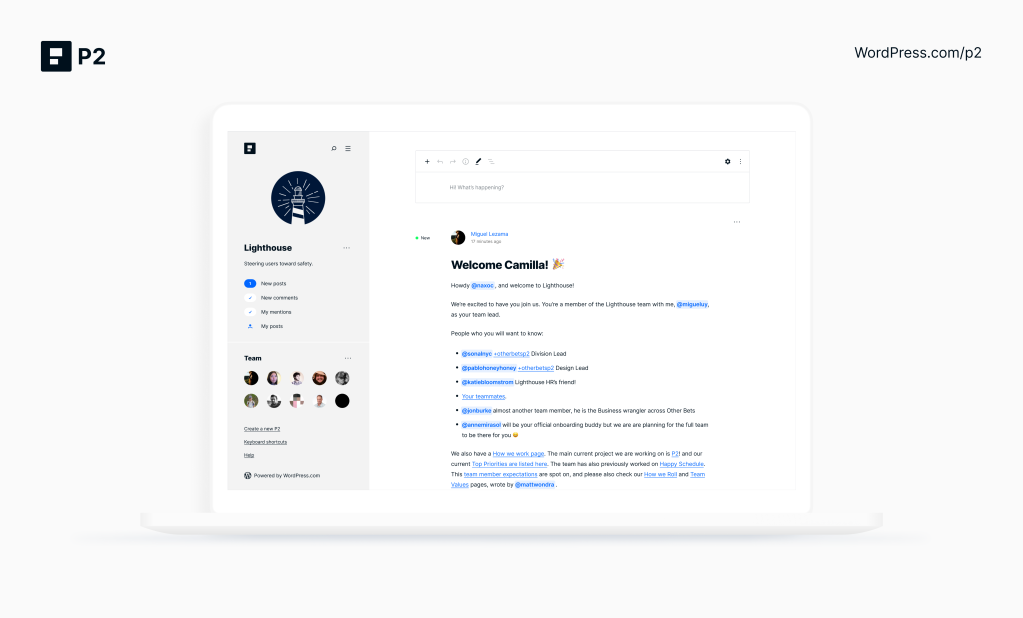

At WordPress.com, we have spent 15 years as a fully distributed company, with over 1,200 employees working from 77 countries, and we rely on P2: an all-in-one team website, blog, database, and social network that consolidates communications and files in one accessible, searchable spot.

It powers work at WordPress.com, WooCommerce, and Tumblr. And today, a beta version is available for anyone — for your newly-remote work team, your homeschooling pod, your geographically scattered friends. P2 is the glue that gives your group an identity and coherence.

What’s P2?

P2 moves your team or organization away from scattered communication and siloed email inboxes. Any member of your P2, working on any kind of project together, can post regular updates. Discussions happen via comments, posted right from the front page and updated in real time, so your team can brainstorm, plan, and come to a consensus. Upload photos or charts, take a poll, embed files, and share tidbits from your day’s activities. Tag teammates to get their attention. Your P2 members can see updates on the Web, via email, or in the WordPress mobile apps.

Keep your P2 private for confidential collaboration. Or make it public to build a community. How you use it and who has access is up to you. And as folks come and go, all conversations and files remain available on the P2, and aren’t lost in anyone’s inbox.

The beta version of P2 is free for anyone, and you can create as many P2 sites as you need. (Premium versions are in the works.)

What can I use P2 for?

Inside Automattic, we use P2 for:

- Companywide blog posts from teams and leadership, where everyone can ask questions via comments.
- Virtual “watercoolers” to help teammates connect: there are P2s for anything from music to Doctor Who to long-distance running.
- Project planning updates.
- Sharing expertise with our broader audience. We’ve got a P2 with guidance on how to manage remote work, and WooCommerce uses P2 to organize their global community.

P2 works as an asynchronous companion to live video like Zoom or live chat like Slack. You have those tools for when a real-time conversation gets the job done, and P2 for reflection, discussion, and documenting decisions.

How can you use your P2?

- Plan a trip with friends and family: share links, ticket files, and travel details. (See an example on this P2!)
- Create a P2 for your school or PTA to share homeschooling resources and organize virtual events.
- Manage your sports team’s schedules and share photos from games.
- Let kids track and submit homework assignments remotely, with a space for Q&A with your students.

How can I learn more?

Visit this demo P2 to learn the P2 ropes! Check out a range of example posts and comments to see how you can:

- Post, read, comment, like, and follow conversations.
- @-mention individuals and groups to get their attention.
- Share video, audio, documents, polls, and more.
- Access in-depth stats and get notifications.

Ready for your own P2?

Visit WordPress.com/p2 and create your own P2.

Source: RedHat Stack