Gwyneth Paltrow’s Goop Just Got Slammed For Deceptive Advertising

Mat Hayward

Gwyneth Paltrow’s Goop routinely draws criticism for its promotion of crystals, supplements, vaginal jade eggs, and all manner of other health products. Now, a consumer watchdog group says that Goop can’t back up many of its promises about improving health — and it wants regulators to investigate what it says is deceptive advertising.

On Tuesday, Truth in Advertising said that it had catalogued more than 50 instances of the e-commerce startup claiming that its products — along with outside products it promotes on its blog and in its newsletter — could treat, cure, prevent, alleviate, or reduce the risk of ailments such as infertility, depression, psoriasis, anxiety, and even cancer. “The problem is that the company does not possess the competent and reliable scientific evidence required by law to make such claims,” the advocacy group wrote in a blog post.

Truth in Advertising says Goop made these explicit and indirect claims both on its website and at its inaugural wellness summit in June.

The group, which has previously slammed the Kardashians for their allegedly deceptive Instagram ads, brought its Goop complaints to two California district attorneys who are part of a state task force that prosecutes matters related to product safety and food, drug, and medical device labeling. Goop, founded as a newsletter by the Academy Award-winning actress in 2008, is headquartered in Los Angeles. It has raised $20 million in venture capital.

Here are a few examples of Goop’s allegedly deceptive marketing, as captured by Truth in Advertising.

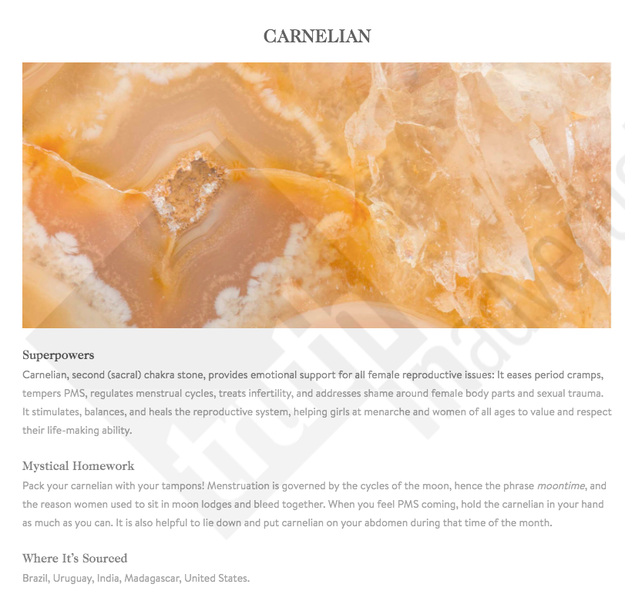

This crystal “eases period cramps, tempers PMS, regulates menstrual cycles, treats infertility.”

Truth in Advertising / Via truthinadvertising.org

One post promoted walking barefoot, a.k.a. “earthing”: “Several people in our community,” including Paltrow, “swear by earthing — also called grounding — for everything from inflammation and arthritis to insomnia and depression.”

Truth in Advertising / Via truthinadvertising.org

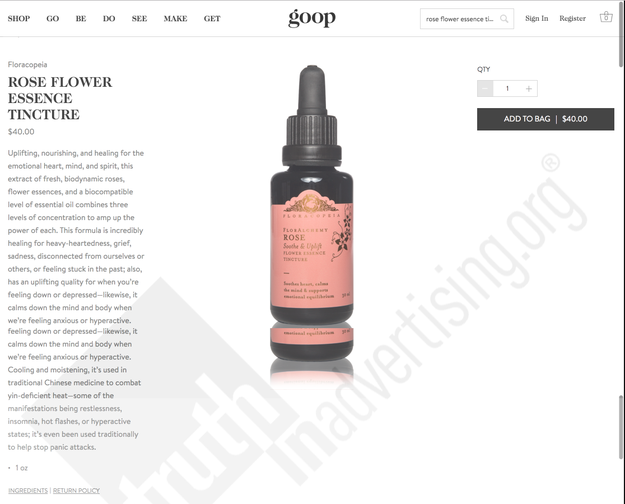

Rose extract for panic attacks, among other things.

“Cooling and moistening, it's used in traditional Chinese medicine to combat yin-deficient heat — some of the manifestations being restlessness, insomnia, hot flashes, or hyperactive states; it’s even been used traditionally to help stop panic attacks.”

Truth in Advertising / Via truthinadvertising.org

Truth in Advertising also condemned Goop for allegedly claiming that body stickers reduce inflammation, vitamin D3 guards against autoimmune diseases and cancer, a hair treatment treats anxiety and depression, and vaginal eggs increase hormonal balance and prevent the uterus from slipping.

The group said that on Aug. 11 it told Goop about what it considered problematic health claims and warned that if Goop didn’t fix its language by Aug. 18, it would alert regulators. The day before the deadline, it said, it also provided a list of web pages with unsubstantiated claims. “Despite being handed this information, Goop to date has only made limited changes to its marketing,” the group wrote Tuesday.

A Goop spokesperson told BuzzFeed News in a statement, “Goop is dedicated to introducing unique products and offerings and encouraging constructive conversation surrounding new ideas. We are receptive to feedback and consistently seek to improve the quality of the products and information referenced on our site.”

The spokesperson said that the company “responded promptly and in good faith to the initial outreach from representatives of TINA and hoped to engage with them to address their concerns. Unfortunately, they provided limited information and made threats under arbitrary deadlines which were not reasonable under the circumstances.

“Nevertheless, while we believe that TINA’s description of our interactions is misleading and their claims unsubstantiated and unfounded, we will continue to evaluate our products and our content and make those improvements that we believe are reasonable and necessary in the interests of our community of users,” the spokesperson added.

This isn’t the first time Goop has faced criticism of its marketing. In August 2016, it said it would voluntarily stop making certain claims about the Moon Juice dietary supplements “brain dust” and “action dust.” That came after an investigative unit of the advertising industry said Goop was required to substantiate its claims that the products could improve customers’ energy, stamina, thinking ability, and capacity for stress.

But Goop has started to respond to some of its critics. In July, it fired back at Jen Gunter, a San Francisco obstetrician-gynecologist who rails against many of Goop’s health claims — the kinds Truth in Advertising is now taking to task.

Source: BuzzFeed