The post What is container orchestration? appeared first on Mirantis | Pure Play Open Cloud.

Over the past several years, applications built in containers, such as Docker containers, have become common, but running a production application means more than simply creating a container and running it on Docker Engine. It means container orchestration.

Understanding container orchestration

Before we get into the specifics of how it works, we should understand what is meant by container orchestration.

Containerizing an application makes it easier to run in diverse environments, because Docker Engine acts as the application’s conceptual “home”. However, it doesn’t solve all of the problems involved in running a production application. Just the opposite, in fact.

A non-containerized application assumes that it will be installed and run manually, or at least delivered via a virtual machine. But a containerized application has to be placed, started, and provided with resources. This kind of container automation is why you need container orchestration tools.

These Docker container orchestration tools perform the following tasks:

Determine what resources, such as compute nodes and storage, are available

Determine the best node (or nodes) on which to run specific containers

Allocate resources such as storage and networking

Start one or more copies of the desired containers, based on redundancy requirements

Monitor the containers and, if one or more of them is no longer functional, replace them
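The tasks above can be sketched as a small placement-and-repair loop. This is only a toy model under stated assumptions: the `Node` class, the `pick_node` and `reconcile` helpers, and the "spread across nodes, then prefer the most free CPU" rule are all hypothetical and are not how Swarm or Kubernetes actually schedule containers.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """Hypothetical compute node that tracks free CPU and running containers."""
    name: str
    free_cpus: float
    containers: list = field(default_factory=list)


def pick_node(nodes, cpus_needed):
    """Pick a node with enough CPU, preferring fewer containers, then more free CPU."""
    candidates = [n for n in nodes if n.free_cpus >= cpus_needed]
    if not candidates:
        return None
    return min(candidates, key=lambda n: (len(n.containers), -n.free_cpus))


def reconcile(nodes, image, desired_replicas, cpus_needed):
    """Start copies of `image` until the desired number is running; return the count."""
    running = sum(n.containers.count(image) for n in nodes)
    while running < desired_replicas:
        node = pick_node(nodes, cpus_needed)
        if node is None:
            break  # no capacity left; a real orchestrator would report this
        node.containers.append(image)
        node.free_cpus -= cpus_needed
        running += 1
    return running


nodes = [Node("worker-1", 2.0), Node("worker-2", 4.0)]
reconcile(nodes, "atsea_app", desired_replicas=2, cpus_needed=1.0)

# Simulate a container failure on worker-2, then let the loop replace it.
nodes[1].containers.remove("atsea_app")
nodes[1].free_cpus += 1.0
reconcile(nodes, "atsea_app", desired_replicas=2, cpus_needed=1.0)

for node in nodes:
    print(node.name, node.containers)
```

Calling `reconcile` repeatedly is the important design point: the caller states the desired number of copies, and the loop works out what has to be started to get there.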

Multiple container orchestration tools exist, and they don’t all handle objects in the same way.

How to plan for container orchestration

In an ideal situation, your application should not be dependent on which container orchestration platform you’re using. Instead, you should be able to orchestrate your containers using any platform as long as you configure that platform correctly.

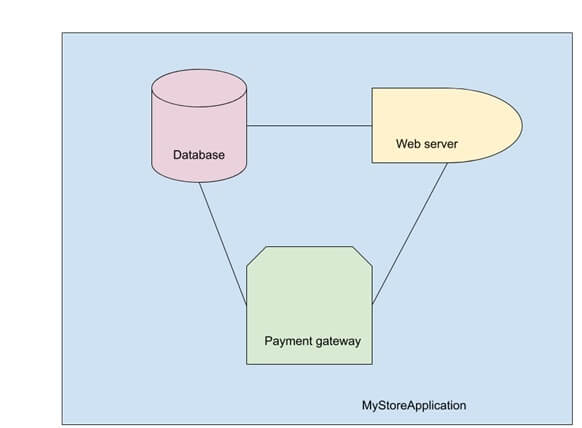

All of this relies on knowing the architecture of your application, so that you can express that architecture in the orchestration platform’s configuration rather than in the application itself. For example, let’s say we’re building an e-commerce site.

We have a database, web server, and payment gateway, all of which communicate over a network. We also have all of the various passwords needed for them to talk to each other.

The compute, network, storage, and secrets are all resources that need to be handled by the container orchestration platform, but how that happens depends on the platform that you choose.
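One way to picture this is to write the architecture down in a platform-neutral form first and only then derive what the platform has to provide. The sketch below is illustrative only: the `Service` class and `required_resources` helper are hypothetical, and the image, network, and secret names are simplified from the example stack used later in this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Service:
    """Hypothetical, platform-neutral description of one service."""
    name: str
    image: str
    networks: tuple = ()
    secrets: tuple = ()


app = [
    Service("database", "dockersamples/atsea_db", ("atsea-net",), ("postgres-password",)),
    Service("appserver", "dockersamples/atsea_app", ("atsea-net",), ("postgres-password",)),
    Service("payment_gateway", "cna0/atsea_gateway", ("atsea-net",), ("staging-token",)),
]


def required_resources(services):
    """Collect the shared resources any orchestration platform would have to provide."""
    return {
        "networks": sorted({n for s in services for n in s.networks}),
        "secrets": sorted({x for s in services for x in s.secrets}),
    }


print(required_resources(app))
# {'networks': ['atsea-net'], 'secrets': ['postgres-password', 'staging-token']}
```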

Types of container orchestration platforms

Because different environments require different levels of orchestration, the market has spun off multiple container orchestration tools over the last few years. While they all do the same basic job of container automation, they work in different ways and were designed for different scenarios.

Docker Swarm Orchestration

To the engineers at Docker, orchestration was a capability to be provided as a first-class citizen. As such, Swarm is included with Docker itself. Enabling Swarm mode is straightforward, as is adding nodes.

Docker Swarm enables developers to define applications in a single file, such as:

```yaml
# Stack file for the e-commerce example: three services plus the
# network and secrets they share.
version: "3.7"
services:
  database:
    image: dockersamples/atsea_db
    ports:
      - "5432"
    environment:
      POSTGRES_USER: gordonuser
      POSTGRES_DB_PASSWORD_FILE: /run/secrets/postgres-password
      POSTGRES_DB: atsea
      PGDATA: /var/lib/postgresql/data/pgdata
    networks:
      - atsea-net
    secrets:
      - domain-key
      - postgres-password
    deploy:
      placement:
        constraints:
          - 'node.role == worker'
  appserver:
    image: dockersamples/atsea_app
    ports:
      - "8080"
    networks:
      - atsea-net
    environment:
      METADATA: proxy-handles-tls
    deploy:
      labels:
        com.docker.lb.hosts: atsea.docker-ee-stable.cna.mirantis.cloud
        com.docker.lb.port: 8080
        com.docker.lb.network: atsea-net
        com.docker.lb.ssl_cert: wildcard_docker-ee-stable_crt
        com.docker.lb.ssl_key: wildcard_docker-ee-stable_key
        com.docker.lb.redirects: http://atsea.docker-ee-stable.cna.mirantis.cloud,https://atsea.docker-ee-stable.cna.mirantis.cloud
        com.libkompose.expose.namespace.selector: "app.kubernetes.io/name:ingress-nginx"
      replicas: 2
      update_config:
        parallelism: 2
        failure_action: rollback
      placement:
        constraints:
          - 'node.role == worker'
      restart_policy:
        condition: on-failure
        delay: 5s
        max_attempts: 3
        window: 120s
    secrets:
      - domain-key
      - postgres-password
  payment_gateway:
    image: cna0/atsea_gateway
    secrets:
      - staging-token
    networks:
      - atsea-net
    deploy:
      update_config:
        failure_action: rollback
      placement:
        constraints:
          - 'node.role == worker'
# Shared objects referenced by the services above
networks:
  atsea-net:
    name: atsea-net
secrets:
  domain-key:
    name: wildcard_docker-ee-stable_key
    file: ./wildcards.docker-ee-stable.key
  domain-crt:
    name: wildcard_docker-ee-stable_crt
    file: ./wildcards.docker-ee-stable.crt
  staging-token:
    name: staging_token
    file: ./staging_fake_secret.txt
  postgres-password:
    name: postgres_password
    file: ./postgres_password.txt
```

In this example, we have three services: the database, the application server, and the payment gateway, all of which include their own particular configurations. These configurations also refer to objects such as networks and secrets, which are defined independently.
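Because services only refer to networks and secrets by name, it can be useful to check that every reference points at something that is actually defined. The following is a minimal sketch, not part of Docker's tooling: `check_references` is a hypothetical helper, the dictionary is a hand-written, trimmed-down mirror of the stack file above, and it assumes the short list syntax for `networks` and `secrets`.

```python
def check_references(compose):
    """Return (undefined, unused) names across top-level networks and secrets."""
    undefined, unused = set(), set()
    for kind in ("networks", "secrets"):
        defined = set(compose.get(kind, {}))
        used = {
            name
            for service in compose["services"].values()
            for name in service.get(kind, [])
        }
        undefined |= used - defined  # referenced by a service but never defined
        unused |= defined - used     # defined but not referenced by any service
    return sorted(undefined), sorted(unused)


# Hand-written mirror of the example stack (images and deploy settings omitted).
compose = {
    "services": {
        "database": {"networks": ["atsea-net"], "secrets": ["domain-key", "postgres-password"]},
        "appserver": {"networks": ["atsea-net"], "secrets": ["domain-key", "postgres-password"]},
        "payment_gateway": {"networks": ["atsea-net"], "secrets": ["staging-token"]},
    },
    "networks": {"atsea-net": {}},
    "secrets": {
        "domain-key": {},
        "domain-crt": {},
        "staging-token": {},
        "postgres-password": {},
    },
}

undefined, unused = check_references(compose)
print(undefined)  # []
print(unused)     # ['domain-crt']
```

For this stack the check finds no dangling references; it only notes that `domain-crt` is defined without being attached to a service directly (the load balancer labels refer to the certificate by its external name instead).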

The advantage of Swarm is that it has a small learning curve, and developers can run their applications on their laptops in the same environment the applications will use in production. The disadvantage is that it’s not as full-featured as its companion, Kubernetes.

Kubernetes Orchestration

While Swarm is still widely used in many contexts, the acknowledged champion of container orchestration is Kubernetes. Like Swarm, Kubernetes enables developers to create resources such as groups of replicas, networking, and storage, but it’s done in a completely different way.

For one thing, Kubernetes is a separate piece of software; in order to use it, you must either install a distribution locally or have access to an existing cluster. For another, the entire architecture of applications and how they’re created is totally different from Swarm. For example, the application we created in the earlier example would look like this:

```yaml
# Secret values under "data" are base64-encoded.
apiVersion: v1
data:
  staging-token: c3RhZ2luZw0K
kind: Secret
metadata:
  creationTimestamp: null
  labels:
    io.kompose.service: staging-token
  name: staging-token
type: Opaque
---
apiVersion: v1
data:
  postgres-password: cXdhcG9sMTMNCg==
kind: Secret
metadata:
  creationTimestamp: null
  labels:
    io.kompose.service: postgres-password
  name: postgres-password
type: Opaque
---
apiVersion: apps/v1
kind: Deployment
metadata:
  annotations:
    kompose.version: 1.21.0 (HEAD)
  creationTimestamp: null
  labels:
    io.kompose.service: payment-gateway
  name: payment-gateway
spec:
  replicas: 1
  selector:
    matchLabels:
      io.kompose.service: payment-gateway
  strategy: {}
  template:
    metadata:
      annotations:
        kompose.version: 1.21.0 (HEAD)
      creationTimestamp: null
      labels:
        io.kompose.network/atsea-net: "true"
        io.kompose.service: payment-gateway
    spec:
      containers:
      - image: cna0/atsea_gateway
        name: payment-gateway
        resources: {}
        volumeMounts:
        - mountPath: /run/secrets/staging-token
          name: staging-token
      nodeSelector:
        node-role.kubernetes.io/worker: "true"
      restartPolicy: Always
      volumes:
      - name: staging-token
        secret:
          items:
          - key: staging-token
            path: staging-token
          secretName: staging-token
status: {}
---
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  creationTimestamp: null
  name: ingress-appserver
spec:
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          app.kubernetes.io/name: ingress-nginx
    - podSelector: {}
  podSelector:
    matchLabels:
      io.kompose.network/atsea-net: "true"
  policyTypes:
  - Ingress
---
apiVersion: v1
data:
  domain-key: <snip>
kind: Secret
metadata:
  creationTimestamp: null
  labels:
    io.kompose.service: domain-key
  name: domain-key
type: Opaque
---
apiVersion: v1
data:
  domain-crt: <snip>
kind: Secret
metadata:
  creationTimestamp: null
  labels:
    io.kompose.service: domain-crt
  name: domain-crt
type: Opaque
---
apiVersion: v1
kind: Service
metadata:
  annotations:
    kompose.version: 1.21.0 (HEAD)
  creationTimestamp: null
  labels:
    io.kompose.service: database
  name: database
spec:
  ports:
  - name: "5432"
    port: 5432
    targetPort: 5432
  selector:
    io.kompose.service: database
status:
  loadBalancer: {}
---
apiVersion: apps/v1
kind: Deployment
metadata:
  annotations:
    kompose.version: 1.21.0 (HEAD)
  creationTimestamp: null
  labels:
    io.kompose.service: database
  name: database
spec:
  replicas: 1
  selector:
    matchLabels:
      io.kompose.service: database
  strategy: {}
  template:
    metadata:
      annotations:
        kompose.version: 1.21.0 (HEAD)
      creationTimestamp: null
      labels:
        io.kompose.network/atsea-net: "true"
        io.kompose.service: database
    spec:
      containers:
      - env:
        - name: PGDATA
          value: /var/lib/postgresql/data/pgdata
        - name: POSTGRES_DB
          value: atsea
        - name: POSTGRES_DB_PASSWORD_FILE
          value: /run/secrets/postgres-password
        - name: POSTGRES_USER
          value: gordonuser
        image: dockersamples/atsea_db
        name: database
        ports:
        - containerPort: 5432
        resources: {}
        volumeMounts:
        - mountPath: /run/secrets/domain-key
          name: domain-key
        - mountPath: /run/secrets/postgres-password
          name: postgres-password
      nodeSelector:
        node-role.kubernetes.io/worker: "true"
      restartPolicy: Always
      volumes:
      - name: domain-key
        secret:
          items:
          - key: domain-key
            path: domain-key
          secretName: domain-key
      - name: postgres-password
        secret:
          items:
          - key: postgres-password
            path: postgres-password
          secretName: postgres-password
status: {}
---
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  creationTimestamp: null
  name: atsea-net
spec:
  ingress:
  - from:
    - podSelector:
        matchLabels:
          io.kompose.network/atsea-net: "true"
  podSelector:
    matchLabels:
      io.kompose.network/atsea-net: "true"
---
apiVersion: v1
kind: Service
metadata:
  annotations:
    kompose.version: 1.21.0 (HEAD)
  creationTimestamp: null
  labels:
    io.kompose.service: appserver
  name: appserver
spec:
  ports:
  - name: "8080"
    port: 8080
    targetPort: 8080
  selector:
    io.kompose.service: appserver
status:
  loadBalancer: {}
---
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    io.kompose.network/atsea-net: "true"
    io.kompose.service: appserver
  name: appserver
spec:
  containers:
  - env:
    - name: METADATA
      value: proxy-handles-tls
    image: dockersamples/atsea_app
    name: appserver
    ports:
    - containerPort: 8080
    resources: {}
    volumeMounts:
    - mountPath: /run/secrets/domain-key
      name: domain-key
    - mountPath: /run/secrets/postgres-password
      name: postgres-password
  nodeSelector:
    node-role.kubernetes.io/worker: "true"
  restartPolicy: OnFailure
  volumes:
  - name: domain-key
    secret:
      items:
      - key: domain-key
        path: domain-key
      secretName: domain-key
  - name: postgres-password
    secret:
      items:
      - key: postgres-password
        path: postgres-password
      secretName: postgres-password
status: {}
---
# Note: the networking.k8s.io/v1beta1 Ingress API was removed in Kubernetes 1.22;
# newer clusters require networking.k8s.io/v1, which uses a different backend syntax.
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  annotations:
    kompose.version: 1.21.0 (HEAD)
  creationTimestamp: null
  labels:
    io.kompose.service: appserver
  name: appserver
spec:
  rules:
  - host: atsea.docker-ee-stable.cna.mirantis.cloud
    http:
      paths:
      - backend:
          serviceName: appserver
          servicePort: 8080
  tls:
  - hosts:
    - atsea.docker-ee-stable.cna.mirantis.cloud
    secretName: tls
status:
  loadBalancer: {}
```

The application is the same; it’s just created in a different way. As you can see, the web application server, the database, and the payment gateway are all still created, just with a different structure. In addition, the supporting structures, such as networks and secrets, must be created as well.
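One detail worth noticing in the Kubernetes version is that the values in each Secret's `data` field are base64-encoded rather than stored as plain text, and base64 is an encoding, not encryption. The short sketch below decodes the two values that appear in the manifests above; `encode_secret` is a hypothetical helper name used only for illustration.

```python
import base64

# Values copied from the Secret manifests above.
secret_data = {
    "staging-token": "c3RhZ2luZw0K",
    "postgres-password": "cXdhcG9sMTMNCg==",
}

for key, encoded in secret_data.items():
    decoded = base64.b64decode(encoded)
    print(key, repr(decoded))
# staging-token b'staging\r\n'
# postgres-password b'qwapol13\r\n'


def encode_secret(plaintext: str) -> str:
    """Encode a value the way a Secret's `data` field expects it."""
    return base64.b64encode(plaintext.encode("utf-8")).decode("ascii")


print(encode_secret("staging\r\n"))  # c3RhZ2luZw0K
```

The trailing `\r\n` in both decoded values suggests the original secret files ended with a Windows-style line break, which then became part of the secret itself. Anyone who can read the manifest can recover the values, so manifests like these should be handled as carefully as the passwords they contain.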

The additional complexity does bring a number of benefits, however. Kubernetes is much more full-featured than Swarm, and can be appropriate in both small and large environments.

Where to find container orchestration

Not only are there different types of container orchestration, you can also find it in different places, depending on your situation.

Local desktop/laptop

Most developers work on their desktop or laptop machine, so it’s convenient if the target container orchestration platform is available at that level.

For Swarm users, the process is straightforward; Swarm is already part of Docker and just needs to be enabled.

For Kubernetes, the developer needs to take an additional step to install Kubernetes on their machine, but there are several tools that make this possible, such as kubeadm.

Internal network

Once the developer is ready to deploy, if the application will live in an on-premises data center, the developer typically won’t need to install a cluster, because administrators will already have installed one. Instead, the developer connects using the connection information the administrators provide.

Administrators can deploy a number of different cluster types; for example, enterprise-grade Docker Swarm clusters and Kubernetes clusters can be deployed by Docker Enterprise Container Cloud.

AWS

Businesses that run their infrastructure on Amazon Web Services have a number of choices. For example, you can install Docker Enterprise on Amazon EC2 compute servers, or you can use Docker Enterprise Container Cloud to deploy clusters directly on Amazon Web Services. You also have the option to use AWS’s own container services, such as Amazon Elastic Container Service (ECS) or Amazon Elastic Kubernetes Service (EKS).

Google

Choices for Google Cloud are similar: you can install a container management platform such as Docker Enterprise, or you can use Google Kubernetes Engine to spin up clusters using Google’s hardware, software, and API.

Azure

The situation is the same for Azure: you can either deploy a distribution such as Docker Enterprise on compute nodes, providing both Swarm and Kubernetes capabilities, or use the Azure Kubernetes Service to provide Kubernetes clusters to your users.

Getting started with container orchestration

The best way to get started with container orchestration is to simply pick a system and try it out! You can try installing kubeadm, or you can make it easy on yourself and install a full system such as Docker Enterprise, which provides you with multiple options for container orchestration platforms.

Source: Mirantis